As of January 27, 2026, the global technology landscape is witnessing a seismic shift in the semiconductor supply chain, anchored by India’s aggressive transition from a design-heavy "back office" to a self-sustaining manufacturing and product-owning powerhouse. At the 39th International Conference on VLSI Design and Embedded Systems (VLSI 2026), held earlier this month in Pune, industry leaders and government officials signaled the end of the "service-only" era. The new mandate is "product-led growth," a strategic pivot designed to ensure that both the intellectual property (IP) and the final hardware, ranging from AI-optimized server chips to automotive microcontrollers, are owned and branded within India.

This development marks a definitive milestone in the India Semiconductor Mission (ISM), moving beyond the initial "groundbreaking" ceremonies of 2023 and 2024 into a phase of high-volume commercial output. With major facilities from Micron Technology (NASDAQ: MU) and the Tata Group nearing operational status, India is no longer just a participant in the global chip race; it has emerged as a "Secondary Global Anchor" for the industry. This achievement corresponds directly to Item 22 on our "Top 25 AI and Tech Milestones of 2026," highlighting the successful integration of domestic silicon production with the global AI infrastructure.

The Technical Pivot: From Digital Twins to First Silicon

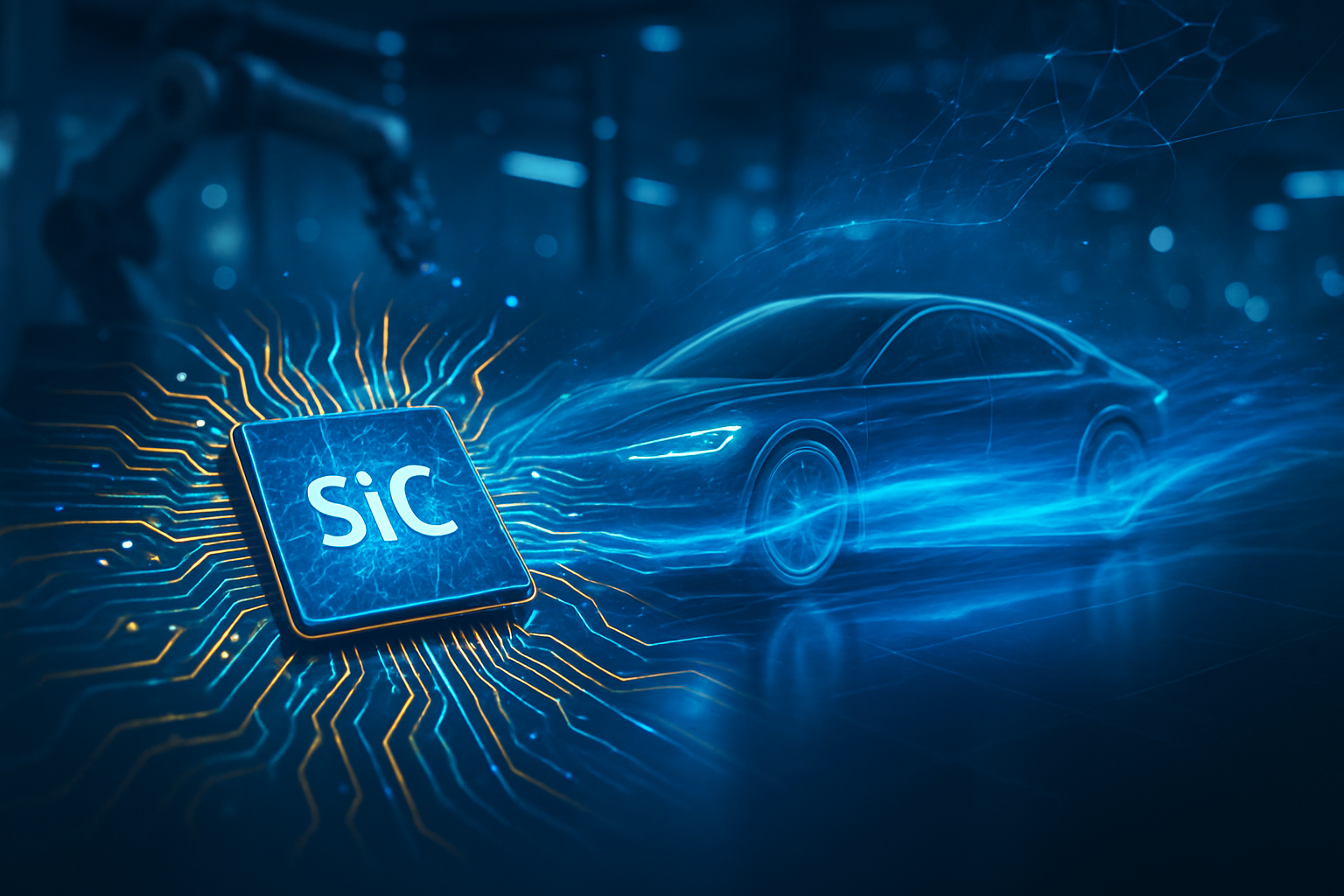

The VLSI 2026 conference provided a deep dive into the technical roadmap that will define India’s semiconductor output over the next three years. A primary focus of the event was the "1-TOPS Program," an indigenous talent and design initiative aimed at creating ultra-low-power Edge AI chips. Unlike previous years, when the focus was on general-purpose processing, the 2026 agenda is dominated by specialized silicon. These chips use 28nm and 40nm nodes, mature technologies that are far from the 3nm "leading edge" but critical for the burgeoning electric vehicle (EV) and industrial IoT markets.

Technically, India is leapfrogging traditional manufacturing hurdles through the commercialization of "Virtual Twin" technology. In a landmark partnership with Lam Research (NASDAQ: LRCX), the ISM has deployed SEMulator3D software across its training hubs. The software lets engineers simulate complex nanofabrication processes in a virtual environment with 99% accuracy before a single wafer is processed. This "AI-first" approach to manufacturing has reportedly reduced the "talent-to-fab" timeline, the time it takes for a new engineer to become productive in a cleanroom, by 40%, a feat that was central to the discussions in Pune.

Initial reactions from the global research community have been overwhelmingly positive. Dr. Chen-Wei Liu, a senior researcher at the International Semiconductor Consortium, noted that "India's focus on mature nodes for Edge AI is a masterstroke of pragmatism. While the world fights over 2nm for data centers, India is securing the foundation of the physical AI world—cars, drones, and smart cities." This strategy differentiates India from China’s "at-all-costs" pursuit of the leading edge, focusing instead on market-ready reliability and sovereign IP.

Corporate Chess: Micron, Tata, and the Global Supply Chain

The strategic implications for global tech giants are profound. Micron Technology (NASDAQ: MU) is currently in the final "silicon bring-up" phase at its $2.75 billion ATMP (Assembly, Test, Marking, and Packaging) facility in Sanand, Gujarat. With commercial production slated to begin in late February 2026, Micron is positioned to use India as a primary hub for high-volume memory packaging, reducing its reliance on East Asian supply chains that have been increasingly fraught with geopolitical tension.

Meanwhile, Tata Electronics, a subsidiary of the venerable Tata Group, is making strides that have put legacy semiconductor firms on notice. The Dholera "Mega-Fab," built in partnership with Taiwan’s PSMC, is currently installing advanced lithography equipment from ASML (NASDAQ: ASML) and is on track for "First Silicon" by December 2026. Simultaneously, Tata’s $3.2 billion OSAT plant in Jagiroad, Assam, is expected to commission its first phase by April 2026. Once fully operational, this facility is projected to churn out 48 million chips per day. This massive capacity directly benefits companies like Tata Motors (NYSE: TTM), which are increasingly moving toward vertically integrated EV production.

The competitive landscape is shifting as a result. Design software leaders like Synopsys (NASDAQ: SNPS) and Cadence (NASDAQ: CDNS) are expanding their Indian footprints, no longer just for engineering support but also to co-develop Indian-branded "System-on-Chip" (SoC) products. This shift could disrupt the traditional relationship between Western chip designers and Asian foundries, as India begins to offer a vertically integrated alternative that combines low-cost design with high-capacity assembly and testing.

Item 22: India as a Secondary Global Anchor

The emergence of India as a global semiconductor hub is not merely a regional success story; it is a critical stabilization factor for the global economy. In recent reports by the World Economic Forum and KPMG, this development was categorized as "Item 22" on the list of most significant tech shifts of 2026. The classification identifies India as a "Secondary Global Anchor," a status granted to nations capable of sustaining global supply chains during periods of disruption in primary hubs like Taiwan or South Korea.

This shift fits into a broader trend of "de-risking" that has dominated the AI and hardware sectors since 2024. By establishing a robust manufacturing base that is deeply integrated with its massive AI software ecosystem—such as the Bhashini language platform—India is creating a blueprint for "democratized technology access." This was recently cited by UNESCO as a global template for how developing nations can achieve digital sovereignty without falling into the "trap" of being perpetual importers of high-end silicon.

The main concerns, however, center on resource management. The sheer scale of the Dholera and Sanand projects demands enormous volumes of water and a stable electricity supply. While the Indian government has promised "green corridors" for these fabs, the environmental impact of such industrial expansion remains a point of contention among climate policy experts. Nevertheless, compared to the semiconductor breakthroughs of the early 2010s, India’s 2026 milestone is distinct because it is being built on a foundation of sustainability and AI-driven efficiency.

The Road to Semicon 2.0

Looking ahead, the next 12 to 24 months will be a "proving ground" for the India Semiconductor Mission. The government is already drafting "Semicon 2.0," a policy successor expected to be announced in late 2026. This new iteration is rumored to offer even more aggressive subsidies for advanced 7nm and 5nm nodes, as well as an "R&D-led equity fund" to support the very product-led startups that were the stars of VLSI 2026.

One of the most anticipated applications on the horizon is the development of an Indian-designed AI server chip, specifically tailored for the "India Stack." If successful, this would allow the country to run its massive public digital infrastructure on entirely indigenous silicon by 2028. Experts predict that as Micron and Tata hit their stride in the coming months, we will see a flurry of joint ventures between Indian firms and European automotive giants looking for a "China Plus One" manufacturing strategy.

The challenge remains the "last mile" of logistics. While the fabs are being built, the surrounding infrastructure—high-speed rail, dedicated power grids, and specialized logistics—must keep pace. The "product-led" growth mantra will only succeed if these chips can reach the global market as efficiently as they are designed.

A New Chapter in Silicon History

The developments of January 2026 represent a "coming of age" for the India Semiconductor Mission. From the successful conclusion of the VLSI 2026 conference to the imminent production start at Micron’s Sanand plant, the momentum is undeniable. India has moved past the stage of aspirational policy and into the era of commercial execution. The shift to a "product-led" strategy ensures that the value created by Indian engineers stays within the country, fostering a new generation of "Silicon Sovereigns."

In the history of artificial intelligence and hardware, 2026 will likely be remembered as the year the semiconductor map was permanently redrawn. India’s rise as a "Secondary Global Anchor" provides a much-needed buffer for a world that has become dangerously dependent on a handful of geographic points of failure. As we watch the first Indian-packaged chips roll off the assembly lines in the coming weeks, the significance of Item 22 becomes clear: the "Silicon Century" has officially found its second home.

Investors and tech analysts should keep a close eye on the "First Silicon" announcements from Dholera later this year, as well as the upcoming "Semicon 2.0" policy drafts, which will dictate the pace of India’s move into the ultra-advanced node market.
