The University of Pittsburgh has officially inaugurated the Hub for AI and Data Science Leadership (HAIL), a centralized initiative designed to unify the university’s sprawling artificial intelligence efforts into a cohesive engine for academic innovation and regional economic growth. Launched in December 2025, HAIL represents a significant shift from theoretical AI research toward a "practical-first" approach, aiming to equip students and the local workforce with the specific competencies required to navigate an AI-driven economy.

The establishment of HAIL marks a pivotal moment for Western Pennsylvania, positioning Pittsburgh as a primary node in the national AI landscape. By integrating advanced generative AI tools directly into the student experience and forging deep ties with industry leaders, the University of Pittsburgh is moving beyond the "ivory tower" model of technology development. Instead, it is creating a scalable framework where AI is treated as a foundational literacy, as essential to the modern workforce as digital communication or data analysis.

Bridging the Gap: The Technical Architecture of the "Campus of the Future"

At the heart of HAIL is a sophisticated technical infrastructure developed in collaboration with Amazon.com, Inc. (NASDAQ:AMZN) and the AI safety and research company Anthropic. Pitt has distinguished itself as the first academic institution to secure an enterprise-wide agreement for "Claude for Education," a specialized suite of tools built on Anthropic’s most advanced models, including Claude Sonnet 4.5. Unlike consumer-facing chatbots, these models are configured to use a "Socratic Method" of interaction, serving as learning companions that guide students through complex problem-solving rather than simply supplying answers.

The hub’s digital backbone relies on Amazon Bedrock, a fully managed service that allows the university to build and scale generative AI applications within a secure, private cloud environment. This infrastructure supports "PittGPT," a proprietary platform that provides students and faculty with access to high-performance large language models (LLMs) while ensuring that sensitive data—such as research intellectual property or student records protected by FERPA—is never used to train public models. This "closed-loop" system addresses one of the primary hurdles to AI adoption in higher education: the risk of data leakage and the loss of institutional privacy.

Beyond the software layer, HAIL leverages significant hardware investments through the Pitt Center for Research Computing. The university has deployed specialized GPU clusters featuring NVIDIA (NASDAQ:NVDA) A100 and L40S nodes, providing the raw compute power faculty need to conduct high-level machine learning research on-site. This hybrid approach, which combines the scalability of the AWS cloud with the control of on-premises high-performance computing, allows Pitt to support everything from undergraduate AI fluency to cutting-edge research in computational pathology.

Industry Integration and the Rise of "AI Avenue"

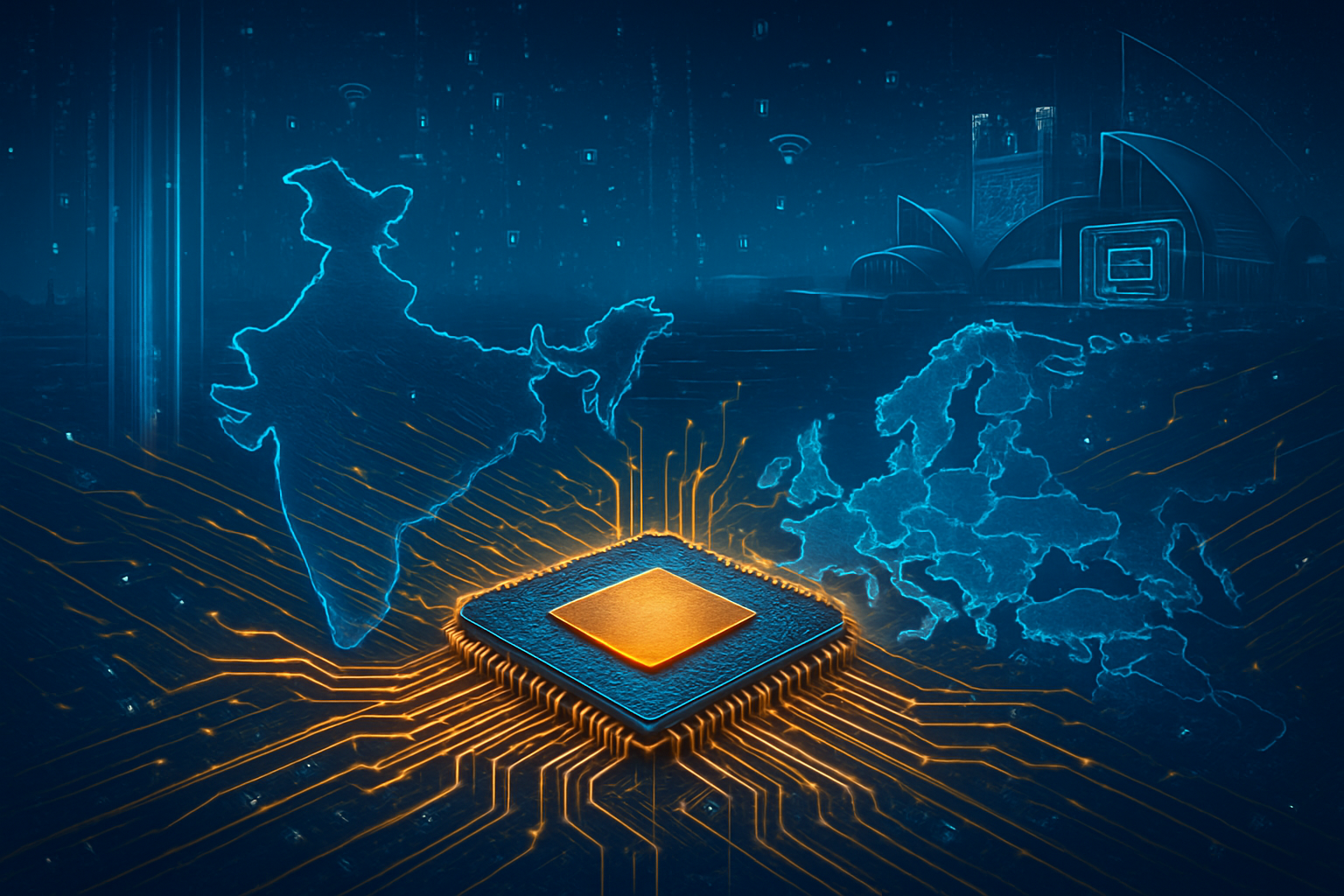

The launch of HAIL has immediate implications for the broader tech ecosystem, particularly for the companies that have increasingly viewed Pittsburgh as a strategic hub. The university’s efforts are a central component of the city’s "AI Avenue," a high-tech corridor near Bakery Square that includes major offices for Google (NASDAQ:GOOGL) and Duolingo (NASDAQ:DUOL). By aligning its curriculum with the needs of these tech giants and local startups, Pitt is creating a direct pipeline of "AI-ready" talent, a move that provides a significant competitive advantage to companies operating in the region.

Strategic partnerships are a cornerstone of the HAIL model. A $10 million investment from Leidos (NYSE:LDOS) has already established the Computational Pathology and AI Center of Excellence (CPACE), which focuses on AI-driven cancer detection. Furthermore, a joint initiative with NVIDIA has led to the creation of a "Joint Center for AI and Intelligent Systems," which bridges the gap between clinical medicine and AI-driven manufacturing. These collaborations suggest that the future of AI development will not be confined to isolated labs but will instead thrive in "innovation districts" where academia and industry share both data and physical space.

For tech giants like Amazon and NVIDIA, Pitt serves as a "living laboratory" to test the deployment of AI at scale. The success of the "Campus of the Future" model could provide a blueprint for how these companies market their enterprise AI solutions to other large-scale institutions, including other universities, healthcare systems, and government agencies. By demonstrating that AI can be deployed ethically and securely across a population of tens of thousands of users, Pitt is helping to de-risk the technology for the broader market.

A Regional Model for Economic Transition and Ethical AI

The significance of HAIL extends beyond the borders of the campus, serving as a model for how "Rust Belt" cities can transition into the "Tech Belt." The initiative is deeply integrated with regional economic development projects, most notably the BioForge at Hazelwood Green. This $250 million biomanufacturing facility, a partnership with ElevateBio, is powered by AI and designed to revitalize a former industrial site. Through HAIL, the university is ensuring that the high-tech jobs created at BioForge are accessible to local residents by offering "Life Sciences Career Pathways" and AI-driven vocational training.

This focus on "broad economic inclusion" addresses a major concern in the AI community: the potential for the technology to exacerbate economic inequality. By placing AI training in Community Engagement Centers (CECs) in neighborhoods like Hazelwood and Homewood, Pitt is attempting to democratize access to the tools of the future. The hub’s leadership, including Director Michael Colaresi, has emphasized that "Responsible Data Science" is the foundation of the initiative, ensuring that AI development is transparent, ethical, and focused on human-centric outcomes.

In many ways, HAIL represents a maturation of the AI trend. While previous milestones in the field were defined by the release of increasingly large models, this development is defined by integration. It mirrors the historical shift of the internet from a specialized research tool to a ubiquitous utility. By treating AI as a utility that must be managed, taught, and secured, the University of Pittsburgh is establishing a new standard for how society adapts to transformative technological shifts.

The Horizon: Bio-Manufacturing and the 2026 Curriculum

Looking ahead, the influence of HAIL is expected to grow as its first dedicated degree programs come online. In 2026, the university will launch its first fully online undergraduate degree, a B.S. in Health Informatics, which will integrate AI training into the core of the clinical curriculum. This move signals a long-term strategy to embed AI fluency into every discipline, from nursing and social work to business and the arts.

The next phase of HAIL’s evolution will likely involve the expansion of "agentic AI": systems that can not only answer questions but also perform complex tasks autonomously. As the university refines its "PittGPT" platform, experts predict that AI agents will eventually handle administrative tasks like course scheduling and financial aid processing, allowing human staff to focus on high-touch student support. The challenge, however, is ensuring that these systems stay unbiased and that the "human-in-the-loop" philosophy holds as the technology becomes more autonomous.

Conclusion: A New Standard for the AI Era

The launch of the Hub for AI and Data Science Leadership at the University of Pittsburgh is more than just an administrative reorganization; it is a bold statement on the future of higher education. By combining enterprise-grade infrastructure from AWS and Anthropic with a commitment to regional workforce development, Pitt has created a comprehensive ecosystem that addresses the technical, ethical, and economic challenges of the AI era.

As the "Campus of the Future" initiative matures, it will be a critical case study for other institutions worldwide. The key takeaway is that the successful adoption of AI requires more than just high-performance hardware; it requires a culture of "AI fluency" and a commitment to community-wide benefits. In the coming months, the tech industry will be watching closely as Pitt begins to graduate its first cohort of "AI-native" students, potentially setting a new benchmark for what it means to be a prepared worker in the 21st century.

This content is intended for informational purposes only and represents analysis of current AI developments.

TokenRing AI delivers enterprise-grade solutions for multi-agent AI workflow orchestration, AI-powered development tools, and seamless remote collaboration platforms.

For more information, visit https://www.tokenring.ai/.