The semiconductor industry has officially crossed the $1 trillion annual revenue threshold in 2026, marking a monumental shift in the global economy. This milestone, achieved nearly four years ahead of pre-pandemic projections, serves as definitive proof that the "AI Super-cycle" is not merely a temporary bubble but a fundamental restructuring of the world’s technological foundations. Driven by an insatiable demand for high-performance computing, the industry has transitioned from its historically cyclical nature into a period of unprecedented, sustained expansion.

According to the latest data from market research firm Omdia, the global semiconductor market is projected to grow by a staggering 30.7% year-over-year in 2026. This growth is being propelled almost entirely by the Computing and Data Storage segment, which is expected to surge by 41.4% this year alone. As hyperscalers and sovereign nations scramble to build out the infrastructure required for trillion-parameter AI models, the silicon landscape is being redrawn, placing a premium on advanced logic and high-bandwidth memory that has left traditional segments of the market in the rearview mirror.
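To put the headline growth figure in context, the short calculation below backs out the prior-year revenue implied by 30.7% growth. It is a rough sketch that assumes 2026 revenue lands at exactly $1 trillion (the milestone itself, not a reported total); the function name is illustrative.

```python
def implied_prior_year_revenue(current_revenue: float, growth_rate: float) -> float:
    """Back out last year's revenue from this year's revenue and YoY growth."""
    return current_revenue / (1.0 + growth_rate)


# Assumption: 2026 revenue of exactly $1,000B (the milestone, not a reported figure).
REVENUE_2026_B = 1000.0
# Year-over-year growth projected by Omdia, as cited in the article.
GROWTH_2026 = 0.307

base_2025 = implied_prior_year_revenue(REVENUE_2026_B, GROWTH_2026)
added_revenue = REVENUE_2026_B - base_2025

print(f"Implied 2025 revenue: ${base_2025:.0f}B")
print(f"Revenue added in 2026: ${added_revenue:.0f}B")
```

Under that assumption, the industry would have had to add roughly $235 billion in revenue in a single year.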

The Technical Engine of the $1 Trillion Milestone

The surge to $1 trillion is underpinned by a radical shift in chip architecture and manufacturing complexity. At the heart of this growth is the move toward 2-nanometer (2nm) process nodes and the mass adoption of High Bandwidth Memory 4 (HBM4). These technologies are designed specifically to overcome the "memory wall"—the physical bottleneck where the speed of data transfer between the processor and memory cannot keep pace with the processing power of the chip. By integrating HBM4 directly onto the chip package using advanced 2.5D and 3D packaging techniques, manufacturers are achieving the throughput necessary for the next generation of generative AI.
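One common way to reason about the memory wall is the roofline model, which caps attainable performance at the lower of peak compute and memory bandwidth multiplied by arithmetic intensity. The article does not invoke this model itself, and the sketch below uses hypothetical hardware numbers, not vendor specifications.

```python
def attainable_tflops(peak_tflops: float, bandwidth_tb_s: float, flops_per_byte: float) -> float:
    """Roofline estimate: performance is capped by compute or by memory traffic."""
    # TB/s * FLOP/byte = TFLOP/s that the memory system can keep fed.
    memory_bound = bandwidth_tb_s * flops_per_byte
    return min(peak_tflops, memory_bound)


# Hypothetical accelerator; all numbers are illustrative assumptions.
PEAK_TFLOPS = 1000.0

for bandwidth in (2.0, 8.0):  # TB/s: a slower vs. a faster memory stack
    for intensity in (10.0, 100.0, 1000.0):  # FLOPs performed per byte moved
        perf = attainable_tflops(PEAK_TFLOPS, bandwidth, intensity)
        regime = "memory-bound" if perf < PEAK_TFLOPS else "compute-bound"
        print(f"{bandwidth:>4.1f} TB/s, {intensity:>6.1f} FLOP/B -> {perf:>6.1f} TFLOP/s ({regime})")
```

In this toy setup, a workload at 100 FLOPs per byte reaches only 200 TFLOP/s on the slower memory and 800 TFLOP/s on the faster one, which is why higher memory bandwidth matters for workloads that move a lot of data per operation.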

NVIDIA (NASDAQ: NVDA) continues to dominate this technical frontier with its Blackwell Ultra and the newly unveiled Rubin architectures. These platforms utilize CoWoS (Chip-on-Wafer-on-Substrate) technology from TSMC (NYSE: TSM) to fuse multiple compute dies and memory stacks into a single, massive powerhouse. The complexity of these systems is reflected in their price points and the specialized infrastructure required to run them, including liquid cooling and high-speed InfiniBand networking.

Initial reactions from the AI research community suggest that this hardware leap is enabling a transition from "Large Language Models" to "World Models," AI systems capable of reasoning across physical and temporal dimensions in real time. Experts note that 2026-era silicon delivers roughly 100 times the FP8 compute of the chips that powered the initial ChatGPT boom just three years ago. This rapid iteration has forced a complete overhaul of data center design, shifting the focus from general-purpose CPUs to dense clusters of specialized AI accelerators.
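For a sense of scale, the sketch below converts the "roughly 100 times in three years" claim into an annual multiple and a doubling time. It assumes smooth exponential improvement over exactly three years, which is a simplification; real hardware gains arrive in discrete product generations.

```python
import math

# Figures taken from the article's claim; treated here as exact for illustration.
TOTAL_SPEEDUP = 100.0
YEARS = 3.0

# Constant yearly multiple that compounds to the total speedup.
annual_multiple = TOTAL_SPEEDUP ** (1.0 / YEARS)

# Time needed to double compute at that constant rate.
doubling_time_months = 12.0 * YEARS * math.log(2.0) / math.log(TOTAL_SPEEDUP)

print(f"Implied annual improvement: {annual_multiple:.2f}x per year")
print(f"Implied doubling time: {doubling_time_months:.1f} months")
```

Under these assumptions the claim works out to about 4.6x per year, or a doubling roughly every five and a half months.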

Hyperscaler Expenditures and Market Concentration

The financial gravity of the $1 trillion milestone is centered around a remarkably small group of players. The "Big Four" hyperscalers—Microsoft (NASDAQ: MSFT), Alphabet (NASDAQ: GOOGL), Amazon (NASDAQ: AMZN), and Meta (NASDAQ: META)—are projected to reach a combined capital expenditure (CapEx) of $500 billion in 2026. This half-trillion-dollar investment is almost exclusively directed toward AI infrastructure, creating a "winner-take-most" dynamic in the cloud and hardware sectors.

NVIDIA remains the primary beneficiary, maintaining a market share of over 90% in the AI GPU space. However, the sheer scale of demand has allowed for the rise of specialized "silicon-as-a-service" models. TSMC, as the world’s leading foundry, has seen its 2026 CapEx climb to a projected $52–$56 billion to keep up with orders for 2nm logic and advanced packaging. This has created a strategic advantage for companies that can secure guaranteed capacity, leading to long-term supply agreements that resemble sovereign treaties more than corporate contracts.

Meanwhile, the memory sector is undergoing its own "NVIDIA moment." Micron (NASDAQ: MU) and SK Hynix (KRX: 000660) have reported that their HBM4 production lines are fully committed through the end of 2026. Samsung (KRX: 005930) has also pivoted aggressively to capture the AI memory market, recognizing that the era of low-margin commodity DRAM is being replaced by high-value, AI-specific silicon. This concentration of wealth and technology among a few key firms is disrupting the traditional competitive landscape, as startups and smaller chipmakers find it increasingly difficult to compete with the R&D budgets and manufacturing scale of the giants.

The AI Super-Cycle and Global Economic Implications

This $1 trillion milestone represents more than just a financial figure; it marks the arrival of the "AI Super-cycle." Unlike previous cycles driven by PCs or smartphones, the AI era is characterized by "Giga-cycle" dynamics—massive, multi-year waves of investment that are less sensitive to interest rate fluctuations or consumer spending habits. The demand is now being driven by corporate automation, scientific discovery, and "Sovereign AI," where nations invest in domestic computing power as a matter of national security and economic autonomy.

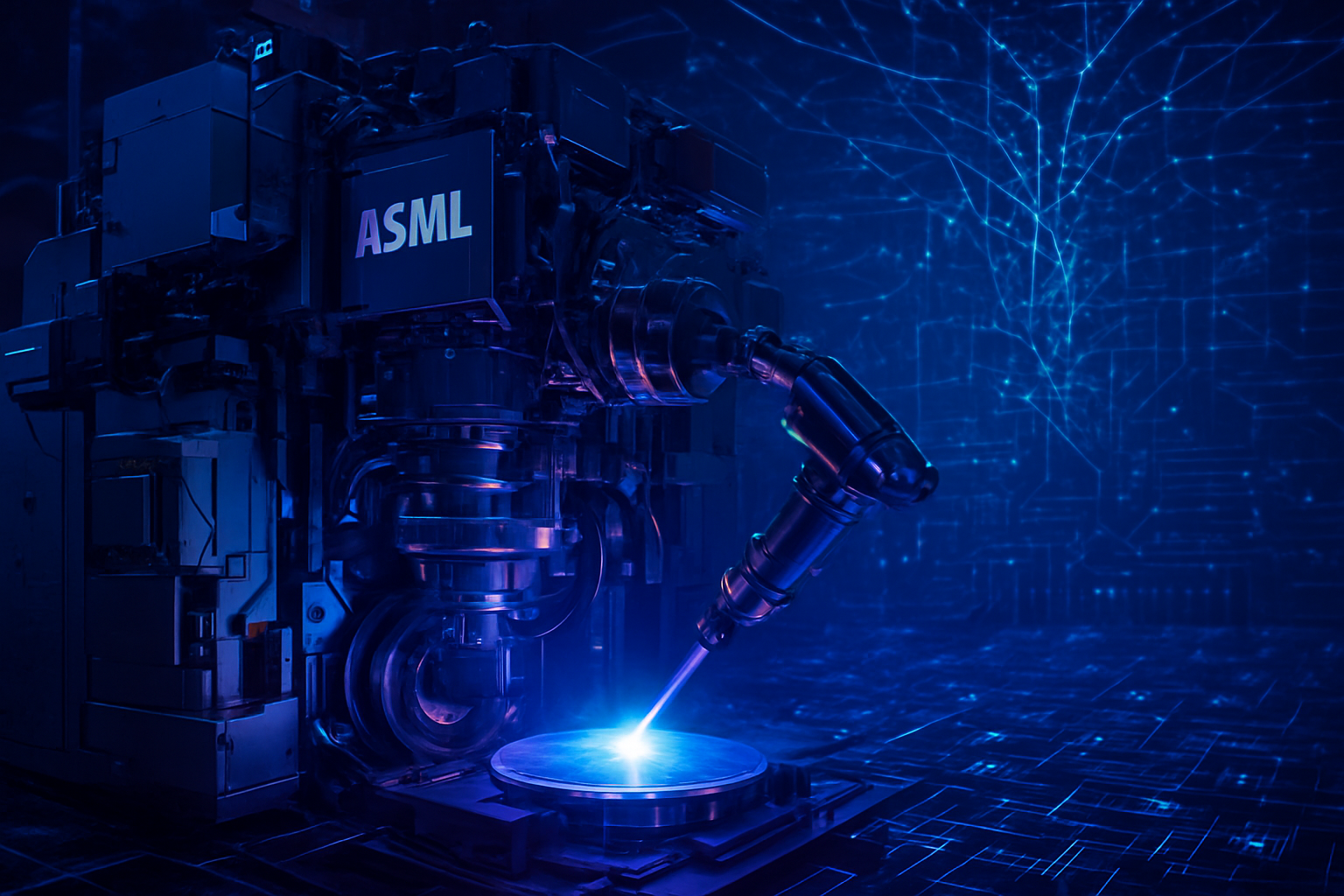

When compared to previous milestones, such as the semiconductor industry crossing the $100 billion mark in the 1990s or the $500 billion mark in 2021, the jump to $1 trillion is unprecedented in its speed and concentration. However, this rapid growth brings significant concerns. The industry’s heavy reliance on a single leading-edge foundry, TSMC, and a single supplier of Extreme Ultraviolet (EUV) lithography machines, ASML (NASDAQ: ASML), creates a fragile global supply chain. Any geopolitical instability in East Asia or disruption in the supply of EUV machines could send shockwaves through the $1 trillion market.

Furthermore, the environmental impact of this expansion is coming under intense scrutiny. The energy requirements of 2026-class AI data centers are immense, prompting a parallel boom in nuclear and renewable energy investments by tech giants. The industry is now at a crossroads where its growth is limited not by consumer demand, but by the physical availability of electricity and the raw materials needed for advanced chip fabrication.

The Horizon: 2027 and Beyond

Looking ahead, the semiconductor industry shows no signs of slowing down. Near-term developments include the wider deployment of High-NA EUV lithography, which will allow for even greater transistor density and energy efficiency. We are also seeing the first commercial applications of silicon photonics, which use light instead of electricity to transmit data between chips, potentially solving the next great bottleneck in AI scaling.

On the horizon, researchers are exploring "neuromorphic" chips that mimic the human brain's architecture to provide AI capabilities with a fraction of the power consumption. While these are not expected to disrupt the $1 trillion market in 2026, they represent the next frontier of the super-cycle. The challenge for the coming years will be moving from training-heavy AI to "inference-at-the-edge," where powerful AI models run locally on devices rather than in massive data centers.

Experts predict that if the current trajectory holds, the semiconductor industry could approach the $1.5 trillion mark by the end of the decade. However, this will require addressing the talent shortage in chip design and engineering, as well as navigating the increasingly complex web of global trade restrictions and "chip-act" subsidies that are fragmenting the global market into regional hubs.
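The growth needed to reach that figure can be estimated with a compound annual growth rate (CAGR) calculation. This sketch assumes a $1 trillion starting point in 2026 and reads "end of the decade" as 2030, giving four years of growth; both are assumptions for illustration.

```python
def required_cagr(start_value: float, end_value: float, years: int) -> float:
    """Constant annual growth rate that takes start_value to end_value."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Assumptions: $1.0T in 2026, $1.5T in 2030 (four compounding years).
rate = required_cagr(1.0, 1.5, 4)

print(f"Required growth: {rate:.1%} per year")
```

That works out to a little under 11% a year, well below the 30.7% projected for 2026, so the forecast implies a substantial slowdown from this year's pace rather than a continuation of it.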

A New Era for Silicon

The achievement of $1 trillion in annual revenue is a watershed moment for the semiconductor industry. It confirms that silicon is now the most critical commodity in the modern world, surpassing oil in its strategic importance to global GDP. The projected 30.7% growth rate in 2026 is a testament to the transformative power of artificial intelligence and the massive capital investments being made to realize its potential.

As we look at the key takeaways, it is clear that the Computing and Data Storage segment has become the new heart of the industry, and the "AI Super-cycle" has rewritten the rules of market cyclicality. For investors, policymakers, and technologists, the significance of this development cannot be overstated. We have entered an era where computing power is the primary driver of economic progress.

In the coming weeks and months, the industry will be watching for the first quarterly earnings reports of 2026 to see if the projected growth holds. Attention will also be focused on the rollout of High-NA EUV systems and any further announcements regarding sovereign AI investments. For now, the semiconductor industry stands as the undisputed titan of the global economy, fueled by the relentless march of artificial intelligence.

This content is intended for informational purposes only and represents analysis of current AI developments.
