As the calendar turns to 2026, the artificial intelligence industry has arrived at a pivotal architectural crossroads. For decades, the movement of data within computers has relied on the flow of electrons through copper wiring. However, as AI clusters scale toward the "million-GPU" milestone, the physical limits of electricity—long whispered about as the "Copper Wall"—have finally been reached. In the high-stakes race to build the infrastructure for Artificial General Intelligence (AGI), the industry is officially abandoning traditional electrical interconnects in favor of Silicon Photonics and Co-Packaged Optics (CPO).

This transition marks one of the most significant shifts in computing history. By integrating laser-based data transmission directly onto the silicon chip, industry titans like Broadcom (NASDAQ:AVGO) and NVIDIA (NASDAQ:NVDA) are enabling petabit-per-second connectivity with energy efficiency that was previously thought impossible. The arrival of these optical "superhighways" in early 2026 signals the end of the copper era in high-performance data centers, effectively decoupling bandwidth growth from the crippling power constraints that threatened to stall AI progress.

Breaking the Copper Wall: The Technical Leap to CPO

The technical crisis necessitating this shift is rooted in the physics of 224 Gbps signaling. At these speeds, the reach of traditional passive copper cables has shrunk to less than one meter, and the power required to force electrical signals through these wires has skyrocketed. In early 2025, data center operators reported that interconnects were consuming nearly 30% of total cluster power. The solution, arriving in volume this year, is Co-Packaged Optics. Unlike traditional pluggable transceivers that sit on the edge of a switch, CPO brings the optical engine directly into the chip's package.

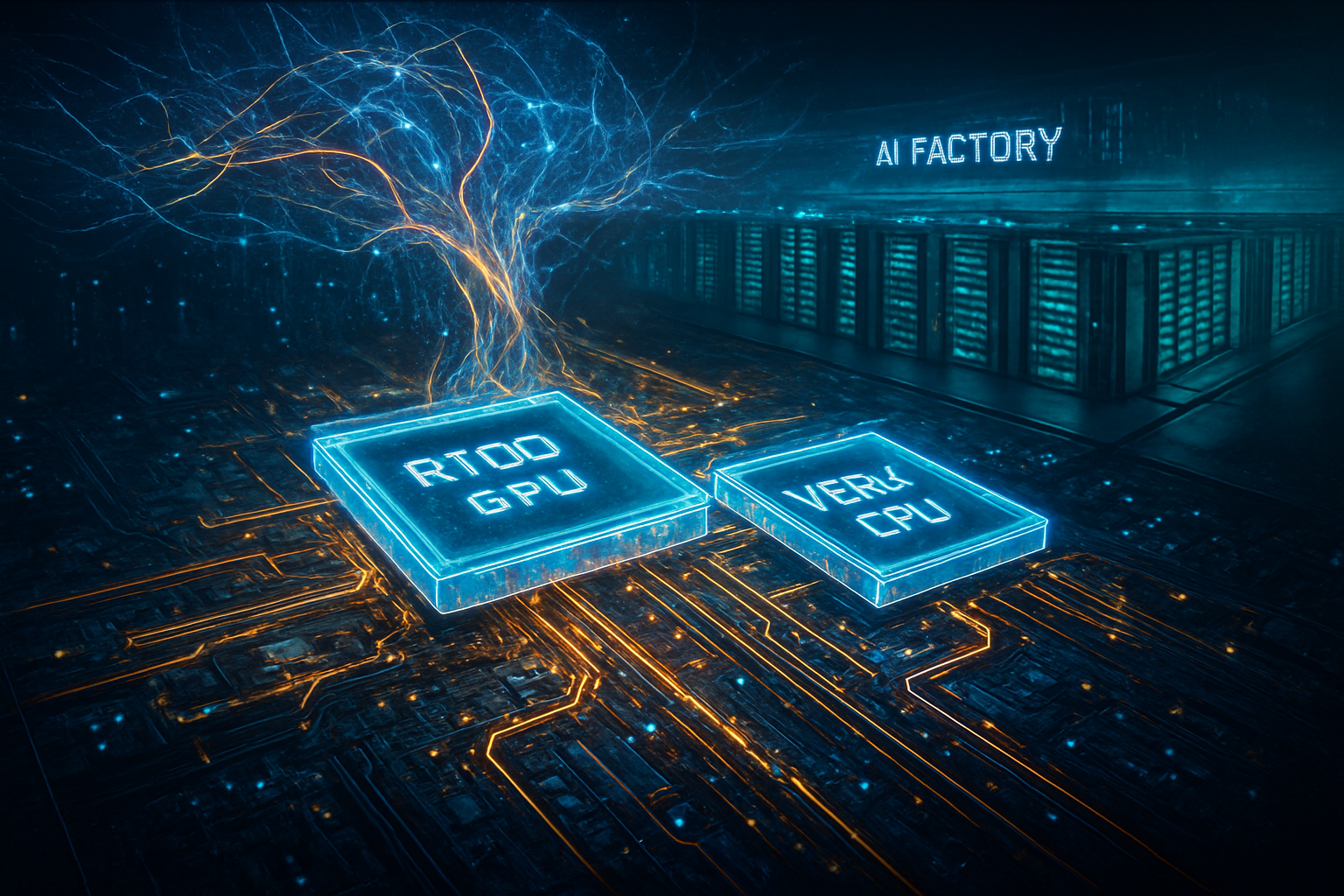

Broadcom (NASDAQ:AVGO) has set the pace with its 2026 flagship, the Tomahawk 6-Davisson switch. Boasting a staggering 102.4 Terabits per second (Tbps) of aggregate capacity, the Davisson utilizes TSMC (NYSE:TSM) COUPE technology to stack photonic engines directly onto the switching silicon. This integration reduces data transmission energy by over 70%, moving from roughly 15 picojoules per bit (pJ/bit) in traditional systems to less than 5 pJ/bit. Meanwhile, NVIDIA (NASDAQ:NVDA) has launched its Quantum-X Photonics InfiniBand platform, specifically designed to link its "million-GPU" clusters. These systems replace bulky copper cables with thin, liquid-cooled fiber optics that provide 10x better network resiliency and nanosecond-level latency.
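The energy figures above are easier to judge as watts. The following is a minimal back-of-the-envelope sketch, not a vendor calculation. It assumes the full 102.4 Tbps is driven at the quoted energy per bit and that the 30% interconnect share cited earlier applies to the whole cluster. The function names are hypothetical and exist only for illustration.

```python
def io_power_watts(throughput_tbps: float, energy_pj_per_bit: float) -> float:
    """Power in watts = (bits per second) x (joules per bit).

    Tbps carries a factor of 1e12 and pJ a factor of 1e-12, so the two
    cancel and the product of the raw numbers is already in watts.
    """
    return throughput_tbps * energy_pj_per_bit


def reduction_fraction(before: float, after: float) -> float:
    """Fractional saving when moving from `before` to `after`."""
    return (before - after) / before


def cluster_power_saving(interconnect_share: float, interconnect_reduction: float) -> float:
    """Share of total cluster power saved if only the interconnect improves."""
    return interconnect_share * interconnect_reduction


if __name__ == "__main__":
    capacity_tbps = 102.4          # aggregate switch capacity quoted in the article
    legacy_pj, cpo_pj = 15.0, 5.0  # pJ/bit figures quoted in the article

    legacy_w = io_power_watts(capacity_tbps, legacy_pj)
    cpo_w = io_power_watts(capacity_tbps, cpo_pj)
    print(f"legacy optics: {legacy_w:.0f} W, CPO: {cpo_w:.0f} W")
    print(f"per-bit saving at exactly 5 pJ/bit: {reduction_fraction(legacy_pj, cpo_pj):.1%}")

    # Assumption: interconnects draw 30% of cluster power and CPO cuts that by 70%.
    print(f"cluster-level saving: {cluster_power_saving(0.30, 0.70):.0%}")
```

Under these assumptions a fully loaded switch would spend about 1,536 W on data transmission at 15 pJ/bit and about 512 W at 5 pJ/bit. Note that exactly 5 pJ/bit is a saving of roughly 67%; a reduction of over 70% implies an operating point below 4.5 pJ/bit, which is consistent with the "less than 5 pJ/bit" wording. At the cluster level, a 70% cut to a 30% share would save about 21% of total power.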

The AI research community has reacted with a mix of relief and awe. Experts at leading labs note that without CPO, the "scaling laws" of large language models would have hit a hard ceiling due to I/O bottlenecks. The ability to move data at light speed across a massive fabric allows a million GPUs to behave as a single, coherent computational entity. This technical breakthrough is not merely an incremental upgrade; it is the foundational plumbing required for the next generation of multi-trillion parameter models.

The New Power Players: Market Shifts and Strategic Moats

The shift to Silicon Photonics is fundamentally reordering the semiconductor landscape. Broadcom (NASDAQ:AVGO) has emerged as the clear leader in the Ethernet-based merchant silicon market, leveraging its $73 billion AI backlog to solidify its role as the primary alternative to NVIDIA’s proprietary ecosystem. By providing custom CPO-integrated ASICs to hyperscalers like Meta (NASDAQ:META) and OpenAI, Broadcom is helping these giants build "hardware moats" that are optimized for their specific AI architectures, often achieving 30-50% better performance-per-watt than general-purpose hardware.

NVIDIA (NASDAQ:NVDA), however, remains the dominant force in the "scale-up" fabric. By vertically integrating CPO into its NVLink and InfiniBand stacks, NVIDIA is effectively locking customers into a high-performance ecosystem where the network is as inseparable from the GPU as the memory. This strategy has forced competitors like Marvell (NASDAQ:MRVL) and Cisco (NASDAQ:CSCO) to innovate rapidly. Marvell, in particular, has positioned itself as a key challenger following its acquisition of Celestial AI, offering a "Photonic Fabric" that allows for optical memory pooling—a technology that lets thousands of GPUs share a massive, low-latency memory pool across an entire data center.

This transition has also created a "paradox of disruption" for traditional optical component makers like Lumentum (NASDAQ:LITE) and Coherent (NYSE:COHR). While the traditional pluggable module business is being cannibalized by CPO, these companies have successfully pivoted to become "laser foundries." As the primary suppliers of the high-powered Indium Phosphide (InP) lasers required for CPO, their role in the supply chain has shifted from assembly to critical component manufacturing, making them indispensable partners to the silicon giants.

A Global Imperative: Energy, Sustainability, and the Race for AGI

Beyond the technical and market implications, the move to Silicon Photonics is a response to a looming environmental and societal crisis. By 2026, global data center electricity usage is projected to reach approximately 1,050 terawatt-hours per year, nearly the total annual electricity consumption of Japan. In tech hubs like Northern Virginia and Ireland, "grid nationalism" has become a reality, with local governments restricting new data center permits due to massive spikes in power demand. Silicon Photonics provides a critical "pressure valve" for these grids by drastically reducing the energy overhead of AI training.

The societal significance of this transition cannot be overstated. We are witnessing the construction of "Gigafactory" scale clusters, such as xAI’s Colossus 2 and Microsoft’s (NASDAQ:MSFT) Fairwater site, which are designed to house upwards of one million GPUs. These facilities are the physical manifestations of the race for AGI. Without the energy savings provided by optical interconnects, the carbon footprint and water usage (required for cooling) of these sites would be politically and environmentally untenable. CPO is effectively the "green technology" that allows the AI revolution to continue scaling.

Furthermore, this shift highlights the world's extreme dependence on TSMC (NYSE:TSM). As the only foundry currently capable of the ultra-precise 3D chip-stacking required for CPO, TSMC has become the ultimate bottleneck in the global AI supply chain. The complexity of manufacturing these integrated photonic/electronic packages means that any disruption at TSMC’s advanced packaging facilities in 2026 could stall global AI development more effectively than any previous chip shortage.

The Horizon: Optical Computing and the Post-Silicon Future

Looking ahead, 2026 is just the beginning of the optical revolution. While CPO currently focuses on data transmission, the next frontier is optical computation. Startups like Lightmatter are already sampling "Photonic Compute Units" that perform matrix multiplications using light rather than electricity. These chips promise a 100x improvement in efficiency for specific AI inference tasks, potentially replacing traditional electrical transistors in the late 2020s.

In the near term, the industry is already pathfinding for the 448G-per-lane standard. This will involve the use of plasmonic modulators, ultra-compact devices that can operate at bandwidths exceeding 145 GHz while consuming less than 1 pJ/bit. Experts predict that by 2028, the "Copper Era" will be a distant memory even in consumer-level networking, as the cost of silicon photonics drops and the technology trickles down from the data center to the edge.

The challenges remain significant, particularly regarding the reliability of laser sources and the sheer complexity of field-repairing co-packaged systems. However, the momentum is irreversible. The industry has realized that the only way to keep pace with the exponential growth of AI is to stop fighting the physics of electrons and start harnessing the speed of light.

Summary: A New Architecture for a New Intelligence

The transition to Silicon Photonics and Co-Packaged Optics in 2026 represents a fundamental decoupling of interconnect bandwidth from energy consumption. By shattering the "Copper Wall," companies like Broadcom, NVIDIA, and TSMC have cleared the path for the million-GPU clusters that will likely train the first true AGI models. The key takeaways from this shift include a reduction of over 70% in interconnect energy per bit, the rise of custom optical ASICs for major AI labs, and a renewed focus on data center sustainability.

In the history of computing, we will look back at 2026 as the year the industry "saw the light." The long-term impact will be felt in every corner of society, from the speed of AI breakthroughs to the stability of our global power grids. In the coming months, watch for the first performance benchmarks from xAI’s million-GPU cluster and further announcements from the OIF (Optical Internetworking Forum) regarding the 448G standard. The era of copper is over; the era of the optical supercomputer has begun.

