The semiconductor industry is moving beyond the flatlands of traditional chip design. As of December 2025, the "2D barrier" that has governed Moore’s Law for decades is being dismantled by a new generation of vertical 3D logic chips. By stacking memory and compute layers like floors in a skyscraper, researchers and tech giants are unlocking performance levels previously deemed impossible. This architectural shift is arguably the most significant change in chip design since the invention of the integrated circuit, and it directly attacks the "memory wall," the data transfer bottleneck that has long hampered AI development.

This breakthrough is not merely a theoretical exercise; it is a direct response to the insatiable power and data demands of generative AI and large-scale neural networks. By moving data vertically over microns rather than horizontally over millimeters, these 3D stacks reduce power consumption while speeding up AI workloads, with early prototypes reporting several-fold throughput gains and simulations pointing to energy-efficiency gains of orders of magnitude. As the world approaches 2026, the transition to 3D logic is set to redefine the competitive landscape for hardware manufacturers and AI labs alike.

The Technical Leap: From 2.5D to Monolithic 3D

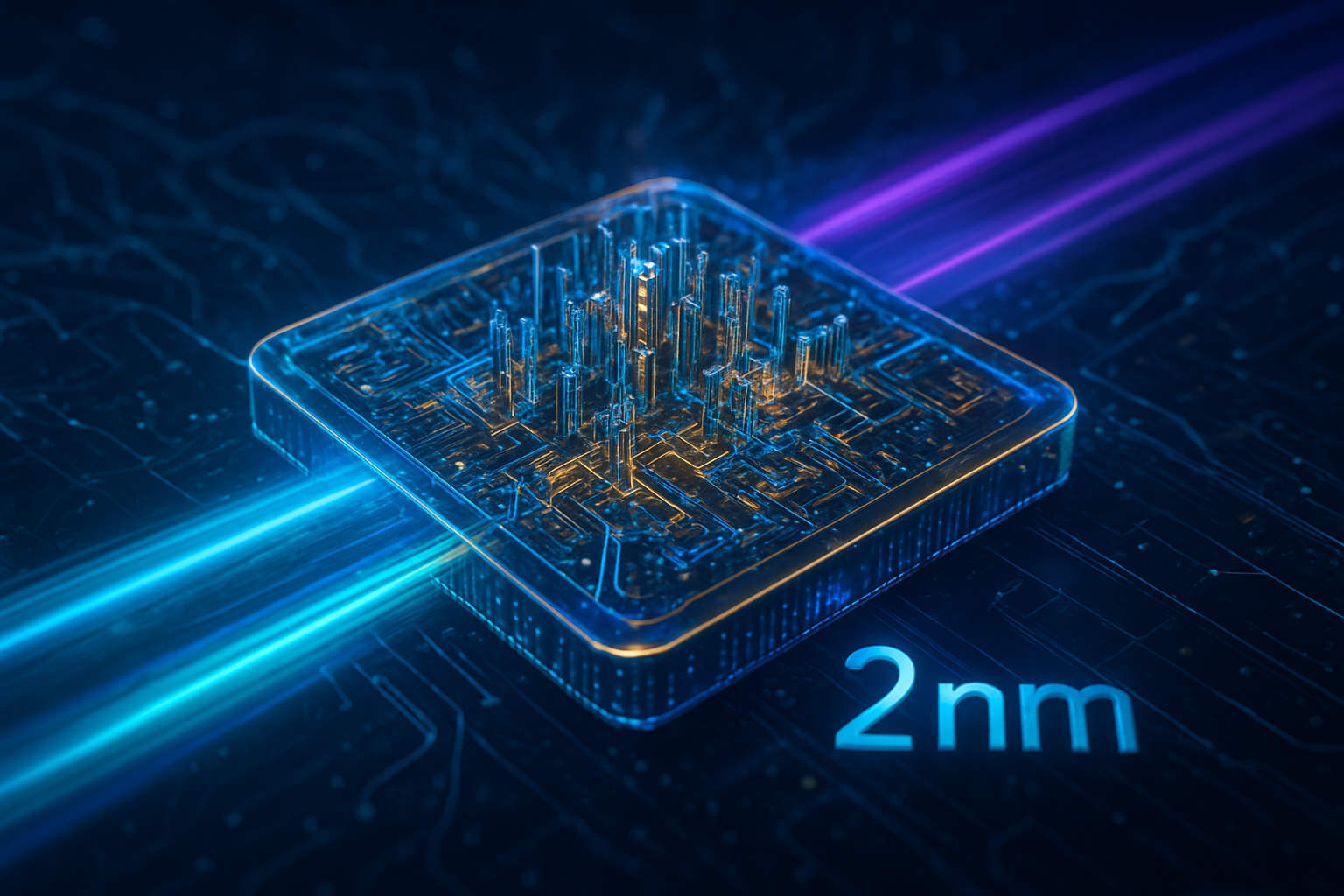

The transition to true 3D logic represents a departure from the "2.5D" packaging that has dominated the industry for the last few years. While 2.5D designs, such as NVIDIA’s (NASDAQ: NVDA) Blackwell architecture, place chiplets side-by-side on a silicon interposer, the new 3D paradigm involves direct vertical bonding. Leading this charge is TSMC (NYSE: TSM) with its System on Integrated Chips (SoIC) platform. In late 2025, TSMC achieved a 6μm bond pitch, allowing for logic-on-logic stacking that offers interconnect densities ten times higher than previous generations. This enables different chip components to communicate with nearly the same speed and efficiency as if they were on a single piece of silicon, but with the modularity of a multi-story building.
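
To put the 6μm figure in perspective, the following Python sketch shows how bond pitch translates into connection density. It assumes bond pads laid out on a uniform square grid, which is a simplification made here for illustration and not a published detail of the SoIC process; the function names are hypothetical.

```python
import math


def connections_per_mm2(pitch_um: float) -> float:
    """Bond pads per square millimetre on a square grid with the given pitch."""
    pads_per_mm = 1000.0 / pitch_um  # 1 mm = 1000 um
    return pads_per_mm ** 2


def pitch_for_lower_density(pitch_um: float, ratio: float) -> float:
    """Pitch whose density is `ratio` times lower than the density at `pitch_um`.

    Density scales with 1 / pitch^2, so pitch scales with sqrt(ratio).
    """
    return pitch_um * math.sqrt(ratio)


density_6um = connections_per_mm2(6.0)
implied_older_pitch = pitch_for_lower_density(6.0, 10.0)

print(f"{density_6um:,.0f} pads per mm^2 at a 6 um pitch")
print(f"A tenfold lower density implies a pitch of about {implied_older_pitch:.1f} um")
```

Under the square-grid assumption, a 6μm pitch works out to roughly 28,000 connections per square millimetre. The implied pitch of about 19μm is only what a tenfold density gap would mean in this model; the article does not state which pitch the earlier generations used.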

Complementing this is the rise of Complementary FET (CFET) technology, which was a highlight of the December 2025 IEDM conference. Unlike traditional FinFETs or Gate-All-Around (GAA) transistors that sit side-by-side, CFETs stack n-type and p-type transistors on top of each other. This verticality effectively doubles the transistor density for the same footprint, providing a roadmap for the upcoming "A10" (1nm) nodes. Furthermore, Intel (NASDAQ: INTC) has successfully deployed its Foveros Direct 3D technology in the new Clearwater Forest Xeon processors. This uses hybrid bonding to create copper-to-copper connections between layers, reducing latency and allowing for a more compact, power-efficient design than any 2D predecessor.

The most radical advancement comes from a collaboration between Stanford University, MIT, and SkyWater Technology (NASDAQ: SKYT). They have demonstrated a "monolithic 3D" AI chip that integrates Carbon Nanotube FETs (CNFETs) and Resistive RAM (RRAM) directly over traditional CMOS logic. This approach doesn't just stack finished chips; it builds the entire structure layer-by-layer in a single manufacturing process. Initial tests show a 4x improvement in throughput for large language models (LLMs), with simulations suggesting that taller stacks could yield a 100x to 1,000x gain in energy efficiency. This differs from existing technology by removing the physical separation between memory and compute, allowing AI models to "think" where they "remember."

Market Disruption and the New Hardware Arms Race

The shift to 3D logic is recalibrating the power dynamics among the world’s most valuable companies. NVIDIA (NASDAQ: NVDA) remains at the forefront with its newly announced "Rubin" R100 platform. By utilizing 8-Hi HBM4 memory stacks and 3D chiplet designs, NVIDIA is targeting a memory bandwidth of 13 TB/s—nearly double that of its predecessor. This allows the company to maintain its lead in the AI training market, where data movement is the primary cost. However, the complexity of 3D stacking has also opened a window for Intel (NASDAQ: INTC) to reclaim its "process leadership" title. Intel’s 18A node and PowerVia 2.0—a backside power delivery system that moves power routing to the bottom of the chip—have become the benchmark for high-performance AI silicon in 2025.
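
A back-of-the-envelope calculation shows why the bandwidth figure matters. In the sketch below, a memory-bound workload is modeled as needing to stream every model weight from memory once per pass, so bandwidth sets a floor on the time per pass. The 13 TB/s value comes from the target quoted above; the 70-billion-parameter model and the 1 byte per parameter are hypothetical inputs chosen for illustration, and the model ignores compute time, caching, and batching.

```python
def min_seconds_per_pass(model_bytes: float, bandwidth_bytes_per_s: float) -> float:
    """Lower bound on the time needed to read every weight once from memory."""
    return model_bytes / bandwidth_bytes_per_s


BANDWIDTH_BYTES_PER_S = 13e12  # 13 TB/s, the target quoted in the article
PARAMS = 70e9                  # hypothetical model size (assumption)
BYTES_PER_PARAM = 1            # hypothetical 8-bit weights (assumption)

seconds = min_seconds_per_pass(PARAMS * BYTES_PER_PARAM, BANDWIDTH_BYTES_PER_S)
passes_per_second = 1.0 / seconds

print(f"At least {seconds * 1e3:.2f} ms per full pass over the weights")
print(f"At most {passes_per_second:.0f} passes per second for a single stream")
```

With these assumed inputs the floor is a little over 5 ms per pass. Halving the bandwidth doubles that floor, which is why a near-doubling of memory bandwidth translates so directly into throughput for workloads dominated by data movement.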

For specialized AI startups and hyperscalers like Amazon (NASDAQ: AMZN) and Google (NASDAQ: GOOGL), 3D logic offers a path to custom silicon that is far more efficient than general-purpose GPUs. By stacking their own proprietary AI accelerators directly onto high-bandwidth memory (HBM) using Samsung’s (KRX: 005930) SAINT-D platform, these companies can reduce the energy cost of AI inference by up to 70%. This is a strategic advantage in a market where electricity costs and data center cooling are becoming the primary constraints on AI scaling. Samsung’s ability to stack DRAM directly on logic without an interposer is a direct challenge to the traditional supply chain, potentially disrupting the dominance of dedicated packaging firms.

The competitive implications extend to the foundry model itself. As 3D stacking requires tighter integration between design and manufacturing, the "fabless" model is evolving into a "co-design" model. Companies that cannot master the thermal and electrical complexities of vertical stacking risk being left behind. We are seeing a shift where the value is moving from the individual chip to the "System-on-Package" (SoP). This favors integrated players and those with deep partnerships, like the alliance between Apple (NASDAQ: AAPL) and TSMC, which is rumored to be working on a 3D-stacked "M5" chip for 2026 that could bring server-grade AI capabilities to consumer devices.

The Wider Significance: Breaking the Memory Wall

The broader significance of 3D logic cannot be overstated; it is the key to solving the "Memory Wall" problem that has plagued computing for decades. In a traditional 2D architecture, the energy required to move data between the processor and memory is often orders of magnitude higher than the energy required to actually perform the computation. By stacking these components vertically, the distance data must travel is reduced from millimeters to microns. This isn't just an incremental improvement; it is a fundamental shift that enables "Agentic AI"—systems capable of long-term reasoning and multi-step tasks that require massive, high-speed access to persistent memory.
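
The millimeters-to-microns argument can be made concrete with a simple model in which the energy to move a bit grows linearly with the length of the wire it travels. The Python sketch below uses that model; the per-bit energy coefficient is a placeholder rather than a measured value, the 5 mm and 5 μm distances are illustrative, and real links also pay fixed costs for drivers and bonding pads that this model leaves out.

```python
def transfer_energy_joules(bits: float, distance_mm: float, pj_per_bit_mm: float) -> float:
    """Energy to move `bits` over `distance_mm`, assuming cost is linear in distance."""
    return bits * distance_mm * pj_per_bit_mm * 1e-12  # picojoules to joules


PJ_PER_BIT_MM = 0.1      # placeholder coefficient (assumption, not a measurement)
BITS = 8 * 1e9           # one gigabyte of data

planar = transfer_energy_joules(BITS, 5.0, PJ_PER_BIT_MM)     # 5 mm horizontal hop
stacked = transfer_energy_joules(BITS, 0.005, PJ_PER_BIT_MM)  # 5 um vertical hop
ratio = planar / stacked

print(f"Planar:  {planar:.2e} J per gigabyte")
print(f"Stacked: {stacked:.2e} J per gigabyte")
print(f"Ratio:   {ratio:.0f}x")
```

Because the coefficient cancels in the ratio, the thousandfold gap depends only on the distances, not on the placeholder value. The absolute energies printed should not be read as real hardware figures.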

However, this breakthrough brings new concerns, primarily regarding thermal management. Stacking high-performance logic layers is akin to stacking several space heaters on top of each other. In 2025, the industry has had to pioneer microfluidic cooling—circulating liquid through tiny channels etched directly into the silicon—to prevent these 3D skyscrapers from melting. There are also concerns about manufacturing yields; if one layer in a ten-layer stack is defective, the entire expensive unit may have to be discarded. This has led to a surge in AI-driven "Design for Test" (DfT) tools that can predict and mitigate failures before they occur.
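
The yield concern follows from simple probability. If a stack is only usable when every layer is good, and layer defects are treated as independent, the stack yield is the per-layer yield raised to the number of layers. The sketch below works through that arithmetic; the 95% and 90% figures are hypothetical, and the independence assumption ignores bonding defects and any pre-bond testing of individual layers.

```python
def stack_yield(layer_yield: float, layers: int) -> float:
    """Probability that all layers are good, assuming independent defects."""
    return layer_yield ** layers


def required_layer_yield(target_stack_yield: float, layers: int) -> float:
    """Per-layer yield needed to reach a target yield for the whole stack."""
    return target_stack_yield ** (1.0 / layers)


ten_layer = stack_yield(0.95, 10)        # hypothetical 95% per-layer yield
needed = required_layer_yield(0.90, 10)  # hypothetical 90% stack target

print(f"Ten layers at 95% each: {ten_layer:.1%} of stacks are good")
print(f"Per-layer yield needed for a 90% stack yield: {needed:.2%}")
```

In this model, ten layers at 95% each leave only about 60% of stacks usable, and reaching a 90% stack yield requires each layer to come in at roughly 99%. That compounding is the motivation for catching defective layers before they are bonded into a stack.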

Some observers already compare the move to 3D logic to the transition from vacuum tubes to transistors. It marks the end of the "Planar Era" and the beginning of the "Volumetric Era." Just as the skyscraper allowed cities to grow when they ran out of land, 3D logic allows computing power to grow when we run out of horizontal space on a silicon wafer. This trend is essential for the sustainability of AI, as the world cannot afford the projected energy costs of 2D-based AI scaling.

The Horizon: 1nm, Glass Substrates, and Beyond

Looking ahead, the near-term focus will be on the refinement of hybrid bonding and the commercialization of glass substrates. Unlike organic substrates, glass offers superior flatness and thermal stability, which is critical for maintaining the alignment of vertically stacked layers. By 2026, we expect to see the first high-volume AI chips using glass substrates, enabling even larger and more complex 3D packages. The long-term roadmap points toward "True Monolithic 3D," where multiple layers of logic are grown sequentially on the same wafer, potentially leading to chips with hundreds of layers.

Future applications for this technology extend far beyond data centers. 3D logic will likely enable "Edge AI" devices—such as AR glasses and autonomous drones—to perform complex real-time processing that currently requires a cloud connection. Experts predict that by 2028, the "AI-on-a-Cube" will be the standard form factor, with specialized layers for sensing, memory, logic, and even integrated photonics for light-speed communication between chips. The challenge remains the cost of manufacturing, but as yields improve, 3D architecture will trickle down from $40,000 AI GPUs to everyday consumer electronics.

A New Dimension for Intelligence

The emergence of 3D logic marks a definitive turning point in the history of technology. By breaking the 2D barrier, the semiconductor industry has found a way to continue the legacy of Moore’s Law through architectural innovation rather than just physical shrinking. The primary takeaways are clear: the "memory wall" is falling, energy efficiency is the new benchmark for performance, and the vertical stack is the new theater of competition.

As we move into 2026, the significance of this development will be felt in every sector touched by AI. From more capable autonomous agents to more efficient data centers, the "skyscraper" approach to silicon is the foundation upon which the next decade of artificial intelligence will be built. Watch for the first performance benchmarks of NVIDIA’s Rubin and Intel’s Clearwater Forest in early 2026; they will be the first true tests of whether 3D logic can live up to its immense promise.
