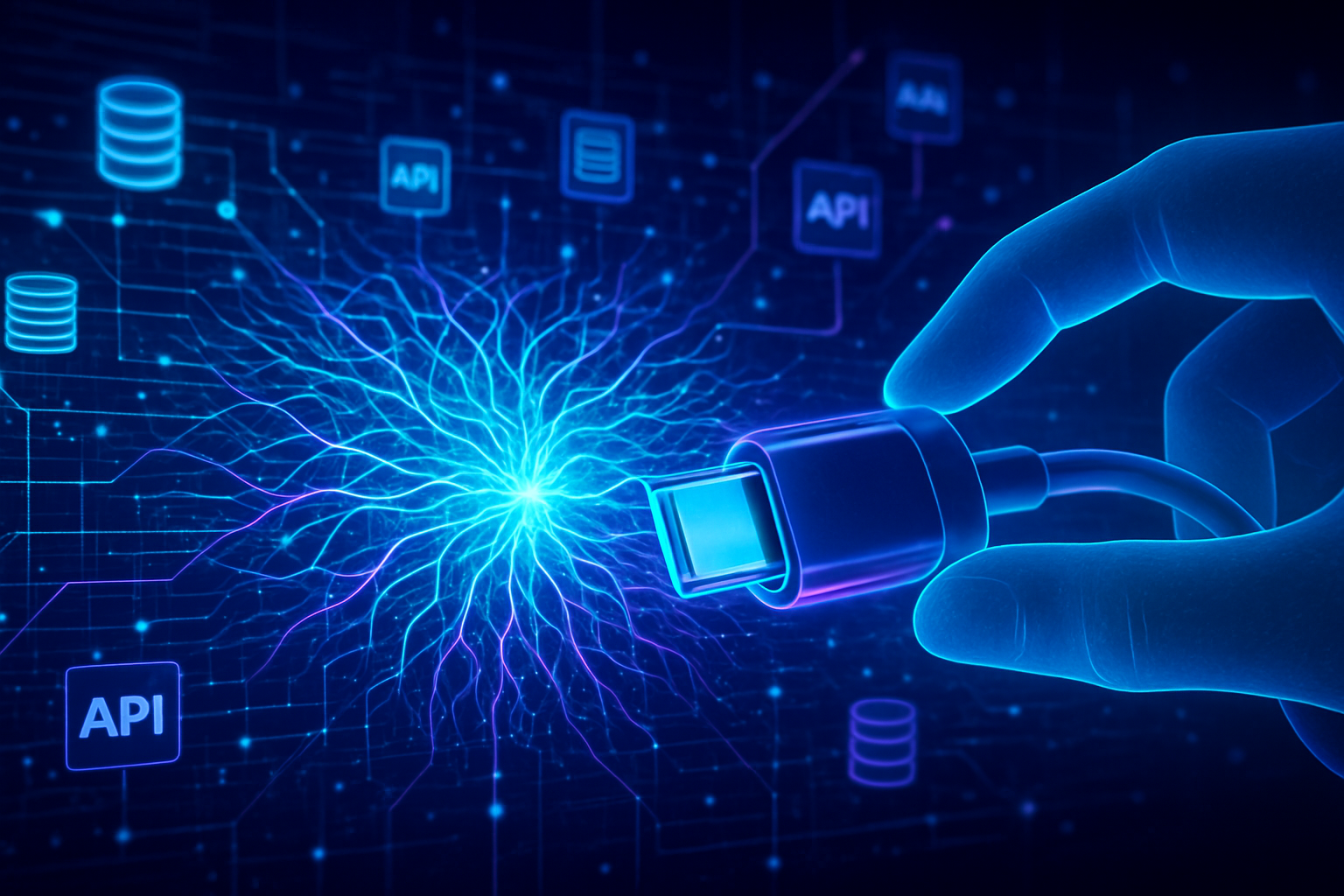

As of early 2026, the artificial intelligence landscape has shifted from a race for larger models to a race for more integrated, capable agents. At the center of this transformation is Anthropic’s Model Context Protocol (MCP), an open standard that has earned the moniker "USB-C for AI." By defining a universal interface through which AI models interact with data and tools, MCP has weakened the walled gardens that previously hindered agentic workflows. The recent launch of "Enterprise Agent Skills" has further accelerated this trend, providing a standardized framework for agents to execute complex, multi-step tasks across disparate corporate databases and APIs.

The practical significance is easiest to see in integration work. Before the widespread adoption of MCP, connecting an AI agent to a company’s proprietary data, such as a SQL database or a Slack workspace, required custom, brittle code for every unique integration, so N models and M data sources could mean up to N × M connectors. Today, MCP acts as the foundational "plumbing" of the AI ecosystem, allowing any model to "plug in" to any data source that supports the standard. This shift from siloed AI to an interoperable agentic framework marks the beginning of the "Digital Coworker" era, where AI agents operate with the same level of access and procedural discipline as human employees.

The Model Context Protocol (MCP) uses a client-server architecture designed to solve the "fragmentation problem." An MCP server acts as a translator between an AI model and a specific data source or tool: the client inside the AI application sends JSON-RPC 2.0 requests, and the server describes and runs the tools it fronts in a uniform format. While the initial 2024 launch focused on basic connectivity, the 2025 introduction of Enterprise Agent Skills added a layer of "procedural intelligence." These Skills are filesystem-based modules containing structured metadata, validation scripts, and reference materials. Unlike simple prompts, Skills allow agents to understand how to use a tool, not just that the tool exists. This structure is intended to keep agents within strict corporate protocols when performing tasks like financial auditing or software deployment.
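
To make the client-server exchange concrete, here is a minimal sketch of a toy server that answers two JSON-RPC 2.0 requests: one listing its tools and one calling a tool. It is not the official MCP SDK. The `tools/list` and `tools/call` method names and the response shape follow our reading of the protocol, while `count_rows`, `TOOLS`, and `handle_request` are hypothetical names invented for illustration, and transport, initialization, and input schemas are left out.

```python
import json
from typing import Any, Callable

# Hypothetical tool registry: name -> (description, handler).
# A real server would also publish a JSON Schema for each tool's input.
TOOLS: dict[str, tuple[str, Callable[..., Any]]] = {
    "count_rows": (
        "Count rows in a named table (toy in-memory data).",
        lambda table: {"orders": 3, "users": 2}.get(table, 0),
    ),
}


def _error(req_id: Any, code: int, message: str) -> str:
    """Build a JSON-RPC 2.0 error response."""
    return json.dumps(
        {"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}}
    )


def handle_request(raw: str) -> str:
    """Dispatch one JSON-RPC 2.0 request and return the JSON response."""
    req = json.loads(raw)
    req_id, method = req.get("id"), req.get("method")
    params = req.get("params", {})

    if method == "tools/list":
        # Discovery: the client learns which tools exist and what they do.
        result = {
            "tools": [{"name": n, "description": d} for n, (d, _) in TOOLS.items()]
        }
    elif method == "tools/call":
        name = params.get("name")
        if name not in TOOLS:
            return _error(req_id, -32602, f"Unknown tool: {name}")
        _, handler = TOOLS[name]
        value = handler(**params.get("arguments", {}))
        # Results are returned as a list of typed content items.
        result = {"content": [{"type": "text", "text": str(value)}]}
    else:
        return _error(req_id, -32601, "Method not found")

    return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})
```

A client would first send `{"jsonrpc": "2.0", "id": 1, "method": "tools/list"}` to discover the tool, then a `tools/call` request naming `count_rows` with `{"table": "orders"}` as arguments. Because every server answers the same two questions in the same shape, the model side needs only one client implementation.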

One of the most important technical advances in this ecosystem is "progressive disclosure." To limit the "Lost in the Middle" phenomenon, in which LLMs recall information buried in the middle of a long context less reliably than information near its start or end, Enterprise Agent Skills use a tiered loading system. The agent initially sees only a lightweight metadata description of each skill. It loads the full technical documentation or specific reference files only when they become relevant to the current step of a task. This reduces token consumption and improves the precision of the agent's actions, allowing it to work against terabytes of data without overflowing its context window.
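
The tiered loading described above can be sketched in a few lines. This is an illustrative sketch under stated assumptions, not a reference implementation: it assumes each skill is a directory holding a `SKILL.md` file whose front matter sits between two `---` lines, and the function names, the `tax-compliance` skill, and the `rules.md` file are hypothetical.

```python
import tempfile
from pathlib import Path


def read_metadata(skill_dir: Path) -> dict[str, str]:
    """Tier 1: read only the front-matter block at the top of SKILL.md."""
    meta: dict[str, str] = {}
    with (skill_dir / "SKILL.md").open(encoding="utf-8") as fh:
        if fh.readline().strip() != "---":
            return meta  # no front matter present
        for line in fh:
            if line.strip() == "---":
                break  # stop before the body; it is not read at this tier
            key, _, value = line.partition(":")
            meta[key.strip()] = value.strip()
    return meta


def load_body(skill_dir: Path) -> str:
    """Tier 2: read the full instructions only once the skill is selected."""
    text = (skill_dir / "SKILL.md").read_text(encoding="utf-8")
    # Splits on the two front-matter fences; assumes the header has no "---".
    return text.split("---", 2)[2].strip()


def load_reference(skill_dir: Path, name: str) -> str:
    """Tier 3: read a single reference file only when a step needs it."""
    return (skill_dir / name).read_text(encoding="utf-8")


def make_demo_skill(root: Path) -> Path:
    """Create a toy skill on disk so the sketch runs end to end."""
    skill = root / "tax-compliance"
    skill.mkdir()
    (skill / "SKILL.md").write_text(
        "---\n"
        "name: tax-compliance\n"
        "description: Check filings against rules\n"
        "---\n"
        "Step 1: load rules.md. Step 2: validate each filing.\n",
        encoding="utf-8",
    )
    (skill / "rules.md").write_text("Rule A: totals must reconcile.\n", encoding="utf-8")
    return skill
```

In use, an agent would call `read_metadata` for every installed skill at startup, which costs a handful of tokens per skill, and would call `load_body` and `load_reference` only for the one skill the current task actually matches.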

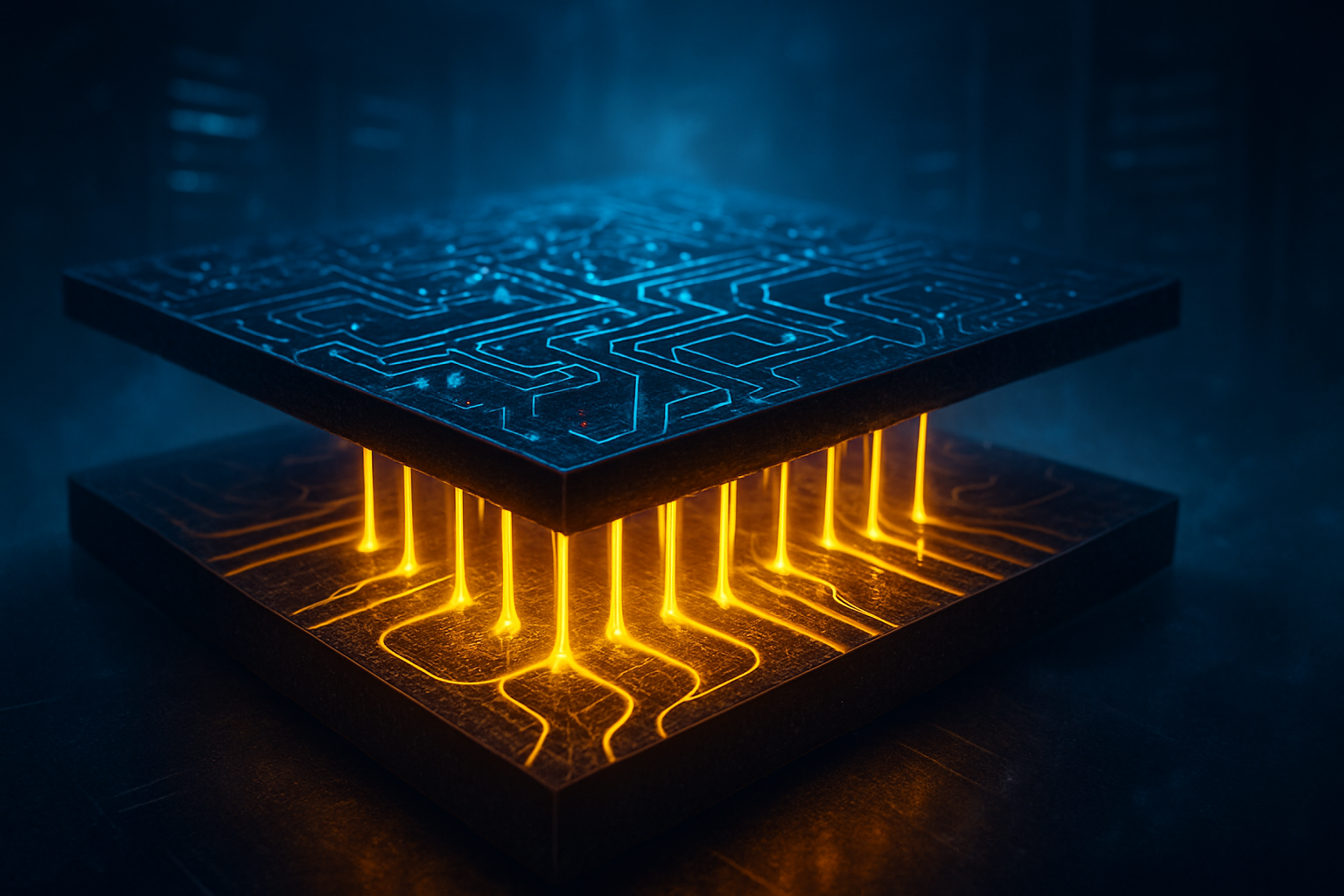

Furthermore, the protocol now emphasizes secure execution through virtual machine (VM) sandboxing. When an agent utilizes a Skill to process sensitive data, the code can be executed locally within a secure environment. Only the distilled, relevant results are passed back to the large language model (LLM), ensuring that proprietary raw data never leaves the enterprise's secure perimeter. This architecture differs fundamentally from previous "prompt-stuffing" approaches, offering a scalable, secure, and cost-effective way to deploy agents at the enterprise level. Initial reactions from the research community have been overwhelmingly positive, with many experts noting that MCP has effectively become the "HTTP of the agentic web."
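
The idea that only distilled results reach the model can be illustrated with a small sketch. It shows the result-filtering boundary only and does not implement VM isolation; the payroll records, `ALLOWED_KEYS`, and all function names are hypothetical and stand in for whatever a real Skill would run inside its sandbox.

```python
from statistics import mean

# Hypothetical raw records that must stay inside the enterprise perimeter.
RAW_PAYROLL = [
    {"employee": "A. Rivera", "salary": 82000},
    {"employee": "B. Chen", "salary": 95000},
    {"employee": "C. Okafor", "salary": 71000},
]

# Only these aggregate fields are allowed to cross the boundary to the LLM.
ALLOWED_KEYS = {"row_count", "mean_salary", "max_salary"}


def run_skill_locally(rows: list[dict]) -> dict:
    """Stand-in for code executed inside the sandbox: it sees the raw rows."""
    salaries = [r["salary"] for r in rows]
    return {
        "row_count": len(rows),
        "mean_salary": round(mean(salaries), 2),
        "max_salary": max(salaries),
        "debug_rows": rows,  # raw data that must NOT reach the model
    }


def distill(result: dict) -> dict:
    """Boundary filter: keep only allow-listed aggregate fields."""
    return {k: v for k, v in result.items() if k in ALLOWED_KEYS}


def build_model_context(rows: list[dict]) -> str:
    """Return the only text that would be placed in the model's context."""
    summary = distill(run_skill_locally(rows))
    return ", ".join(f"{k}={v}" for k, v in sorted(summary.items()))
```

An allow-list is used rather than a block-list so that any new field added to the sandboxed code stays private by default until someone explicitly approves it.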

The strategic implications of MCP have triggered a massive realignment among tech giants. While Anthropic pioneered the protocol, its decision to donate MCP to the Agentic AI Foundation (AAIF) under the Linux Foundation in late 2025 was a masterstroke that secured its future. Microsoft (NASDAQ: MSFT) was among the first to fully integrate MCP into Windows 11 and Azure AI Foundry, signaling that the standard would be the backbone of its "Copilot" ecosystem. Similarly, Alphabet (NASDAQ: GOOGL) has adopted MCP for its Gemini models, offering managed MCP servers that allow enterprise customers to bridge their Google Cloud data with any compliant AI agent.

The adoption extends across the rest of the industry. Amazon (NASDAQ: AMZN) has optimized its custom Trainium chips to handle the high-concurrency workloads typical of MCP-heavy agentic swarms, while integrating the protocol directly into Amazon Bedrock. This move positions AWS as the preferred infrastructure for companies running massive fleets of interoperable agents. Meanwhile, companies like Block (NYSE: XYZ) have contributed significant open-source frameworks, such as the Goose agent, which utilizes MCP as its primary connectivity layer. This unified front has created a powerful network effect: as more SaaS providers like Atlassian (NASDAQ: TEAM) and Salesforce (NYSE: CRM) launch official MCP servers, the value of being an MCP-compliant model grows with every server added.

For startups, the "USB-C for AI" standard has lowered the barrier to entry for building specialized agents. Instead of spending months building integrations for every popular enterprise app, a startup can build one MCP-compliant agent that instantly gains access to the entire ecosystem of MCP-enabled tools. This has led to a surge in "Agentic Service Providers" that focus on fine-tuning specific skills—such as legal discovery or medical coding—rather than building the underlying connectivity. The competitive advantage has shifted from who has the data to who has the most efficient skills for processing that data.

The rise of MCP and Enterprise Agent Skills fits into a broader trend of "Agentic Orchestration," where the focus is no longer on the chatbot but on the autonomous workflow. By early 2026, one result of this shift is already visible: relief from the "Token Crisis." Previously, the cost of feeding massive amounts of data into an LLM was a major bottleneck for enterprise adoption. By using MCP to fetch only the necessary data points on demand, companies have reduced their AI operational costs by as much as 70%, making large-scale agent deployment economically viable for the first time.

However, this level of autonomy brings significant concerns regarding governance and security. The "USB-C for AI" analogy also highlights a potential vulnerability: if an agent can plug into anything, the risk of unauthorized data access or accidental system damage increases. To mitigate this, the 2026 MCP specification includes a mandatory "Human-in-the-Loop" (HITL) protocol for high-risk actions. This allows administrators to set "governance guardrails" where an agent must pause and request human authorization before executing an API call that involves financial transfers or permanent data deletion.
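
A governance guardrail of this kind can be sketched as a thin wrapper around tool execution. This is a minimal illustration, not the specification's own mechanism: `HIGH_RISK_TOOLS`, `ToolCall`, and `execute_with_guardrail` are hypothetical names, and the approval step is passed in as a callback so it could be a console prompt, a ticket, or a chat message.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical policy: tool names an administrator has marked as high risk.
HIGH_RISK_TOOLS = {"transfer_funds", "delete_records"}


@dataclass
class ToolCall:
    tool: str
    arguments: dict


def execute_with_guardrail(
    call: ToolCall,
    run_tool: Callable[[ToolCall], str],
    ask_human: Callable[[ToolCall], bool],
) -> str:
    """Run low-risk calls directly; pause high-risk calls for approval."""
    if call.tool in HIGH_RISK_TOOLS and not ask_human(call):
        # The tool is never invoked when approval is refused.
        return f"BLOCKED: {call.tool} was not approved"
    return run_tool(call)
```

For example, `execute_with_guardrail(ToolCall("transfer_funds", {"amount": 10}), run_tool, ask_human)` invokes `ask_human` first and reaches `run_tool` only if it returns `True`, while a call to a tool outside `HIGH_RISK_TOOLS` skips the approval step entirely.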

Comparatively, the launch of MCP is being viewed as a milestone similar to the introduction of TCP/IP for the internet. Just as TCP/IP allowed disparate computer networks to communicate, MCP is allowing disparate "intelligence silos" to collaborate. This standardization is the final piece of the puzzle for the "Agentic Web," a future where AI agents from different companies can negotiate, share data, and complete complex transactions on behalf of their human users without manual intervention.

Looking ahead, the next frontier for MCP and Enterprise Agent Skills lies in "Cross-Agent Collaboration." We expect to see the emergence of "Agent Marketplaces" where companies can purchase or lease highly specialized skills developed by third parties. For instance, a small accounting firm might "rent" a highly sophisticated Tax Compliance Skill developed by a top-tier global consultancy, plugging it directly into their MCP-compliant agent. This modularity will likely lead to a new economy centered around "Skill Engineering."

In the near term, we anticipate a deeper integration between MCP and edge computing. As agents become more prevalent on mobile devices and IoT hardware, the need for lightweight MCP servers that can run locally will grow. Challenges remain, particularly in the realm of "Semantic Collisions"—where two different skills might use the same command to mean different things. Standardizing the vocabulary of these skills will be a primary focus for the Agentic AI Foundation throughout 2026. Experts predict that by 2027, the majority of enterprise software will be "Agent-First," with traditional user interfaces taking a backseat to MCP-driven autonomous interactions.
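
One simple way to reason about "Semantic Collisions" is to detect command names claimed by more than one skill and to qualify each name with its owner. The sketch below is our own illustration of that idea, not a mechanism proposed by the Agentic AI Foundation; the skill and command names are hypothetical.

```python
from collections import defaultdict


def find_collisions(skills: dict[str, list[str]]) -> dict[str, list[str]]:
    """Return each command name that is claimed by more than one skill."""
    owners: dict[str, list[str]] = defaultdict(list)
    for skill, commands in skills.items():
        # dict.fromkeys removes duplicates within one skill, keeping order.
        for command in dict.fromkeys(commands):
            owners[command].append(skill)
    return {cmd: sorted(names) for cmd, names in owners.items() if len(names) > 1}


def qualify(skills: dict[str, list[str]]) -> list[str]:
    """Prefix every command with its skill name so each name is unambiguous."""
    return [
        f"{skill}.{command}"
        for skill, commands in sorted(skills.items())
        for command in commands
    ]
```

Given `{"billing": ["close", "refund"], "ticketing": ["close", "assign"]}`, `find_collisions` reports that `close` is claimed by both skills, and `qualify` yields `billing.close` and `ticketing.close` as distinct names. Namespacing removes the ambiguity in naming only; agreeing on what a shared verb should mean is the harder standardization problem the paragraph describes.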

The evolution of Anthropic’s Model Context Protocol into a global open standard marks a definitive turning point in the history of artificial intelligence. By providing the "USB-C" for the AI era, MCP has solved the interoperability crisis that once threatened to stall the progress of agentic technology. The addition of Enterprise Agent Skills has provided the necessary procedural framework to move AI from a novelty to a core component of enterprise infrastructure.

The key takeaway for 2026 is that the era of "Siloed AI" is over. The winners in this new landscape will be the companies that embrace openness and contribute to the growing ecosystem of MCP-compliant tools and skills. As we watch the developments in the coming months, the focus will be on how quickly traditional industries—such as manufacturing and finance—can transition their legacy systems to support this new standard.

Ultimately, MCP is more than just a technical protocol; it is a blueprint for how humans and AI will interact in a hyper-connected world. By standardizing the way agents access data and perform tasks, Anthropic and its partners in the Agentic AI Foundation have laid the groundwork for a future where AI is not just a tool we use, but a seamless extension of our professional and personal capabilities.

This content is intended for informational purposes only and represents analysis of current AI developments.
