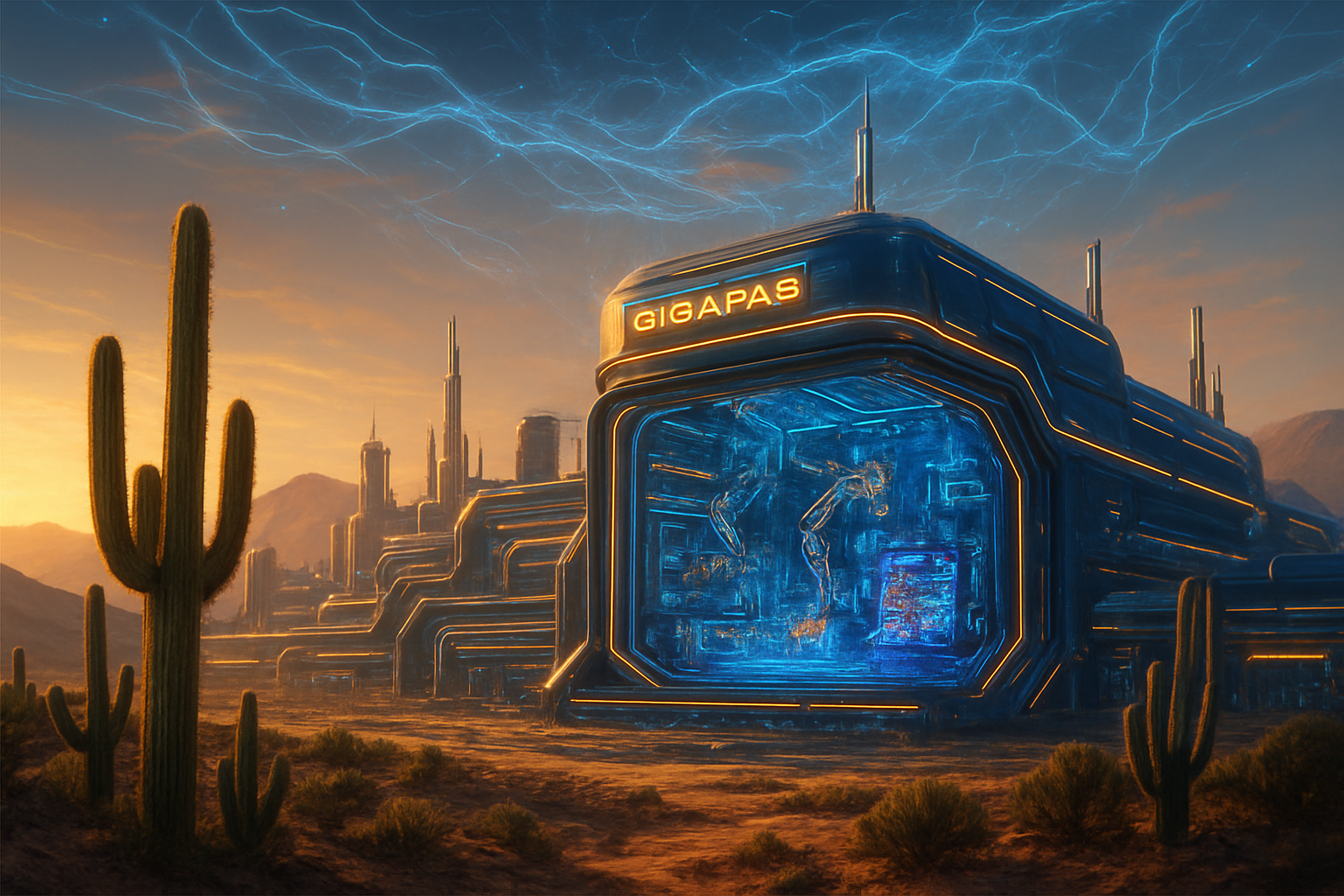

The global semiconductor landscape is witnessing a seismic shift as 2026 marks the definitive "Wide-Bandgap (WBG) Era." Driven by the insatiable power demands of AI data centers and the wholesale transition of the automotive industry toward high-voltage architectures, the market for Silicon Carbide (SiC) and Gallium Nitride (GaN) discrete devices is projected to exceed $5.3 billion this year. This milestone represents more than just a fiscal achievement; it signals the end of silicon’s decades-long dominance in high-power applications, where the sheer scale of modern computing has pushed the material to its thermal and electrical limits.

As of late January 2026, the industry is tracking a massive capacity build-out, with major manufacturers racing to bring new fabrication plants online. This surge is largely fueled by the realization that current AI hardware, whatever its computational capability, is physically constrained by heat. By replacing traditional silicon with WBG materials, engineers are finding they can manage the thermal output of next-generation GPU clusters and EV inverters more efficiently, improving the performance-per-watt metrics that now dictate market leadership.

Technical Superiority and the Rise of the 8-Inch Wafer

The technical transition at the heart of this growth centers on the physical properties of SiC and GaN compared to traditional Silicon (Si). Silicon Carbide boasts a thermal conductivity nearly 3.3 times higher than silicon, allowing it to dissipate heat far more effectively and operate at temperatures exceeding 200°C. Meanwhile, GaN’s superior electron mobility allows for switching frequencies in the megahertz range—significantly higher than silicon—which enables the use of much smaller passive components like inductors and capacitors. These properties are no longer just "nice-to-have" advantages; they are essential for the 800V Direct Current (DC) architectures now becoming the standard in both high-end electric vehicles and AI server racks.
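To make the link between switching frequency and passive size concrete, here is a minimal sketch using the textbook inductance formula for an ideal buck converter in continuous conduction. The topology, the 48 V to 12 V conversion, the 3 A ripple target, and both switching frequencies are illustrative assumptions, not figures from the article.

```python
def buck_inductance_h(v_in: float, v_out: float, f_sw_hz: float, ripple_a: float) -> float:
    """Inductance (henries) an ideal buck converter needs to hold the
    peak-to-peak inductor ripple current at `ripple_a` amperes."""
    duty = v_out / v_in  # ideal duty cycle in continuous conduction
    return v_out * (1.0 - duty) / (f_sw_hz * ripple_a)

# Hypothetical operating point, chosen only for illustration.
V_IN, V_OUT, RIPPLE_A = 48.0, 12.0, 3.0

for label, f_sw_hz in [("100 kHz (assumed silicon-class)", 100e3),
                       ("1 MHz (assumed GaN-class)", 1e6)]:
    inductance_uh = buck_inductance_h(V_IN, V_OUT, f_sw_hz, RIPPLE_A) * 1e6
    print(f"{label}: {inductance_uh:.1f} uH")
```

In this idealized model the required inductance is inversely proportional to switching frequency, so a tenfold frequency increase allows roughly a tenfold smaller inductor for the same ripple. Real designs also have to account for core losses, switching losses, and EMI, which this sketch ignores.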

A cornerstone of the 2026 market expansion is the massive investment by ROHM Semiconductor ([TYO: 6963]). The company’s new Miyazaki Plant No. 2, a sprawling 230,000 m² facility, has officially entered its high-volume phase this year. This plant is a critical hub for the production of 8-inch (200mm) SiC substrates. Moving from 6-inch to 8-inch wafers is a technical hurdle that has historically plagued the industry, but the successful scaling at the Miyazaki and Chikugo plants has increased chip output per wafer by nearly 1.8x. This efficiency gain has been instrumental in driving down the cost of SiC devices, making them competitive with silicon-based Insulated Gate Bipolar Transistors (IGBTs) for the first time in mid-market applications.
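The "nearly 1.8x" figure is consistent with simple geometry. As a rough check, the sketch below compares the surface area of a 200 mm wafer with that of a 150 mm wafer. It assumes die count scales with wafer area and ignores edge exclusion, die size, and yield, all of which affect real output.

```python
import math

def wafer_area_mm2(diameter_mm: float) -> float:
    """Surface area of a circular wafer in square millimetres."""
    return math.pi * (diameter_mm / 2.0) ** 2

def area_ratio(new_diameter_mm: float, old_diameter_mm: float) -> float:
    """How many times more surface area the new wafer offers than the old one."""
    return wafer_area_mm2(new_diameter_mm) / wafer_area_mm2(old_diameter_mm)

ratio = area_ratio(200.0, 150.0)  # 8-inch versus 6-inch
print(f"200 mm vs 150 mm wafer area: {ratio:.2f}x")  # prints 1.78x
```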

Initial reactions from the semiconductor research community have highlighted how these advancements address the "thermal bottleneck" of modern AI. Recent tests of SiC-based power stages in server PSUs (Power Supply Units) have demonstrated peak efficiencies of 98%, a leap from the 94% ceiling typical of silicon. That gain of 4 percentage points means conversion losses fall from roughly 6% to 2% of input power, a cut of about two-thirds. In the world of hyperscale data centers, that difference translates into millions of dollars in saved electricity and cooling costs. Furthermore, NVIDIA ([NASDAQ: NVDA]) has reportedly begun exploring SiC interposers for its newest Blackwell-successor chips, aiming to reduce GPU operating temperatures by up to 20°C, which significantly extends the lifespan of the hardware under 24/7 AI training loads.
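The effect of the efficiency gap on wasted power can be sketched with basic arithmetic. The 1 MW delivered load below is a hypothetical figure chosen for illustration, and the calculation treats the quoted peak efficiencies as if they held at all times, which real power supplies do not.

```python
def psu_loss_kw(output_kw: float, efficiency: float) -> float:
    """Power dissipated as heat inside the PSU for a given delivered output."""
    input_kw = output_kw / efficiency
    return input_kw - output_kw

IT_LOAD_KW = 1000.0    # hypothetical delivered load, not from the article
HOURS_PER_YEAR = 8760  # continuous 24/7 operation

si_loss_kw = psu_loss_kw(IT_LOAD_KW, 0.94)   # silicon ceiling cited above
sic_loss_kw = psu_loss_kw(IT_LOAD_KW, 0.98)  # SiC peak cited above
saved_kw = si_loss_kw - sic_loss_kw

print(f"Silicon PSU loss: {si_loss_kw:.1f} kW")
print(f"SiC PSU loss:     {sic_loss_kw:.1f} kW")
print(f"Energy saved per year: {saved_kw * HOURS_PER_YEAR / 1000:.0f} MWh")
```

Under these assumptions the PSU heat load drops by roughly two-thirds, and that heat no longer has to be removed by the cooling system either, which is where the compounding savings come from.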

Corporate Maneuvering and Market Positioning

The surge in WBG demand has created a clear divide between companies that secured their supply chains early and those now scrambling for capacity. STMicroelectronics ([NYSE: STM]) and Infineon Technologies ([ETR: IFX]) continue to hold dominant positions, but the aggressive expansion of ROHM and Wolfspeed ([NYSE: WOLF]) has intensified the competitive landscape. These companies are no longer just component suppliers; they are strategic partners for the world’s largest tech and automotive giants. For instance, BYD ([HKG: 1211]) and Hyundai Motor Company ([KRX: 005380]) have integrated SiC into their 2026 vehicle lineups to achieve a 5-10% range increase without increasing battery size, a move that provides a massive competitive edge in the price-sensitive EV market.

In the data center space, the impact is equally transformative. Major cloud providers are shifting toward 800V high-voltage direct current architectures to power their AI clusters. This has benefited companies like Lucid Motors ([NASDAQ: LCID]), which has leveraged its expertise in high-voltage power electronics to consult on industrial power management. The strategic advantage now lies in "vertical integration"—those who control the substrate production (the raw SiC or GaN material) are less vulnerable to the price volatility and shortages that defined the early 2020s.
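One reason higher bus voltages are attractive is that, for the same delivered power, current falls in proportion to voltage and resistive losses fall with the square of the current. The following is a minimal sketch of that relationship. The 100 kW load, the 10 milliohm path resistance, and the 400 V comparison point are hypothetical values chosen for illustration.

```python
def conduction_loss_w(power_w: float, bus_voltage_v: float, resistance_ohm: float) -> float:
    """Resistive (I^2 * R) loss in the distribution path for a given load and bus voltage."""
    current_a = power_w / bus_voltage_v
    return current_a ** 2 * resistance_ohm

POWER_W = 100_000.0         # hypothetical load
PATH_RESISTANCE_OHM = 0.01  # hypothetical cable and busbar resistance

for bus_voltage_v in (400.0, 800.0):
    current_a = POWER_W / bus_voltage_v
    loss_w = conduction_loss_w(POWER_W, bus_voltage_v, PATH_RESISTANCE_OHM)
    print(f"{bus_voltage_v:.0f} V bus: {current_a:.0f} A, {loss_w:.2f} W lost in the path")
```

Doubling the bus voltage halves the current and cuts the resistive loss to a quarter for the same conductors, or alternatively allows thinner conductors for the same loss.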

Wider Significance: Energy, AI, and Global Sustainability

The transition to WBG semiconductors represents a critical pivot in the global AI landscape. As concerns grow regarding the environmental impact of AI—specifically the massive energy consumption of large language model (LLM) training—SiC and GaN offer a tangible path toward "Greener AI." By reducing switching losses and improving thermal management, these materials are estimated to reduce the carbon footprint of a 10MW data center by nearly 15% annually. This aligns with broader ESG goals while simultaneously allowing companies to pack more compute power into the same physical footprint.

However, the rapid growth also brings potential concerns, particularly regarding the complexity of the manufacturing process. SiC crystals are notoriously difficult to grow, requiring temperatures near 2,500°C and specialized furnaces. Any disruption in the supply of high-purity graphite or specialized silicon carbide powder could create a bottleneck that slows the deployment of AI infrastructure. Comparisons are already being made to the 2021 chip shortage, with analysts warning that the "Power Gap" might become the next "Memory Gap" in the tech industry’s race toward artificial general intelligence.

The Horizon: 12-Inch Wafers and Ultra-Fast Charging

Looking ahead, the industry is already eyeing the next frontier: 12-inch (300mm) SiC production. While 8-inch wafers are the current state-of-the-art in 2026, R&D labs at ROHM and Wolfspeed are reportedly making progress on larger formats that could further slash costs by 2028. We are also seeing the rise of "GaN-on-SiC" and "GaN-on-GaN" technologies, which aim to combine the high-frequency benefits of Gallium Nitride with the superior thermal dissipation of Silicon Carbide for ultra-dense AI power modules.

On the consumer side, the proliferation of these materials will soon manifest in 350kW+ ultra-fast charging stations, capable of charging an EV to 80% in under 10 minutes without overheating. Experts predict that by 2027, the use of WBG semiconductors will be so pervasive that traditional silicon power devices will be relegated to low-power, "legacy" electronics. The primary challenge remains the development of standardized testing protocols for these materials, as their long-term reliability in the extreme environments of an AI server or a vehicle drivetrain is still being documented in real time.
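A quick calculation shows what the 10-minute claim implies. The sketch below assumes the charger sustains its full rated power for the whole session with no conversion losses, which is an upper bound since real charging curves taper. The 10% starting state of charge is also an assumption.

```python
def energy_delivered_kwh(power_kw: float, minutes: float) -> float:
    """Energy delivered at constant power over the given duration."""
    return power_kw * minutes / 60.0

def max_pack_kwh(power_kw: float, minutes: float, soc_start: float, soc_end: float) -> float:
    """Largest pack that could go from soc_start to soc_end in the given time."""
    return energy_delivered_kwh(power_kw, minutes) / (soc_end - soc_start)

energy_kwh = energy_delivered_kwh(350.0, 10.0)
pack_kwh = max_pack_kwh(350.0, 10.0, soc_start=0.10, soc_end=0.80)  # assumed 10% start

print(f"Energy in 10 min at 350 kW: {energy_kwh:.1f} kWh")
print(f"Largest pack charged 10% -> 80%: {pack_kwh:.1f} kWh")
```

Under these idealized assumptions, a 350 kW charger delivers a little under 60 kWh in 10 minutes, so larger packs would need more than 350 kW to meet the same target.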

Conclusion: A Fundamental Shift in Power

The 2026 milestone of a market projected to exceed $5.3 billion for SiC and GaN discrete devices marks a fundamental shift in how we build the world’s most advanced machines. From the silicon-carbide-based inverters in our cars to the gallium-nitride-based power supplies feeding the servers that process our queries, WBG materials have moved from a niche laboratory curiosity to the backbone of the global digital and physical infrastructure.

As we move through the remainder of 2026, the key developments to watch will be the output yield of ROHM’s Miyazaki plant and the potential for a "Power-Efficiency War" between AI labs. In a world where intelligence is limited by the power you can provide and the heat you can remove, the masters of wide-bandgap semiconductors may very well hold the keys to the future of AI development.

