In a pivotal shift for the semiconductor industry, Samsung Electronics (KRX: 005930) is set to commence mass production of its next-generation High Bandwidth Memory 4 (HBM4) in February 2026. This milestone marks a significant turnaround for the South Korean tech giant, which has spent much of the last two years trailing its rivals in the lucrative AI memory sector. With this move, Samsung is positioning itself as the primary hardware backbone for the next wave of generative AI, having reportedly secured final qualification for NVIDIA’s (NASDAQ: NVDA) upcoming "Rubin" GPU architecture.

The start of mass production is more than just a logistical achievement; it represents a technological "leapfrog" that could redefine the competitive landscape of AI hardware. By integrating its most advanced memory cells with cutting-edge logic die manufacturing, Samsung is offering a "one-stop shop" solution that promises to break the "memory wall"—the performance bottleneck that has long limited the speed and efficiency of Large Language Models (LLMs). As the industry prepares for the formal debut of the NVIDIA Rubin platform, Samsung’s HBM4 is poised to become the new gold standard for high-performance computing.

Technical Superiority: 1c DRAM and the 4nm Logic Die

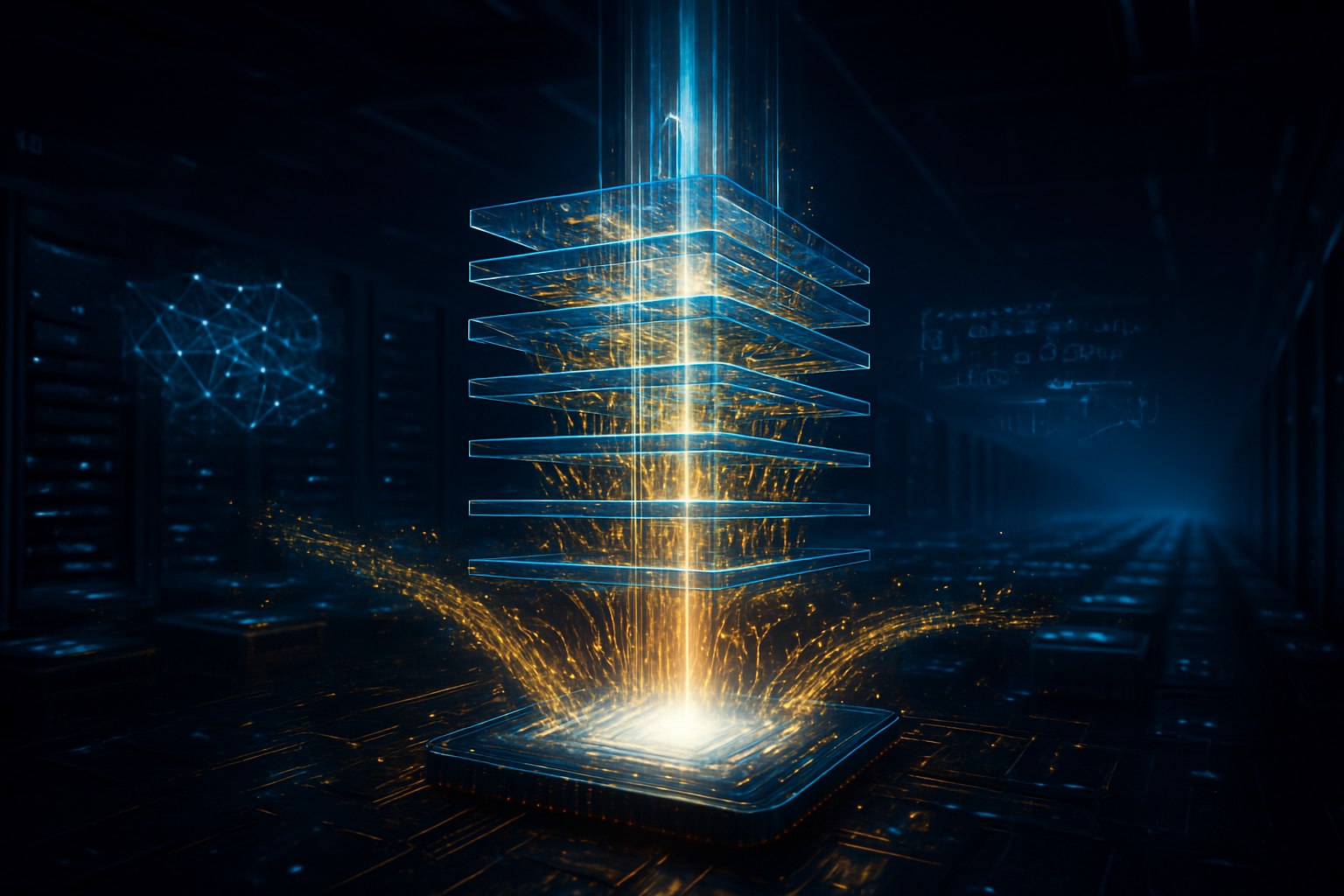

The technical specifications of Samsung's HBM4 are a testament to the company’s aggressive R&D strategy over the past 24 months. At the heart of the new stack is Samsung’s 6th-generation 10nm-class (1c) DRAM. While competitors like SK Hynix (KRX: 000660) and Micron Technology (NASDAQ: MU) are largely relying on 5th-generation (1b) DRAM for their initial HBM4 production runs, Samsung has successfully skipped a generation in its production scaling. This 1c process allows for significantly higher bit density and a 20% improvement in power efficiency compared to previous iterations, a crucial factor for data centers struggling with the immense energy demands of AI clusters.

Furthermore, Samsung is leveraging its unique position as both a memory manufacturer and a world-class foundry. Unlike its competitors, which often rely on third-party foundries like Taiwan Semiconductor Manufacturing Company (NYSE: TSM) for logic dies, Samsung is using its own 4nm foundry process to create the HBM4 logic die, the "brain" at the base of the memory stack that manages data flow. This vertical integration allows for tighter architectural optimization and reduced thermal resistance. The result is an industry-leading data transfer speed of 11.7 Gbps per pin. Across the 2,048-bit interface specified by the JEDEC HBM4 standard, that works out to roughly 3 TB/s of bandwidth per stack.
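The per-stack figure follows from the per-pin rate and the width of the stack's data interface. As a rough sketch, the Python below evaluates that product for two widths: 1,024 bits, as used by HBM3-class stacks, and 2,048 bits, the width in the JEDEC HBM4 standard. The article does not state the width, so both values are assumptions of this example, as is the helper name `stack_bandwidth_tb_per_s`.

```python
def stack_bandwidth_tb_per_s(pin_rate_gbps: float, interface_bits: int) -> float:
    """Peak per-stack bandwidth in terabytes per second (decimal units)."""
    gigabits_per_s = pin_rate_gbps * interface_bits  # all pins transferring at once
    gigabytes_per_s = gigabits_per_s / 8             # 8 bits per byte
    return gigabytes_per_s / 1000                    # GB/s -> TB/s


PIN_RATE_GBPS = 11.7  # per-pin rate quoted for Samsung's HBM4

# Interface widths are assumptions for illustration, not figures from the article.
for width_bits in (1024, 2048):
    bandwidth = stack_bandwidth_tb_per_s(PIN_RATE_GBPS, width_bits)
    print(f"{width_bits}-bit interface: {bandwidth:.2f} TB/s per stack")
```

At 11.7 Gbps per pin this gives about 1.5 TB/s on a 1,024-bit interface and about 3.0 TB/s on a 2,048-bit one, so the interface width matters as much as the pin speed.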

Industry experts note that this shift to a 4nm logic die is a departure from the 12nm and 7nm processes used in previous generations. By using 4nm technology, Samsung can embed more complex logic directly into the memory stack, enabling preliminary data processing to occur within the memory itself rather than on the GPU. This "near-memory computing" approach is expected to significantly reduce the latency involved in training massive models with trillions of parameters.

Reshaping the AI Competitive Landscape

Samsung’s aggressive entry into the HBM4 market is a direct challenge to the dominance of SK Hynix, which has held the majority share of the HBM market since the rise of ChatGPT. For NVIDIA, the qualification of Samsung’s HBM4 provides a much-needed diversification of its supply chain. The Rubin platform, expected to be officially unveiled at NVIDIA's GTC conference in March 2026, will reportedly feature eight HBM4 stacks, providing a staggering 288 GB of VRAM and an aggregate bandwidth exceeding 22 TB/s. By securing Samsung as a primary supplier, NVIDIA can mitigate the supply shortages that plagued the H100 and B200 generations.
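The platform-level numbers can be broken down per stack by simple division. The sketch below treats the "exceeding 22 TB/s" figure as exactly 22 TB/s (a lower bound) and assumes a 2,048-bit interface per stack, which is the JEDEC HBM4 width and is not stated in the article. From those inputs it derives the per-stack capacity, the per-stack bandwidth, and the per-pin rate that the aggregate figure would imply.

```python
STACKS = 8                    # HBM4 stacks reported per Rubin GPU
TOTAL_CAPACITY_GB = 288       # reported total memory
TOTAL_BANDWIDTH_TB_S = 22.0   # reported as "exceeding" this, so a lower bound
INTERFACE_BITS = 2048         # assumption: JEDEC HBM4 interface width

capacity_per_stack_gb = TOTAL_CAPACITY_GB / STACKS
bandwidth_per_stack_tb_s = TOTAL_BANDWIDTH_TB_S / STACKS

# Convert TB/s to Gb/s (x1000 for GB, x8 for bits), then spread across the pins.
implied_pin_rate_gbps = bandwidth_per_stack_tb_s * 1000 * 8 / INTERFACE_BITS

print(f"Capacity per stack:  {capacity_per_stack_gb:.0f} GB")
print(f"Bandwidth per stack: {bandwidth_per_stack_tb_s:.2f} TB/s")
print(f"Implied pin rate:    {implied_pin_rate_gbps:.2f} Gbps")
```

Under these assumptions each stack holds 36 GB and delivers at least 2.75 TB/s, which implies a per-pin rate of about 10.7 Gbps, somewhat below the 11.7 Gbps quoted for Samsung's parts.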

The move also puts pressure on Micron Technology, which has been making steady gains in the U.S. market. While Micron’s HBM4 samples have shown promising results, Samsung’s ability to scale 1c DRAM by February gives it a first-mover advantage in the highest-performance tier. For tech giants like Microsoft (NASDAQ: MSFT), Google (NASDAQ: GOOGL), and Meta (NASDAQ: META), all of which are designing their own custom AI silicon, Samsung’s "one-stop" HBM4 solution offers a streamlined path to high-performance memory integration without the logistical hurdles of coordinating between multiple vendors.

Strategic advantages are also emerging for Samsung's foundry business. By proving its 4nm process on HBM4 logic dies, Samsung is presenting a competitive alternative to a supply chain centered on TSMC, whose "CoWoS" (Chip-on-Wafer-on-Substrate) technology dominates advanced packaging. This could entice other chip designers to look toward Samsung’s turnkey solutions, which combine advanced logic and memory in a single manufacturing pipeline.

Broader Significance: The Evolution of the AI Architecture

Samsung’s HBM4 breakthrough arrives at a critical juncture in the broader AI landscape. As AI models move toward "Reasoning" and "Agentic" workflows, the demand for memory bandwidth is outpacing the demand for raw compute power. HBM4 is a deeper redesign than earlier HBM generations: with advanced foundry logic in the base die, the memory stack moves from a simple storage component toward an active participant in the computing process.
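One way to see why bandwidth becomes the limiting factor: when a model generates text one token at a time, each step has to stream the model's weights out of memory, so bandwidth caps the token rate no matter how much compute is available. The sketch below is a back-of-the-envelope bound only. The model size (70 billion parameters at 2 bytes each) is a hypothetical chosen for illustration, the batch size is assumed to be 1, and caches and compute time are ignored. The 22 TB/s input is the aggregate bandwidth reported for Rubin.

```python
def max_tokens_per_second(params: float, bytes_per_param: float,
                          bandwidth_tb_s: float) -> float:
    """Upper bound on single-stream decode rate when every token
    requires reading all model weights from memory once."""
    weight_bytes = params * bytes_per_param
    bandwidth_bytes_per_s = bandwidth_tb_s * 1e12  # decimal terabytes
    return bandwidth_bytes_per_s / weight_bytes


# Hypothetical model: 70e9 parameters stored in 16-bit precision (2 bytes each).
HYPOTHETICAL_PARAMS = 70e9
BYTES_PER_PARAM = 2

# Aggregate bandwidth reported for an eight-stack Rubin GPU.
bound = max_tokens_per_second(HYPOTHETICAL_PARAMS, BYTES_PER_PARAM, 22.0)
print(f"Bandwidth-bound decode rate: about {bound:.0f} tokens/s")
```

For this hypothetical model the ceiling is roughly 157 tokens per second per stream. Doubling compute would not raise it, while doubling memory bandwidth would.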

This development also addresses the growing concerns regarding the environmental impact of AI. With the 11.7 Gbps speed achieved at lower voltage levels due to the 1c process, Samsung is helping to bend the curve of energy consumption in the data center. Previous AI milestones were often characterized by "brute force" scaling; however, the HBM4 era is defined by architectural elegance and efficiency, signaling a more sustainable path for the future of artificial intelligence.

In comparison to previous milestones, such as the transition from HBM2 to HBM3, the move to HBM4 is considered a "generational leap" rather than an incremental upgrade. The integration of 4nm foundry logic into the memory stack effectively blurs the line between memory and processor, a trend that many believe will eventually lead to fully integrated 3D-stacked chips where the GPU and RAM are inseparable.

The Horizon: 16-Layer Stacks and Customized AI

Looking ahead, the road doesn't end with the initial February production. Samsung and its rivals are already eyeing the next frontier: 16-layer HBM4 stacks. While the initial February rollout will focus on 12-layer stacks, Samsung is expected to sample 16-layer variants by mid-2026, which would push single-stack capacities to 48 GB. These high-density modules will be essential for the ultra-large-scale training required for "World Models" and advanced video generation AI.
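The capacity figures imply a fixed capacity per DRAM layer. A minimal sketch of that arithmetic, assuming every layer in a stack is an identical die (the article does not state the die density):

```python
SIXTEEN_LAYER_STACK_GB = 48  # expected capacity of a 16-layer stack
GB_TO_GBIT = 8               # 8 bits per byte

# Assumption: all layers are identical dies, so capacity divides evenly.
per_die_gb = SIXTEEN_LAYER_STACK_GB / 16
per_die_gbit = per_die_gb * GB_TO_GBIT

twelve_layer_stack_gb = per_die_gb * 12

print(f"Capacity per die:  {per_die_gb:.0f} GB ({per_die_gbit:.0f} Gbit)")
print(f"12-layer stack:    {twelve_layer_stack_gb:.0f} GB")
print(f"Eight such stacks: {twelve_layer_stack_gb * 8:.0f} GB")
```

Under that assumption each die holds 3 GB (24 Gbit), a 12-layer stack holds 36 GB, and eight 12-layer stacks total 288 GB, which matches the memory capacity reported for Rubin.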

Furthermore, the industry is moving toward "Custom HBM." In the near future, we can expect to see HBM4 stacks where the logic die is specifically designed for a single customer’s workload—such as a stack optimized specifically for Google’s TPU or Amazon’s (NASDAQ: AMZN) Trainium chips. Experts predict that by 2027, the "commodity" memory market will have largely split into standard HBM and bespoke AI memory solutions, with Samsung's foundry-memory hybrid model serving as the blueprint for this transformation.

Challenges remain, particularly regarding heat dissipation in 16-layer stacks. Samsung is currently perfecting advanced non-conductive film (NCF) bonding techniques to ensure that these towering stacks of silicon don't overheat under the intense workloads of a Rubin-class GPU. The resolution of these thermal challenges will dictate the pace of memory scaling through the end of the decade.

A New Chapter in AI History

The start of HBM4 mass production in February 2026 would mark a defining moment in the "Memory Wars." By combining 6th-generation 10nm-class (1c) DRAM with 4nm logic dies, Samsung aims not only to close the gap with its competitors but to set a new benchmark for the entire industry. The 11.7 Gbps pin speed and the reported qualification for NVIDIA’s Rubin platform position Samsung to remain at the heart of the AI revolution in the years ahead.

As the industry looks toward the NVIDIA GTC event in March, all eyes will be on how these HBM4 chips perform in real-world benchmarks. For now, Samsung has sent a clear message: it is no longer a follower in the AI market, but a leader driving the hardware capabilities that make advanced artificial intelligence possible.

The coming months will be crucial as Samsung ramps up its fabrication lines in Pyeongtaek and Hwaseong. Investors and tech analysts should watch for the first shipment reports in late February and early March, as these will provide the first concrete evidence of Samsung’s yield rates and its ability to meet the unprecedented demand of the Rubin era.

