As of January 13, 2026, the global semiconductor landscape has hardened into what analysts are calling the "Silicon Curtain," a profound geopolitical and technical bifurcation between Western and Chinese technology ecosystems. While a high-level trade truce brokered during the "Busan Rapprochement" in late 2025 prevented a total economic decoupling, the start of 2026 has been marked by the formalization of two mutually exclusive supply chains. The passage of the Remote Access Security Act in the U.S. House this week represents the final closure of the "cloud loophole," effectively treating remote access to high-end GPUs as a physical export and forcing Chinese firms to rely entirely on domestic compute or heavily taxed, monitored imports.

This shift signifies a transition from broad, reactionary trade bans to a sophisticated "two-pronged squeeze" strategy. The U.S. is now leveraging its dominance in electronic design automation (EDA) and advanced packaging to maintain a "sliding scale" of control over China’s AI capabilities. Simultaneously, China’s "Big Fund" Phase 3 has successfully localized over 35% of its semiconductor equipment, allowing firms like Huawei and SMIC to scale 5nm production despite severe lithography restrictions. This era is no longer just about who builds the fastest chip, but who can architect the most resilient and sovereign AI stack.

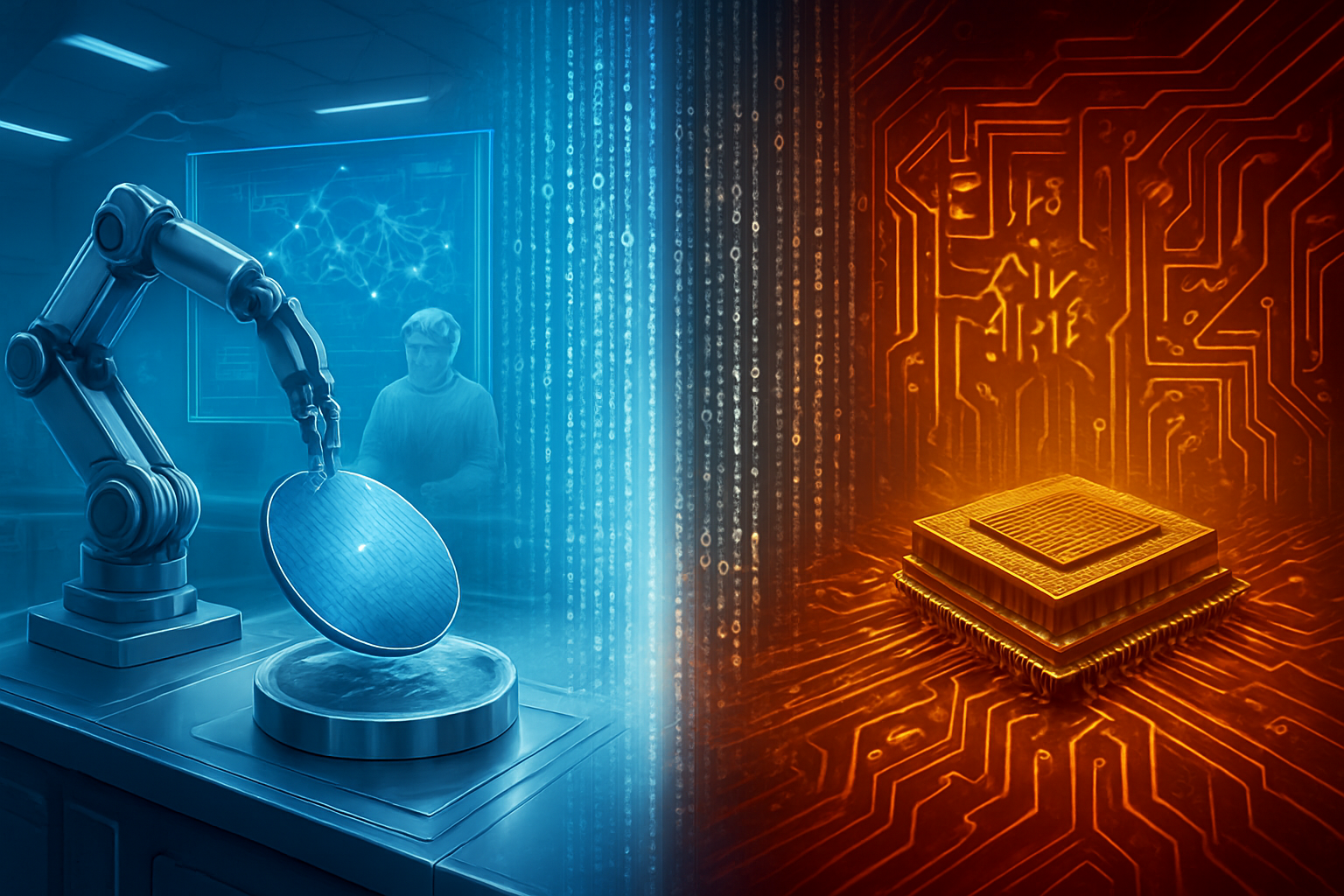

Advanced Packaging and the Race for 2nm Nodes

The technical battleground has shifted from raw transistor scaling to the frontiers of advanced packaging and chiplet architectures. As the industry approaches the physical limits of 2nm nodes, the focus in early 2026 is on 2.5D and 3D integration, specifically technologies like Taiwan Semiconductor Manufacturing Co.’s (NYSE: TSM) CoWoS (Chip-on-Wafer-on-Substrate). The U.S. has successfully localized these "backend" processes through the expansion of TSMC’s Arizona facilities and Amkor Technology’s new Peoria plant. This allows for the creation of "All-American" high-performance chips in which the silicon, interposer, and high-bandwidth memory (HBM) are integrated entirely within North American borders to ensure supply chain integrity.

In response, China has pivoted to a "lithography bypass" strategy. By utilizing domestic advanced packaging platforms such as JCET’s X-DFOI, Chinese engineers are stitching together multiple 7nm or 5nm chiplets to achieve "virtual 3nm" performance. This architectural ingenuity is supported by the new ACC 1.0 (Advanced Chiplet Cloud) standard, an indigenous interconnect protocol designed to make Chinese-made chiplets cross-compatible. While Western firms move toward the Universal Chiplet Interconnect Express (UCIe) 2.0 standard, the divergence in these protocols ensures that a chiplet designed for a Western GPU cannot be easily integrated into a Chinese system-on-chip (SoC).

Furthermore, the "Nvidia Surcharge" introduced in December 2025 has added a new layer of technical complexity. Nvidia (NASDAQ: NVDA) is now permitted to export its H200 GPUs to China, but each unit carries a mandatory 25% "Washington Tax" and integrated firmware that permits real-time auditing of compute workloads. This firmware, developed in collaboration with U.S. national labs, utilizes a "proof-of-work" verification system to ensure that the chips are not being used to train prohibited military or surveillance-grade frontier models.
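To make the surcharge arithmetic concrete, here is a minimal sketch of how a 25% levy changes the cost of an order. The unit price and order size are placeholders rather than reported figures, and the sketch assumes the levy is applied to the pre-tax sale price, which the reporting above does not specify. The function name `order_cost` is illustrative only.

```python
from decimal import Decimal

# The 25% rate comes from the article; everything else here is hypothetical.
SURCHARGE_RATE = Decimal("0.25")

def order_cost(unit_price, units, rate=SURCHARGE_RATE):
    """Return (subtotal, surcharge, total) for an order.

    Assumption: the surcharge is levied on the pre-tax sale price.
    """
    subtotal = unit_price * units
    surcharge = subtotal * rate
    return subtotal, surcharge, subtotal + surcharge

# Placeholder figures: 100 units at an assumed price of 30,000 per unit.
subtotal, surcharge, total = order_cost(Decimal("30000"), 100)
print(f"subtotal={subtotal} surcharge={surcharge} total={total}")
```

Under these assumed figures, the levy adds one quarter of the subtotal to the buyer's bill, so every four units purchased cost roughly as much as five would have without it.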

Initial reactions from the AI research community have been mixed. While some praise the "pragmatic" approach of allowing commercial sales to prevent a total market collapse, others warn that the "Silicon Curtain" is stifling global collaboration. Industry experts at the 2026 CES conference noted that the divergence in standards will likely lead to two separate AI software ecosystems, making it increasingly difficult for startups to develop cross-platform applications that work seamlessly on both Western and Chinese hardware.

Market Impact: The Re-shoring Race and the Efficiency Paradox

The current geopolitical climate has created a bifurcated market that favors companies with deep domestic ties. Intel (NASDAQ: INTC) has been a primary beneficiary, finalizing its $7.86 billion CHIPS Act award in late 2024 and reaching critical milestones for its Ohio "mega-fab." Similarly, Micron Technology (NASDAQ: MU) broke ground on its $100 billion Syracuse facility earlier this month, marking a decisive shift in HBM production toward U.S. soil. These companies are now positioned as the bedrock of a "trusted" Western supply chain, commanding premium prices for silicon that carries a "Made in USA" certification.

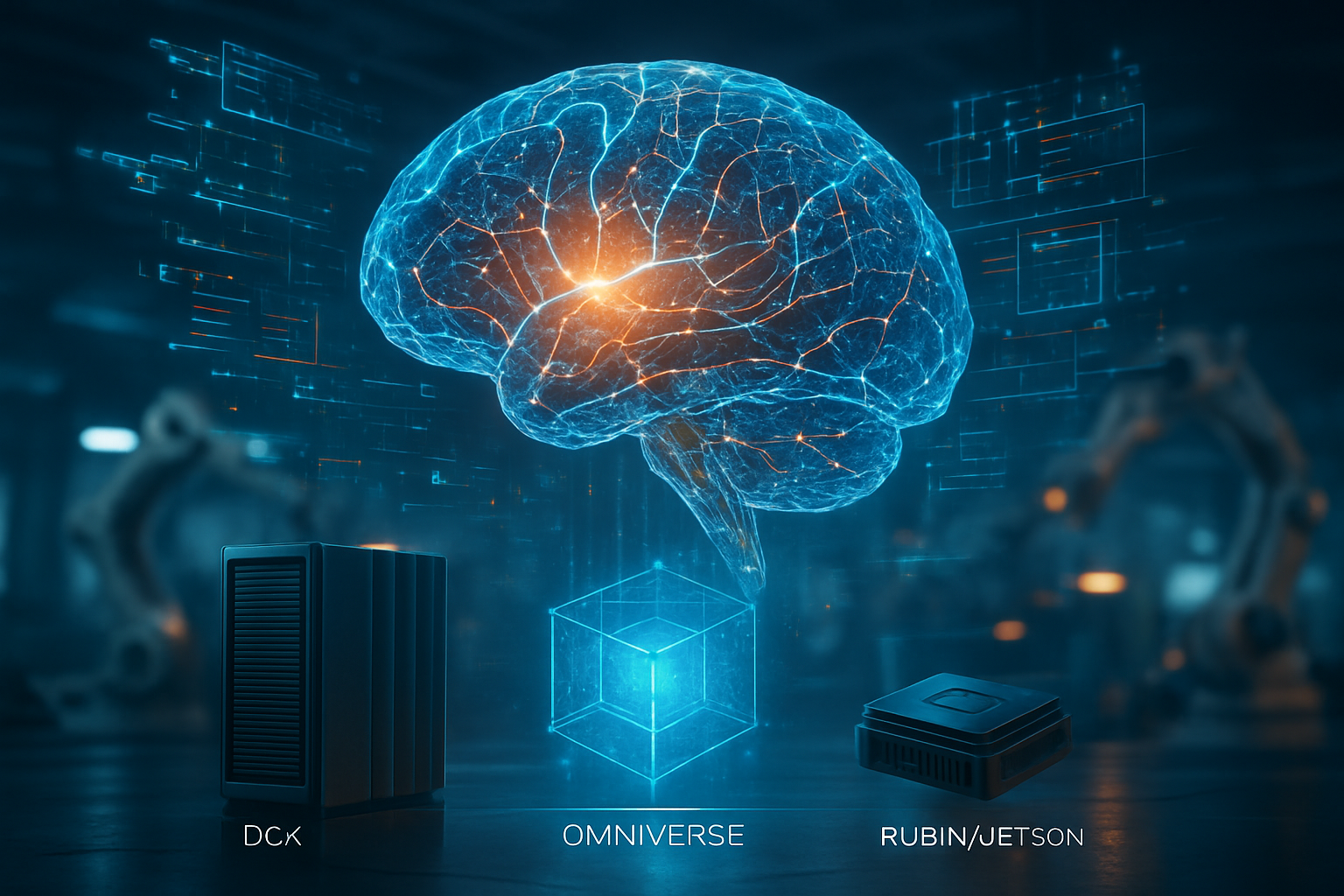

For major AI labs and tech giants like Microsoft (NASDAQ: MSFT) and Google (NASDAQ: GOOGL), the new trade regime has introduced a "compute efficiency paradox." The release of the DeepSeek-R1 model in 2025 proved that superior algorithmic architectures—specifically Mixture of Experts (MoE)—can compensate for hardware restrictions. This has forced a pivot in market positioning; instead of racing for the largest GPU clusters, companies are now competing on the efficiency of their inference stacks. Nvidia’s Blackwell architecture remains the gold standard, but the company now faces "good enough" domestic competition in China from firms like Huawei, whose Ascend 970 chips are being mandated for use by Chinese giants like ByteDance and Alibaba.
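The efficiency argument behind Mixture of Experts can be illustrated with a toy top-k router. This is a minimal sketch in plain Python, not the architecture of DeepSeek-R1 or any production model: the gate scores are supplied by hand, the "experts" are trivial functions, and the names `top_k_route`, `moe_forward`, and `active_fraction` are hypothetical. The point it shows is that only k of the available experts run for each input, so compute per token scales with k rather than with the total expert count.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_route(gate_scores, k):
    """Pick the k highest-scoring experts and normalize their weights."""
    ranked = sorted(range(len(gate_scores)),
                    key=lambda i: gate_scores[i], reverse=True)[:k]
    weights = softmax([gate_scores[i] for i in ranked])
    return list(zip(ranked, weights))

def moe_forward(x, experts, gate_scores, k=2):
    """Run only the selected experts and return their weighted sum."""
    routes = top_k_route(gate_scores, k)
    output = sum(w * experts[i](x) for i, w in routes)
    return output, routes

def active_fraction(num_experts, k):
    """Share of experts evaluated per input."""
    return k / num_experts

# Toy experts: expert i multiplies its input by (i + 1).
experts = [lambda x, s=i + 1: s * x for i in range(4)]
# Hand-written gate scores; a real model would compute these from the input.
output, routes = moe_forward(1.0, experts, [0.1, 2.0, 0.3, 2.0], k=2)
print(routes, output, active_fraction(len(experts), 2))
```

In this toy setup, half of the experts are skipped for the input. Larger models push that ratio much further, which is the sense in which routing can partly offset limits on raw hardware.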

The disruption to existing products is most visible in the cloud sector. Amazon (NASDAQ: AMZN) and other hyperscalers have had to overhaul their remote access protocols to comply with the 2026 Remote Access Security Act. This has resulted in a significant drop in international revenue from Chinese AI startups that previously relied on "renting" American compute power. Conversely, it has accelerated the growth of sovereign cloud providers in regions like the Middle East and Southeast Asia, which are attempting to position themselves as neutral "tech hubs" between the two warring factions.

Strategic advantages are now being measured in "energy sovereignty." As AI clusters grow to gigawatt scales, the proximity of semiconductor fabs to reliable, carbon-neutral energy sources has become as critical as the silicon itself. Companies that can integrate their chip manufacturing with localized power grids—such as Intel’s partnerships with renewable energy providers in the Pacific Northwest—are gaining a competitive edge in long-term operational stability over those relying on aging, centralized infrastructure.

Broader Significance: The End of Globalized Silicon

The emergence of the Silicon Curtain marks the definitive end of the "flat world" era for semiconductors. For three decades, the industry thrived on a globalized model where design happened in California, lithography in the Netherlands, manufacturing in Taiwan, and packaging in China. That model has been replaced by "Techno-Nationalism." This trend is not merely a trade war; it is a fundamental reconfiguration of the global economy where semiconductors are treated with the same strategic weight as oil or nuclear material.

This development mirrors previous milestones, such as the 1986 U.S.-Japan Semiconductor Agreement, but at a vastly larger scale. The primary concern among economists is "innovation fragmentation." When the global talent pool is divided and technical standards diverge, the rate of breakthrough discoveries in AI and materials science may slow. Furthermore, the aggressive use of rare earth "pauses" by China in late 2025, though currently suspended under the Busan trade deal, demonstrates that the supply chain remains vulnerable to "resource weaponization" at the lowest levels of the stack.

However, some argue that this competition is actually accelerating innovation. The pressure to bypass U.S. export controls led to China’s breakthrough in "virtual 3nm" packaging, while the U.S. push for self-sufficiency has revitalized its domestic manufacturing sector. The "efficiency paradox" introduced by DeepSeek-R1 has also shifted the AI community's focus away from "brute force" scaling toward more sustainable, reasoning-capable models. This shift could potentially solve the AI industry's looming energy crisis by making powerful models accessible on less energy-intensive hardware.

Future Outlook: The Race to 2nm and the STRIDE Act

Looking ahead to the remainder of 2026 and 2027, the focus will turn toward the "2nm Race." TSMC and Intel are both racing to reach high-volume manufacturing of 2nm nodes featuring Gate-All-Around (GAA) transistors. These chips will be the first to truly test the limits of current lithography technology and will likely be subject to even stricter export controls. Experts predict that the next wave of U.S. policy will focus on "Quantum-Secure Supply Chains," ensuring that the chips powering tomorrow's encryption are manufactured in environments free from foreign surveillance or "backdoor" vulnerabilities.

The newly introduced STRIDE Act (STrengthening Resilient Infrastructure and Domestic Ecosystems) is expected to be the center of legislative debate in mid-2026. This bill proposes a 10-year ban on CHIPS Act recipients using any Chinese-made semiconductor equipment, which would force a radical decoupling of the toolmaker market. If passed, it would provide a massive boost to Western toolmakers like ASML (NASDAQ: ASML) and Applied Materials, while potentially isolating Chinese firms like Naura into a "parallel" tool ecosystem that serves only the domestic market.

Challenges remain, particularly in the realm of specialized labor. Both the U.S. and China are facing significant talent shortages as they attempt to rapidly scale domestic manufacturing. The "Silicon Curtain" may eventually be defined not by who has the best machines, but by who can train and retain the largest workforce of specialized semiconductor engineers. The coming months will likely see a surge in "tech-diplomacy" as both nations compete for talent from neutral regions like India, South Korea, and the European Union.

Summary and Final Thoughts

The geopolitical climate for semiconductors in early 2026 is one of controlled escalation and strategic self-reliance. The transition from the "cloud loophole" era to the "Remote Access Security Act" regime signifies a world where compute power is a strictly guarded national resource. Key takeaways include the successful localization of advanced packaging in both the U.S. and China, the emergence of a "two-stack" technical ecosystem, and the shift toward algorithmic efficiency as a means of overcoming hardware limitations.

This development is perhaps the most significant in the history of the semiconductor industry, surpassing even the invention of the integrated circuit in its impact on global power dynamics. The "Silicon Curtain" is not just a barrier to trade; it is a blueprint for a new era of fragmented innovation. While the "Busan Rapprochement" provides a temporary buffer against total economic warfare, the underlying drive for technological sovereignty remains the dominant force in global politics.
