In a milestone that cements artificial intelligence as the most potent tool in modern science, Google DeepMind’s AlphaFold has officially surpassed 3 million users worldwide. This achievement coincides with the five-year anniversary of AlphaFold 2’s historic victory at the CASP14 competition in late 2020—an event widely regarded as the "ImageNet moment" for biology. Over the last half-decade, the platform has evolved from a grand challenge solution into a foundational utility, fundamentally altering how humanity understands the molecular machinery of life.

The significance of reaching 3 million researchers cannot be overstated. By democratizing access to high-fidelity protein structure predictions, Alphabet Inc. (NASDAQ: GOOGL) has effectively compressed centuries of traditional laboratory work into a few clicks. Determining a single structure by X-ray crystallography could once consume years of a PhD student's work. A predicted structure can now be retrieved from a database in seconds or generated computationally in minutes to hours. That shift lets the global scientific community pivot its focus from "what" a protein looks like to "how" it can be manipulated to cure diseases, combat climate change, and protect biodiversity.

From Folding Proteins to Modeling Life: The Technical Evolution

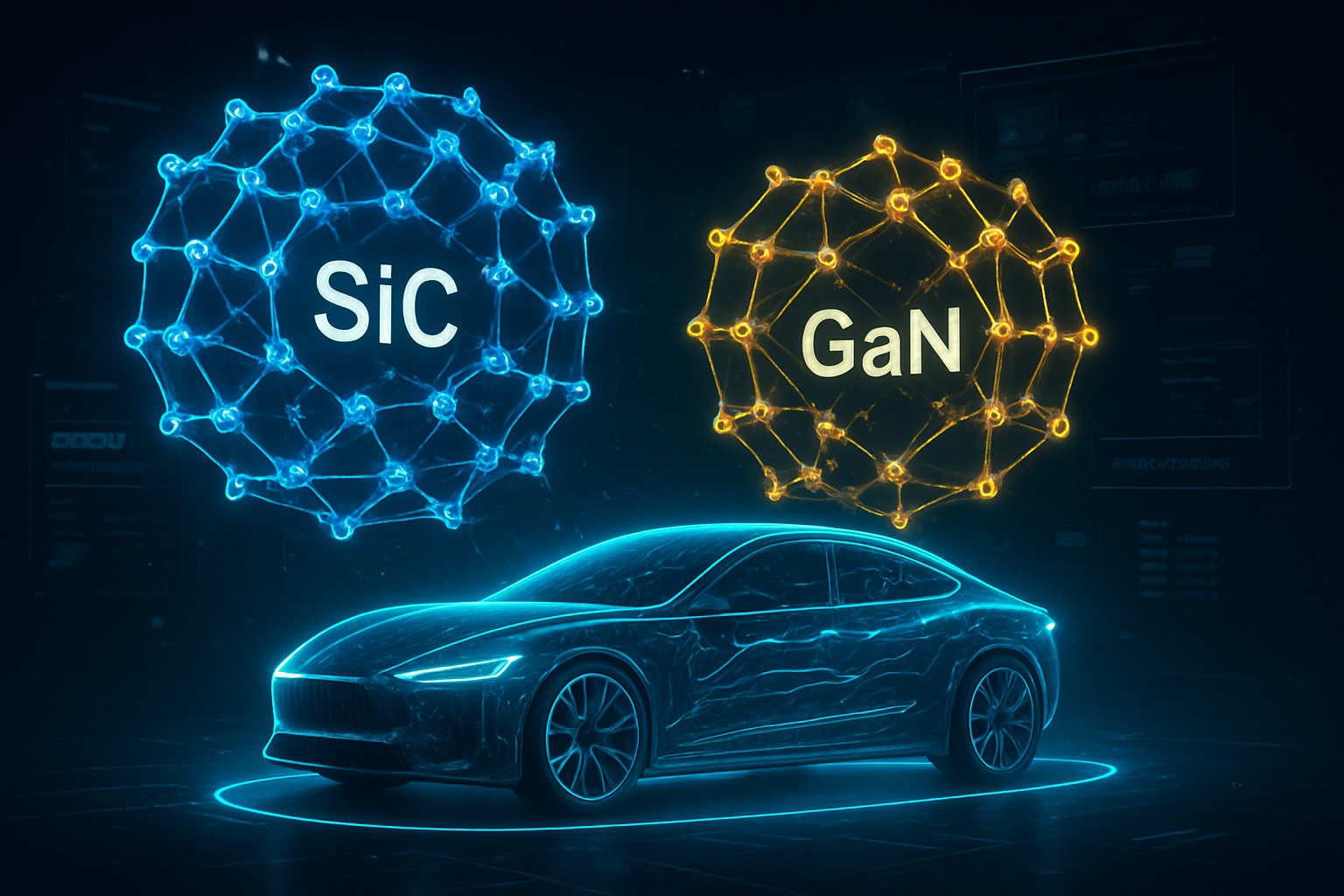

The journey from AlphaFold 2 to the current AlphaFold 3 represents a paradigm shift in computational biology. While the 2020 iteration solved the 50-year-old "protein folding problem" by predicting 3D shapes from amino acid sequences, AlphaFold 3, launched in 2024, introduced a sophisticated diffusion-based architecture. This shift allowed the model to move beyond static protein structures to predict the interactions of nearly all of life’s molecules, including DNA, RNA, ligands, and ions.

Technically, AlphaFold 3 pairs a "Pairformer" module with a diffusion engine, similar to the technology powering generative image AI. DeepMind reported that this design improved accuracy on interactions between proteins and other molecule types, including ligands, by at least 50% compared with existing prediction methods. This is critical for drug discovery, as most medicines are small molecules (ligands) that bind to specific protein targets. The AlphaFold Protein Structure Database (AFDB), maintained in partnership with EMBL-EBI, now hosts over 214 million predicted structures, covering almost every catalogued protein known to science. This "protein universe" has become a standard reference for researchers in 190 countries, with over 1 million users hailing from low- and middle-income nations.

The research community has adopted AlphaFold almost universally. DeepMind CEO Demis Hassabis and John Jumper shared half of the 2024 Nobel Prize in Chemistry for this work (the other half went to David Baker for computational protein design), a rare instance of an AI development receiving the highest honor in a traditional physical science. Experts note that AlphaFold has transitioned from a breakthrough to a "standard operating procedure," comparable to the spread of DNA sequencing in the 1990s.

The Business of Biology: Partnerships and Competitive Pressure

The commercialization of AlphaFold's insights is being spearheaded by Isomorphic Labs, an Alphabet subsidiary that has rapidly become a titan in the "TechBio" sector. In 2024 and 2025, Isomorphic secured landmark deals with pharmaceutical giants Eli Lilly and Company (NYSE: LLY) and Novartis AG (NYSE: NVS), potentially worth approximately $3 billion including milestone payments. These partnerships are focused on identifying small-molecule therapeutics for "intractable" disease targets, particularly in oncology and immunology.

However, Google is no longer the only player in the arena. The success of AlphaFold has ignited an arms race among tech giants and specialized AI labs. Microsoft Corporation (NASDAQ: MSFT), in collaboration with the Baker Lab, recently released RoseTTAFold 3, an open-source alternative that excels in de novo protein design. Meanwhile, NVIDIA Corporation (NASDAQ: NVDA) has positioned itself as the "foundry" for biological AI, offering its BioNeMo platform to help companies like Amgen and Astellas scale their own proprietary models. Meta Platforms, Inc. (NASDAQ: META) also made its mark with ESMFold, a model that trades some precision for speed, making it practical to fold massive metagenomic datasets in record time.

This competitive landscape has led to a strategic divergence. While AlphaFold remains the most cited and widely used tool for general research, newer entrants like Boltz-2 and Pearl are gaining ground in the high-value "lead optimization" market. These models provide more granular data on binding affinity (the strength of a drug's connection to its target), a quantity that AlphaFold, which predicts structures rather than binding strength, does not directly estimate.
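To put "binding affinity" in numbers: it is commonly reported as a dissociation constant, Kd, where a lower Kd means tighter binding, and it maps onto a binding free energy via ΔG = RT ln(Kd / 1 M). The short Python sketch below only illustrates that textbook conversion. It is not how Boltz-2, Pearl, or AlphaFold work internally, and it assumes room temperature (298.15 K) and a 1 M standard state; the example Kd values are illustrative, not measured data.

```python
import math

# Gas constant in kcal/(mol*K): 8.314462618 J/(mol*K) divided by 4184 J/kcal.
R_KCAL = 1.987204e-3


def binding_free_energy(kd_molar: float, temp_k: float = 298.15) -> float:
    """Return the standard binding free energy in kcal/mol.

    Assumes Kd is given in mol/L and a 1 M standard state, so
    dG = R * T * ln(Kd / 1 M). More negative values mean tighter binding.
    """
    if kd_molar <= 0:
        raise ValueError("Kd must be positive")
    return R_KCAL * temp_k * math.log(kd_molar)


# Compare a weak micromolar binder with a potent nanomolar one (illustrative values).
for label, kd in [("1 uM", 1e-6), ("1 nM", 1e-9)]:
    print(f"Kd = {label}: dG = {binding_free_energy(kd):.1f} kcal/mol")
# prints:
# Kd = 1 uM: dG = -8.2 kcal/mol
# Kd = 1 nM: dG = -12.3 kcal/mol
```

Because the relationship is logarithmic, a thousand-fold tighter Kd corresponds to only about 4.1 kcal/mol of extra binding energy at room temperature, which is one reason affinity is hard to predict precisely from structure alone.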

A Wider Significance: Nobel Prizes, Plastic-Eaters, and Biosecurity

Beyond the boardroom and the lab, AlphaFold's impact is felt in the broader effort to solve global crises. The tool has been instrumental in engineering enzymes that can break down plastic waste and in studying the proteins essential for bee conservation. In global health, more than 30% of AlphaFold-related research focuses on understanding disease, including neglected diseases such as malaria and leishmaniasis, giving researchers in developing nations tools that were previously the exclusive domain of well-funded Western institutions.

However, the rapid advancement of biological AI has also raised significant concerns. In late 2025, a landmark study showed that AI protein-design tools could be used to "paraphrase" toxic proteins, generating synthetic variants of toxins such as ricin that are predicted to keep their harmful structure yet slip past the biosecurity screening software used by DNA synthesis providers. Researchers described the finding as a biological "zero-day" vulnerability, and it added urgency to an already active regulatory debate.

In 2025, the first obligations of the EU AI Act took effect, including requirements for providers of the most capable general-purpose AI models to assess and mitigate systemic risks, and the United States issued the "Genesis Mission" Executive Order to accelerate AI-driven scientific research. Policymakers are trying to balance innovation with safety, and the debate has shifted from whether AI can solve biology to how to prevent it from being used to create "dual-use" biological threats.

The Horizon: Virtual Cells and Clinical Trials

As AlphaFold enters its sixth year, the focus is shifting from structure to systems. Demis Hassabis has articulated a vision for the "virtual cell," a comprehensive computer model that could simulate the full complexity of a biological cell in real time. Such a breakthrough would allow scientists to test a drug's effects on a whole system before a single drop of liquid is touched in a lab, potentially lowering the roughly 90% failure rate of drug candidates in clinical trials.

In the near term, the industry is watching Isomorphic Labs as it prepares for its first human clinical trials. Expected to begin in early 2026, these trials will be the ultimate test of whether AI-designed molecules can outperform those discovered through traditional methods. If successful, it will mark the beginning of an era where medicine is "designed" rather than "discovered."

Challenges remain, particularly in modeling the dynamic "dance" of proteins: how they move and change shape over time. While AlphaFold 3 provides a high-resolution snapshot, newer models such as Microsoft's BioEmu aim to capture the range of shapes a protein can adopt, a step toward depicting molecular motion rather than a single frozen pose.

A Five-Year Retrospective

Looking back from the vantage point of December 2025, AlphaFold stands as a singular achievement in the history of science. It has not only solved a 50-year-old mystery but has also provided a blueprint for how AI can be applied to other "grand challenges" in physics, materials science, and climate modeling. The milestone of 3 million researchers is a testament to the power of open (or semi-open) science to accelerate human progress.

In the coming months, the tech world will be watching for results from Isomorphic Labs' first "AI-native" drug candidates as they enter Phase I trials, and for the continued regulatory response to biosecurity risks. One thing is certain: the biological revolution is no longer a future prospect. It is a present reality, and it is being written in the language of AlphaFold.

This content is intended for informational purposes only and represents analysis of current AI developments.

TokenRing AI delivers enterprise-grade solutions for multi-agent AI workflow orchestration, AI-powered development tools, and seamless remote collaboration platforms.

For more information, visit https://www.tokenring.ai/.