The semiconductor industry is on the cusp of a profound transformation, driven by a relentless pursuit of innovation in manufacturing techniques, materials science, and methodologies. As traditional transistor scaling (the trend described by Moore's Law) becomes increasingly difficult to sustain, a new wave of advancements is emerging to overcome current manufacturing hurdles and dramatically enhance chip performance. These developments are not merely incremental improvements; they represent fundamental shifts that are critical for powering the next generation of artificial intelligence, high-performance computing, 5G/6G networks, and the burgeoning Internet of Things. The immediate significance of these breakthroughs is the promise of smaller, faster, more energy-efficient, and more capable electronic devices across every sector, from consumer electronics to advanced industrial applications.

Engineering the Future: Technical Leaps in Chip Fabrication

The core of this revolution lies in several key technical areas, each pushing the boundaries of what's possible in chip design and production. At the forefront is advanced lithography, with Extreme Ultraviolet (EUV) technology now a mature process for sub-7 nanometer (nm) nodes. The industry is rapidly progressing towards High-Numerical Aperture (High-NA) EUV lithography, which aims to enable sub-2nm process nodes, further shrinking transistor dimensions. This is complemented by sophisticated multi-patterning techniques and advanced alignment stations, such as Nikon's Litho Booster 1000, which enhance overlay accuracy for complex 3D device structures, significantly improving process control and yield.
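To see why a higher numerical aperture matters, the Rayleigh criterion offers a rough guide: the smallest printable half-pitch is approximately k1 × wavelength / NA. The sketch below applies it to EUV light (13.5 nm) at the 0.33 NA of current EUV scanners and the 0.55 NA of High-NA systems. The process factor k1 = 0.3 is an illustrative assumption, not a figure from any vendor, and the function name is hypothetical. Note that node names such as "2nm" are commercial labels and do not equal the physical half-pitch computed here.

```python
EUV_WAVELENGTH_NM = 13.5  # wavelength of EUV light


def min_half_pitch_nm(numerical_aperture: float,
                      k1: float = 0.3,  # assumed process factor, illustrative only
                      wavelength_nm: float = EUV_WAVELENGTH_NM) -> float:
    """Rayleigh criterion: half-pitch ~= k1 * wavelength / NA."""
    if numerical_aperture <= 0:
        raise ValueError("numerical_aperture must be positive")
    return k1 * wavelength_nm / numerical_aperture


standard_euv = min_half_pitch_nm(0.33)  # current EUV optics
high_na_euv = min_half_pitch_nm(0.55)   # High-NA EUV optics

print(f"0.33 NA: ~{standard_euv:.1f} nm half-pitch")
print(f"0.55 NA: ~{high_na_euv:.1f} nm half-pitch")
print(f"Feature size shrinks by a factor of {high_na_euv / standard_euv:.2f}")
```

Under these assumptions, moving from 0.33 NA to 0.55 NA reduces the single-exposure half-pitch to about 60% of its previous value, which is why High-NA can reduce reliance on multi-patterning for the finest layers.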

Beyond shrinking transistors, 3D stacking and advanced packaging are redefining chip integration. 3D stacking vertically integrates multiple semiconductor dies (chips) connected by through-silicon vias (TSVs), drastically reducing footprint and improving performance through shorter interconnects. Companies like Taiwan Semiconductor Manufacturing Company (NYSE: TSM) with its 3DFabric and Intel Corporation (NASDAQ: INTC) with Foveros are leading this charge. Furthermore, chiplet architectures and heterogeneous integration, where specialized "chiplets" are fabricated separately and then integrated into a single package, allow for unprecedented flexibility, scalability, and the combination of diverse technologies. This approach is evident in products from Advanced Micro Devices (NASDAQ: AMD) and NVIDIA Corporation (NASDAQ: NVDA), which use chiplets in their CPUs and GPUs, as well as in Intel's Embedded Multi-die Interconnect Bridge (EMIB) technology.
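One commonly cited benefit of fabricating chiplets separately is manufacturing yield, since a random defect ruins a small die rather than a large one. The article does not quantify this, so the sketch below is purely illustrative: it uses the simple Poisson yield model (yield = exp(-area × defect density)), and the die areas and defect density are assumed values, not data from any foundry. It also ignores packaging and assembly losses, which add cost in practice.

```python
import math


def poisson_die_yield(area_cm2: float, defect_density_per_cm2: float) -> float:
    """Poisson yield model: probability that a die has zero killer defects."""
    return math.exp(-area_cm2 * defect_density_per_cm2)


# Assumed, illustrative values (not foundry data).
DEFECT_DENSITY = 0.1    # killer defects per cm^2
MONOLITHIC_AREA = 8.0   # one large die, cm^2
CHIPLET_AREA = 2.0      # each of four chiplets covering the same total area, cm^2

monolithic_yield = poisson_die_yield(MONOLITHIC_AREA, DEFECT_DENSITY)
chiplet_yield = poisson_die_yield(CHIPLET_AREA, DEFECT_DENSITY)

print(f"Monolithic die yield: {monolithic_yield:.1%}")
print(f"Per-chiplet yield:    {chiplet_yield:.1%}")
```

If chiplets are tested before assembly so that only known-good dies are packaged, the per-chiplet figure approximates the fraction of fabricated silicon that ends up usable, compared with the lower monolithic figure for the same total area.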

The fundamental building blocks of chips are also evolving with next-generation transistor architectures. The industry is transitioning from FinFETs to Gate-All-Around (GAA) transistors, including nanosheet and nanowire designs. GAA transistors offer superior electrostatic control by wrapping the gate around all sides of the channel, leading to significantly reduced leakage current, improved power efficiency, and enhanced performance scaling crucial for demanding applications like AI. Intel's RibbonFET and Samsung Electronics Co., Ltd.'s (KRX: 005930) Multi-Bridge Channel FET (MBCFET) are prime examples of this shift. These advancements differ from previous approaches by moving beyond the two-dimensional scaling limits of traditional silicon, embracing vertical integration, modular design, and novel material properties to achieve continued performance gains. Initial reactions from the AI research community and industry experts are overwhelmingly positive, recognizing these innovations as essential for sustaining the rapid pace of technological progress and enabling the next wave of AI capabilities.

Corporate Battlegrounds: Reshaping the Tech Industry's Competitive Landscape

The profound advancements in semiconductor manufacturing are creating new battlegrounds and strategic advantages across the tech industry, significantly impacting AI companies, tech giants, and innovative startups. Companies that can leverage these cutting-edge techniques and materials stand to gain immense competitive advantages, while others risk disruption.

At the forefront of beneficiaries are the leading foundries and chip designers. Taiwan Semiconductor Manufacturing Company (NYSE: TSM) and Samsung Electronics Co., Ltd. (KRX: 005930), as pioneers in advanced process nodes like 3nm and 2nm, are experiencing robust demand driven by AI workloads. Similarly, fabless chip designers like NVIDIA Corporation (NASDAQ: NVDA), Advanced Micro Devices (NASDAQ: AMD), Marvell Technology, Inc. (NASDAQ: MRVL), Broadcom Inc. (NASDAQ: AVGO), and Qualcomm Incorporated (NASDAQ: QCOM) are exceptionally well-positioned due to their focus on high-performance GPUs, custom compute solutions, and AI-driven processors. The equipment manufacturers, most notably ASML Holding N.V. (NASDAQ: ASML) with its near-monopoly in EUV lithography, and Applied Materials, Inc. (NASDAQ: AMAT), providing crucial fabrication support, are indispensable enablers of this technological leap and are poised for substantial growth.

The competitive implications for major AI labs and tech giants are particularly intense. Hyperscale cloud providers such as Alphabet Inc. (Google) (NASDAQ: GOOGL), Amazon.com, Inc. (NASDAQ: AMZN), Microsoft Corporation (NASDAQ: MSFT), and Meta Platforms, Inc. (NASDAQ: META) are investing hundreds of billions in capital expenditure to build their AI infrastructure. A significant trend is their strategic development of custom AI Application-Specific Integrated Circuits (ASICs), which grants them greater control over performance, cost, and supply chain. This move towards in-house chip design could potentially disrupt the market for off-the-shelf AI accelerators traditionally offered by semiconductor vendors. While these tech giants remain heavily reliant on advanced foundries for cutting-edge nodes, their vertical integration strategy is accelerating, elevating hardware control to a strategic asset as crucial as software innovation.

For startups, the landscape presents both formidable challenges and exciting opportunities. The immense capital investment required for R&D and state-of-the-art fabrication facilities creates high barriers to entry for manufacturing. However, opportunities abound for new domestic semiconductor design startups, particularly those focusing on niche markets or specialized technologies. Government incentives, such as the U.S. CHIPS Act, are designed to foster these new players and build a more resilient domestic ecosystem. Programs like "Startups for Sustainable Semiconductors (S3)" are emerging to provide crucial mentoring and customer access, helping innovative AI-focused startups navigate the complexities of chip production. Ultimately, market positioning is increasingly defined by access to advanced fabrication capabilities, resilient supply chains, and continuous investment in R&D and technology leadership, all underpinned by the strategic importance of semiconductors in national security and economic dominance.

A New Foundation: Broader Implications for AI and Society

The ongoing revolution in semiconductor manufacturing extends far beyond the confines of fabrication plants, fundamentally reshaping the broader AI landscape and driving profound societal impacts. These advancements are not isolated technical feats but represent a critical enabler for the accelerating pace of AI development, creating a virtuous cycle where more powerful chips fuel AI breakthroughs, and AI, in turn, optimizes chip design and manufacturing.

This era of "More than Moore" innovation, characterized by advanced packaging techniques like 2.5D and 3D stacking (e.g., TSMC's CoWoS used in NVIDIA's GPUs) and chiplet architectures, addresses the physical limits of traditional transistor scaling. By vertically integrating multiple layers of silicon and employing ultra-fine hybrid bonding, these methods dramatically shorten data travel distances, reducing latency and power consumption. This directly fuels the insatiable demand for computational power from cutting-edge AI, particularly large language models (LLMs) and generative AI, which require massive parallelization and computational efficiency. Furthermore, the rise of specialized AI chips – including GPUs, Tensor Processing Units (TPUs), Application-Specific Integrated Circuits (ASICs), and Neural Processing Units (NPUs) – optimized for specific AI workloads like image recognition and natural language processing, is a direct outcome of these manufacturing breakthroughs.
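The link between shorter data paths and lower power can be illustrated with a lumped-capacitance model: charging a wire once costs roughly 0.5 × C × V², and C grows with wire length. The sketch below is a minimal illustration under assumed values; the capacitance per millimeter, the supply voltage, and both path lengths are hypothetical round numbers, and real vertical connections have different electrical characteristics than planar wires.

```python
def switching_energy_fj(length_mm: float,
                        cap_ff_per_mm: float = 200.0,  # assumed wire capacitance
                        voltage_v: float = 0.8) -> float:  # assumed supply voltage
    """Energy to charge a wire once: 0.5 * C * V^2, with C proportional to length.

    Capacitance in femtofarads times volts squared gives femtojoules.
    """
    capacitance_ff = cap_ff_per_mm * length_mm
    return 0.5 * capacitance_ff * voltage_v ** 2


# Hypothetical path lengths, for illustration only.
planar_path_mm = 10.0    # signal routed across a package
stacked_path_mm = 0.05   # signal routed vertically between stacked dies

planar_energy = switching_energy_fj(planar_path_mm)
stacked_energy = switching_energy_fj(stacked_path_mm)

print(f"Planar path:  {planar_energy:.1f} fJ per transition")
print(f"Stacked path: {stacked_energy:.1f} fJ per transition")
print(f"Reduction:    {planar_energy / stacked_energy:.0f}x")
```

In this simplified model the energy saving is exactly the ratio of the two path lengths, which is the intuition behind the claim that vertical integration cuts power for data movement.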

The societal impacts are far-reaching. More powerful and efficient chips will accelerate the integration of AI into nearly every aspect of human life, from transforming healthcare and smart cities to enhancing transportation through autonomous vehicles and revolutionizing industrial automation. The semiconductor industry, projected to be a trillion-dollar market by 2030, is a cornerstone of global economic growth, with AI-driven hardware demand fueling significant R&D and capital expansion. Increased power efficiency from optimized chip designs also contributes to greater sustainability, making AI more cost-effective and environmentally responsible to operate at scale. This moment is comparable to previous AI milestones, such as the advent of GPUs for parallel processing or DeepMind's AlphaGo surpassing human champions in Go; it represents a foundational shift that enables the next wave of algorithmic breakthroughs and a "Cambrian explosion" in AI capabilities.

However, these advancements also bring significant concerns. The complexity and cost of designing, manufacturing, and testing 3D stacked chips and chiplet systems are substantially higher than traditional monolithic designs. Geopolitical tensions exacerbate supply chain vulnerabilities, given the concentration of advanced chip production in a few regions, leading to a fierce global competition for technological dominance and raising concerns about national security. The immense energy consumption of advanced AI, particularly large data centers, presents environmental challenges, while the increasing capabilities of AI, powered by these chips, underscore ethical considerations related to bias, accountability, and responsible deployment. The global reliance on a handful of advanced chip manufacturers also creates potential power imbalances and technological dependence, necessitating careful navigation and sustained innovation to mitigate these risks.

The Road Ahead: Future Developments and Horizon Applications

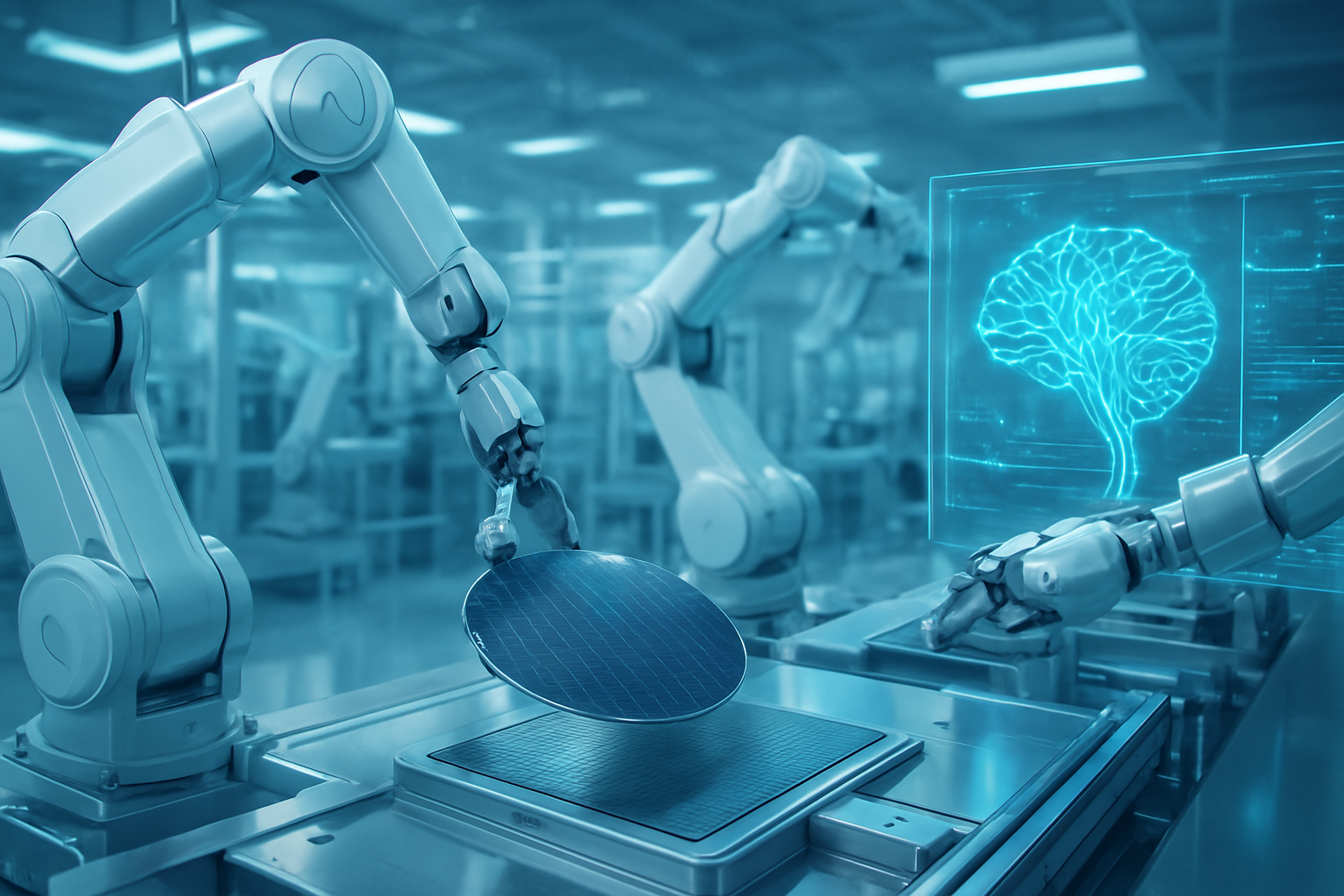

The trajectory of semiconductor manufacturing points towards a future characterized by both continued refinement of existing technologies and the exploration of entirely new paradigms. In the near term, advanced lithography will continue its march, with High-NA EUV pushing towards sub-2nm and even Beyond EUV (BEUV) being explored. The transition to Gate-All-Around (GAA) transistors is becoming mainstream for sub-3nm nodes, promising enhanced power efficiency and performance through superior channel control. Simultaneously, 3D stacking and chiplet architectures will see significant expansion, with advanced packaging techniques like CoWoS experiencing increased capacity to meet the surging demand for high-performance computing (HPC) and AI accelerators. Automation and AI-driven optimization will become even more pervasive in fabs, leveraging machine learning for predictive maintenance, defect detection, and yield enhancement, thereby streamlining production and accelerating time-to-market.

Looking further ahead, the industry will intensify its exploration of novel materials beyond silicon. Wide-bandgap semiconductors like Gallium Nitride (GaN) and Silicon Carbide (SiC) will become standard in high-power, high-frequency applications such as 5G/6G base stations, electric vehicles, and renewable energy systems. Long-term research will focus on 2D materials like graphene and molybdenum disulfide (MoS2) for ultra-thin, highly efficient transistors and flexible electronics. Methodologically, AI-enhanced design and verification will evolve, with generative AI automating complex design workflows from architecture to physical layout, significantly shortening design cycles. The trend towards heterogeneous computing integration, combining CPUs, GPUs, FPGAs, and specialized AI accelerators into unified architectures, will become the norm for optimizing diverse workloads.

These advancements will unlock a vast array of potential applications. In AI, specialized chips will continue to power ever more sophisticated algorithms and deep learning models, enabling breakthroughs in areas from personalized medicine to autonomous decision-making. Advanced semiconductors are indispensable for the expansion of 5G and future 6G wireless communication, requiring high-speed transceivers and optical switches. Autonomous vehicles will rely on these chips for real-time sensor processing and enhanced safety. In healthcare, miniaturized, powerful processors will lead to more accurate wearable health monitors, implantable devices, and advanced lab-on-a-chip diagnostics. The Internet of Things (IoT) and smart cities will see seamless connectivity and processing at the edge, while flexible electronics and even silicon-based qubits for quantum computing remain exciting, albeit long-term, prospects.

However, significant challenges loom. The rising capital intensity of advanced fabs, with costs now exceeding $30 billion, presents a formidable barrier. Geopolitical fragmentation and the concentration of critical manufacturing in a few regions create persistent supply chain vulnerabilities. The industry also faces a talent shortage, particularly for engineers and technicians skilled in AI and advanced robotics. Experts predict continued market growth, potentially reaching $1 trillion by 2030, with AI and HPC remaining the primary drivers. There will be a sustained surge in demand for advanced packaging, a shift towards domain-specific and specialized chips facilitated by generative AI, and a strong trend towards the regionalization of manufacturing to enhance supply chain resilience. Sustainability will become an even greater imperative, with companies investing in energy-efficient production and green chemistry. The relentless pace of innovation, driven by the symbiotic relationship between AI and semiconductor technology, will continue to define the technological landscape for decades to come.

The Microcosm's Macro Impact: A Concluding Assessment

The semiconductor industry stands at a pivotal juncture, where a convergence of groundbreaking techniques, novel materials, and AI-driven methodologies is redefining the very essence of chip performance and manufacturing. From the precision of High-NA EUV lithography and the architectural ingenuity of 3D stacking and chiplet designs to the fundamental shift towards Gate-All-Around transistors and the integration of advanced materials like GaN and SiC, these developments are collectively overcoming long-standing manufacturing hurdles and extending the capabilities of digital technology far beyond the traditional limits of Moore's Law. The immediate significance is clear: an accelerated path to more powerful, energy-efficient, and intelligent devices that will underpin the next wave of innovation across AI, 5G/6G, IoT, and high-performance computing.

This era marks a profound transformation for the tech industry, creating a highly competitive landscape where access to cutting-edge fabrication, robust supply chains, and strategic investments in R&D are paramount. While leading foundries and chip designers stand to benefit immensely, tech giants are increasingly pursuing vertical integration with custom silicon, challenging traditional market dynamics. For society, these advancements promise ubiquitous AI integration, economic growth, and transformative applications in healthcare, transportation, and smart infrastructure. However, the journey is not without its complexities, including escalating costs, geopolitical vulnerabilities in the supply chain, and the critical need to address environmental impacts and ethical considerations surrounding powerful AI.

In the grand narrative of AI history, the current advancements in semiconductor manufacturing represent a foundational shift, akin to the invention of the transistor itself or the advent of GPUs that first unlocked parallel processing for deep learning. They provide the essential hardware substrate upon which future algorithmic breakthroughs will be built, fostering a virtuous cycle of innovation. As we move into the coming weeks and months, the industry will be closely watching the deployment of High-NA EUV, the widespread adoption of GAA transistors, further advancements in 3D packaging capacity, and the continued integration of AI into every facet of chip design and production. The race for semiconductor supremacy is more than an economic competition; it is a determinant of technological leadership and societal progress in the digital age.

This content is intended for informational purposes only and represents analysis of current AI developments.
