The global semiconductor landscape has reached a historic inflection point as reports emerge that Apple Inc. (NASDAQ: AAPL) and Amazon.com, Inc. (NASDAQ: AMZN) have signed on as anchor customers for Intel Corporation’s (NASDAQ: INTC) 18A (1.8nm-class) foundry services. This development marks the most significant validation to date of Intel’s ambitious "IDM 2.0" strategy and positions the American chipmaker as a formidable rival to Taiwan Semiconductor Manufacturing Company (NYSE: TSM), commonly known as TSMC.

For the first time in over a decade, leading-edge chip manufacturing is not the exclusive domain of Asian foundries. Amazon’s commitment involves a multi-billion-dollar expansion to produce custom AI fabric chips, while Apple has reportedly qualified the 18A process family for its next generation of entry-level M-series processors. These partnerships are more than business contracts. They signal a strategic realignment of the world’s most powerful tech giants toward a more diversified and geographically resilient supply chain.

The 18A Breakthrough: PowerVia and RibbonFET Redefine Efficiency

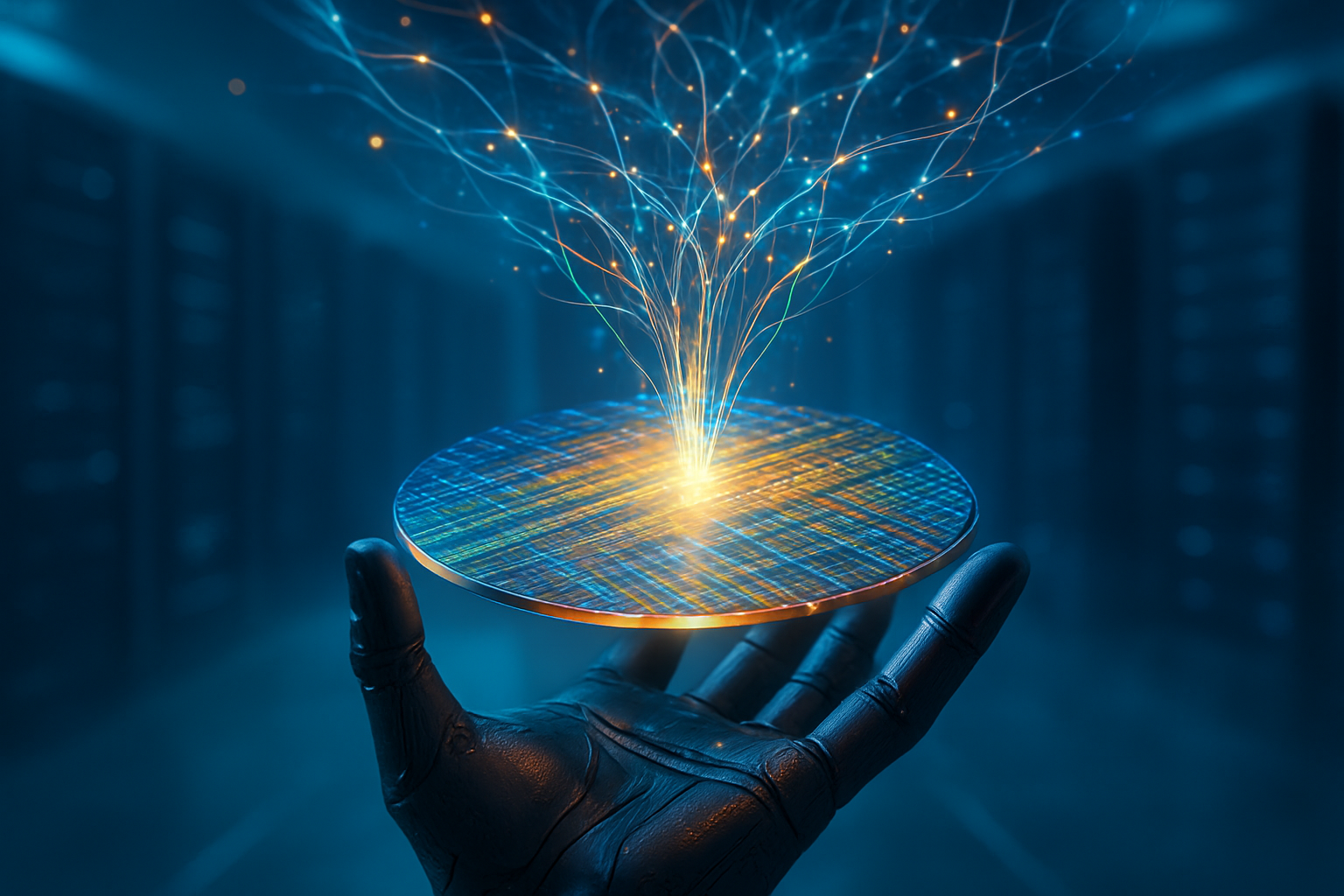

Technically, Intel’s 18A node is not an incremental upgrade but a major shift in transistor architecture. It introduces two key technologies: RibbonFET and PowerVia. RibbonFET is Intel’s implementation of Gate-All-Around (GAA) transistors, which provide better electrostatic control and higher drive current at lower voltages. However, the real "secret sauce" is PowerVia, a backside power delivery system that separates power routing from signal routing. By moving power lines to the back of the wafer, Intel relieves the wiring congestion that typically plagues advanced nodes, contributing to a projected 10-15% improvement in performance-per-watt over Intel 3, the company’s preceding node.

As of January 2026, Intel’s 18A has entered high-volume manufacturing (HVM) at its Fab 52 facility in Arizona. While TSMC’s N2 node maintains a slight lead in raw transistor density, Intel’s 18A may hold the performance edge through the first half of 2026 thanks to its early adoption of backside power delivery, a feature TSMC is not expected to introduce until its A16 node later this year. Initial reactions from the AI research community have been overwhelmingly positive, with experts noting that the 18A process is well suited to the high-bandwidth, low-latency requirements of modern AI accelerators.

A New Global Order: The Strategic Realignment of Big Tech

The implications for the competitive landscape are profound. Amazon’s decision to fabricate its "AI fabric chip" on 18A is a direct play to scale its internal AI infrastructure. These chips are designed to support NeuronLink, the high-speed interconnect used in Amazon’s Trainium and Inferentia AI chips. By moving this production to Intel’s domestic fabs, Amazon (NASDAQ: AMZN) reduces its reliance on a strained global supply chain while gaining access to Intel’s advanced packaging capabilities.

Apple’s move is arguably more seismic. Long considered TSMC’s most loyal and important customer, Apple (NASDAQ: AAPL) is reportedly using Intel’s 18A-P (a performance-enhanced version of 18A) for the entry-level M-series SoCs found in the MacBook Air and iPad Pro. While Apple’s flagship iPhone chips remain on TSMC’s roadmap for now, the diversification into Intel Foundry suggests a "Taiwan+1" strategy designed to hedge against geopolitical risk in the Taiwan Strait. The move puts immense pressure on TSMC (NYSE: TSM) to defend its pricing power and technological lead. At the same time, it gives Intel the marquee-customer validation it needs to attract other major fabless firms such as Nvidia (NASDAQ: NVDA) and Advanced Micro Devices, Inc. (NASDAQ: AMD).

De-risking the Digital Frontier: Geopolitics and the AI Hardware Boom

The broader significance of these agreements lies in the concept of silicon sovereignty. Supported by the U.S. CHIPS and Science Act, Intel has positioned itself as a "National Strategic Asset." The successful ramp of 18A in Arizona gives the United States a domestic leading-edge (1.8nm-class) manufacturing capability, a milestone that seemed out of reach during Intel’s manufacturing stumbles of the late 2010s. The shift comes just as the "AI PC" market takes off. Industry forecasts expect that by late 2026, half of all PC shipments will feature high-TOPS NPUs capable of running generative AI models locally.

Furthermore, this development challenges the position of Samsung Electronics (KRX: 005930), which has struggled with yield issues on its own 2nm GAA process. With Intel reportedly reaching a 60-70% yield threshold on 18A, the leading edge is effectively consolidating into a duopoly. Onshoring is no longer a political talking point but a commercial reality, as tech giants prioritize supply chain certainty over marginal cost savings.

The Road to 14A: What’s Next for the Silicon Renaissance

Looking ahead, the industry is already shifting its focus to the next frontier: Intel’s 14A node. Expected to enter production by 2027, 14A is slated to be the world’s first process to use High-NA EUV (Extreme Ultraviolet) lithography at scale. Analyst reports suggest that Apple is already eyeing 14A for its 2028 iPhone "A22" chips, a move that could shift production of Apple’s most valuable silicon to American soil.

Near-term challenges remain, however. Intel must prove it can meet the massive volume requirements of both Apple and Amazon simultaneously without compromising yields for its internal products, such as the newly launched Panther Lake processors. Advanced packaging, specifically Intel’s Foveros 3D stacking technology, will also be critical for the multi-die architectures that Amazon’s AI fabric chips require.

A Turning Point in Semiconductor History

The reports of Apple and Amazon joining Intel 18A represent arguably the most significant shift in the semiconductor industry in twenty years. They mark the end of an era in which leading-edge manufacturing was synonymous with a single geographic region and a single company. Intel says it has completed its "Five Nodes in Four Years" roadmap (although the 20A node was dropped from products in favor of 18A), culminating in a process that has attracted the world’s most demanding silicon customers.

As we move through 2026, the key metrics to watch will be the final yield rates of the 18A process and the performance benchmarks of the first consumer products built on it. If Intel delivers on its promises, the 18A era may be remembered as the moment the silicon balance of power shifted back to the West, fueled by insatiable demand for AI and the strategic necessity of supply chain resilience.

This content is intended for informational purposes only and represents analysis of current AI developments.

TokenRing AI delivers enterprise-grade solutions for multi-agent AI workflow orchestration, AI-powered development tools, and seamless remote collaboration platforms.

For more information, visit https://www.tokenring.ai/.