The era of "clean-only" energy for Silicon Valley has entered a radical new phase. As of January 6, 2026, the global race for Artificial Intelligence dominance has collided with the physical limits of the power grid, forcing a historic pivot toward the one energy source capable of sustaining the "insatiable" appetite of next-generation neural networks: nuclear power. In what industry analysts are calling the "Great Nuclear Renaissance," the world’s largest technology companies are no longer content with purchasing carbon credits from wind and solar farms; they are now buying, reviving, and building nuclear reactors to secure the 24/7 "baseload" power required to train the AGI-scale models of the future.

This transition marks a fundamental shift in the tech industry's relationship with infrastructure. With global data center electricity consumption projected to reach as much as 1,050 terawatt-hours (TWh) this year, nearly double the levels seen in 2023, the bottleneck for AI progress has moved from the availability of high-end GPUs to the availability of gigawatt-scale electricity. For giants like Microsoft, Google, and Amazon, the choice was clear: embrace the atom or risk being left behind in a power-starved digital landscape.

The Technical Blueprint: From Three Mile Island to Modular Reactors

The most symbolic moment of this pivot came with the rebranding and technical refurbishment of one of the most infamous sites in American energy history. Microsoft (NASDAQ: MSFT) has partnered with Constellation Energy (NASDAQ: CEG) to restart Unit 1 of the Three Mile Island facility, now known as the Crane Clean Energy Center (CCEC). As of early 2026, the project is in an intensive technical phase, with over 500 on-site employees and a successful series of turbine and generator tests completed in late 2025. Backed by a $1 billion U.S. Department of Energy loan, the 835-megawatt facility is on track to come back online by 2027—a full year ahead of original estimates—dedicated entirely to powering Microsoft’s AI clusters on the PJM grid.
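For a sense of scale, the short Python sketch below estimates how much electricity an 835-megawatt reactor like Crane could deliver in a year and compares it with the 1,050 TWh global projection. The 90% capacity factor is an illustrative assumption, not a figure from the project, and the helper name `annual_energy_twh` is hypothetical.

```python
# Rough annual output of a single reactor running near-continuously.
# Assumption (not from the article): a 90% capacity factor, used here only
# as a ballpark; 100% would mean running at full power every hour of the year.

HOURS_PER_YEAR = 8_760  # 365 days x 24 hours (ignores leap years)


def annual_energy_twh(capacity_mw: float, capacity_factor: float) -> float:
    """Return annual generation in terawatt-hours (1 TWh = 1,000,000 MWh)."""
    megawatt_hours = capacity_mw * HOURS_PER_YEAR * capacity_factor
    return megawatt_hours / 1_000_000


crane_twh = annual_energy_twh(835, 0.90)
# Share of the 1,050 TWh projection for global data center demand.
share = crane_twh / 1_050

print(f"Crane output: {crane_twh:.2f} TWh/yr ({share:.2%} of 1,050 TWh)")
```

Under these assumptions the plant would produce roughly 6.6 TWh a year, well under 1% of the projected global data center total, a reminder of how much firm capacity AI-scale demand implies.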

While Microsoft focuses on reviving established fission, Alphabet's Google (NASDAQ: GOOGL) is betting on the future of Generation IV reactor technology. In 2025, Google signed a landmark Power Purchase Agreement (PPA) with Kairos Power and the Tennessee Valley Authority (TVA). This deal centers on the "Hermes 2" demonstration reactor, a 50-megawatt plant in Oak Ridge, Tennessee. Unlike traditional water-cooled reactors, Kairos uses a fluoride salt-cooled high-temperature design, which is intended to offer enhanced safety and modularity. Google's "order book" strategy aims to deploy a fleet of these Small Modular Reactors (SMRs) to provide 500 megawatts of carbon-free power by 2035.

Amazon (NASDAQ: AMZN) has taken a multi-pronged approach to secure its energy future. Following a complex regulatory battle with the Federal Energy Regulatory Commission (FERC) over "behind-the-meter" power delivery, Amazon and Talen Energy (NASDAQ: TLN) successfully restructured a deal to pull up to 1,920 megawatts from the Susquehanna nuclear plant in Pennsylvania. Simultaneously, Amazon is investing heavily in SMR development through X-energy. Their joint project, the Cascade Advanced Energy Facility in Washington State, recently expanded its plans from 320 megawatts to a potential 960-megawatt capacity, utilizing the Xe-100 high-temperature gas-cooled reactor.

The Power Moat: Competitive Implications for the AI Giants

The strategic advantage of these nuclear deals cannot be overstated. In the current market, "power is the new hard currency." By securing dedicated nuclear capacity, the "Big Three" have effectively built a "Power Moat" that smaller AI labs and startups struggle to cross. While a startup may be able to secure a few thousand H100 GPUs, it cannot easily secure the hundreds of megawatts of firm, 24/7 power required to run them. This has led to an even greater consolidation of AI capabilities within the hyperscalers.

Microsoft, Amazon, and Google are now positioned to bypass the massive interconnection queues that plague the U.S. power grid. With over 2 terawatts of proposed generation and storage capacity currently waiting for grid access, the ability to co-locate data centers at existing nuclear sites or build dedicated SMRs allows these companies to bring new AI clusters online years faster than their competitors. This "speed-to-market" is critical as the industry moves toward "frontier" models that require exponentially more compute than GPT-4 or Gemini 1.5.

The competitive landscape is also shifting for other major players. Meta (NASDAQ: META), which initially trailed the nuclear trend, issued a massive Request for Proposals in late 2024 for up to 4 gigawatts of nuclear capacity. Meanwhile, OpenAI remains in a unique position; while it relies on Microsoft's infrastructure, its CEO, Sam Altman, has made personal bets on the nuclear sector through Oklo (NYSE: OKLO), whose board he chaired until April 2025, and through his investments in Helion Energy. This "founder-led" hedge suggests that even the leading AI research labs recognize that software breakthroughs alone are insufficient without a massive, stable energy foundation.

The Global Significance: Climate Goals and the Nuclear Revival

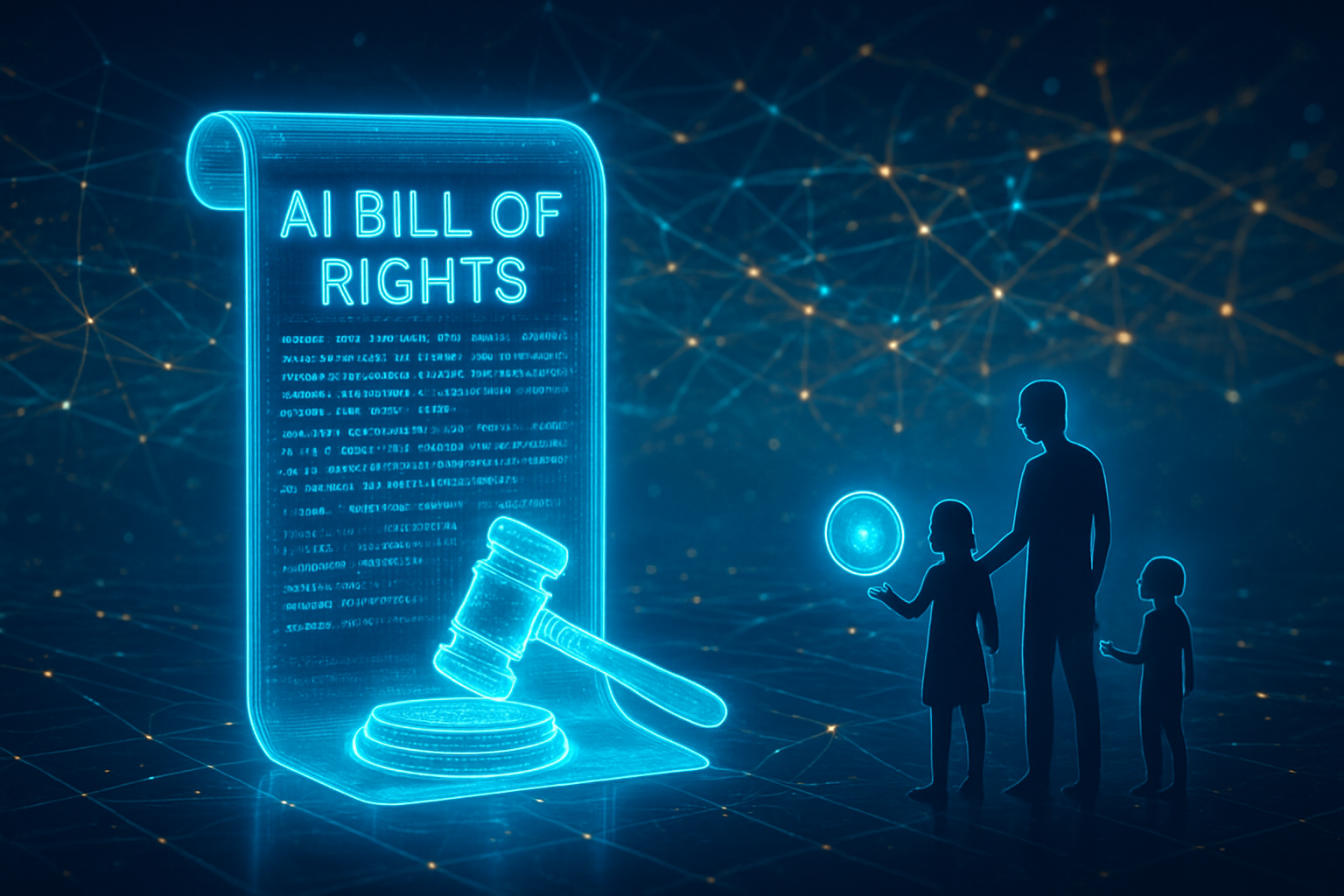

The "Nuclear Pivot" has profound implications for the global climate agenda. For years, tech companies have been the largest corporate buyers of renewable energy, but the intermittent nature of wind and solar proved insufficient for the "five-nines" (99.999%) uptime requirement of 2026-era data centers. By championing nuclear power, Big Tech is providing the financial "off-take" agreements necessary to revitalize an industry that had been in decline for decades. This has led to a surge in utility stocks, with companies like Vistra Corp (NYSE: VST) and Constellation Energy seeing record valuations.
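To put the "five-nines" figure in concrete terms, the Python sketch below converts an availability target into the maximum downtime it allows per year. It assumes a 365-day year and treats availability as a simple fraction of time; real data center service-level agreements define uptime in more detailed ways, and the helper name `max_downtime_minutes` is hypothetical.

```python
# Convert an availability target into the maximum downtime it allows per year.
# Assumption: availability is a plain fraction of a 365-day year.

MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes (non-leap year)


def max_downtime_minutes(availability: float) -> float:
    """Maximum minutes per year a system may be down at the given availability."""
    return (1 - availability) * MINUTES_PER_YEAR


targets = [("three nines", 0.999), ("four nines", 0.9999), ("five nines", 0.99999)]
for label, target in targets:
    print(f"{label} ({target:.3%}): {max_downtime_minutes(target):.1f} min/year")
```

At 99.999% availability, a facility can be down for only about 5.3 minutes per year, compared with more than eight hours a year at 99.9%.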

However, the trend is not without controversy. Environmental researchers, such as those at Hugging Face, have pointed out the inherent inefficiency of current generative AI models, and widely cited estimates suggest that a single chatbot query can consume roughly ten times the electricity of a traditional web search. There are also concerns about "grid fairness." As tech giants lock up existing nuclear capacity, energy experts warn that the resulting supply crunch could drive up electricity costs for residential and commercial consumers, leading to a "digital divide" in energy access.

Despite these concerns, the geopolitical significance of this energy shift is clear. The U.S. government has increasingly viewed AI leadership as a matter of national security. By supporting the restart of facilities like Three Mile Island and the deployment of Gen IV reactors, the tech sector is effectively subsidizing the modernization of the American energy grid, ensuring that the infrastructure for the next industrial revolution remains domestic.

The Horizon: SMRs, Fusion, and the Path to 2030

Looking ahead, the next five years will be a period of intense construction and regulatory testing. While the Three Mile Island restart provides a near-term solution for Microsoft, the long-term viability of the AI boom depends on the successful deployment of SMRs. Unlike the massive, bespoke reactors of the past, SMRs are designed to be factory-built and scaled more easily. If Kairos Power and X-energy can meet their 2030 targets, we may see a future where every major data center campus features its own dedicated modular reactor.

On the more distant horizon, the "holy grail" of energy—nuclear fusion—remains a major point of interest for AI visionaries. Companies like Helion Energy are working toward commercial-scale fusion, which would provide virtually limitless clean energy without the long-lived radioactive waste of fission. While most experts predict fusion is still decades away from powering the grid, the sheer scale of AI-driven capital currently flowing into the energy sector has accelerated R&D timelines in ways previously thought impossible.

The immediate challenge for the industry will be navigating the complex web of state and federal regulations. FERC's recent scrutiny of Amazon's co-location deals suggests that the path to "energy independence" for Big Tech will be paved with legal challenges. Companies will need to prove that their massive power draws do not compromise the reliability of the public grid or unfairly shift costs to the general public.

A New Era of Symbiosis

The nuclear pivot of 2025-2026 represents a defining moment in the history of technology. It is the moment when the digital world finally acknowledged its absolute dependence on the physical world. The symbiosis between Artificial Intelligence and Nuclear Energy is now the primary engine of innovation, with the "Big Three" leading a charge that is simultaneously reviving a legacy industry and pioneering a modular future.

As we move further into 2026, the key metrics to watch will be the progress of the Crane Clean Energy Center's restart and the first regulatory approvals for SMR site permits. The success or failure of these projects will determine not only the carbon footprint of the AI revolution but also which companies will have the "fuel" necessary to reach the next frontier of machine intelligence. In the race for AGI, the winner may not be the one with the best algorithms, but the one with the most stable reactors.

This content is intended for informational purposes only and represents analysis of current AI developments.

TokenRing AI delivers enterprise-grade solutions for multi-agent AI workflow orchestration, AI-powered development tools, and seamless remote collaboration platforms.

For more information, visit https://www.tokenring.ai/.