The Consumer Electronics Show (CES) 2026 has officially closed the chapter on AI as a high-tech parlor trick. For the past two years, the industry teased "AI PCs" that offered little more than glorified chatbots and background blur for video calls. However, this year’s showcase in Las Vegas signaled a seismic shift. The narrative has moved decisively from "algorithmic novelty"—the mere ability to run a model—to "system integration and deployment at scale," where artificial intelligence is woven into the very fabric of the silicon and the operating system.

This transition marks the moment the Neural Processing Unit (NPU) became as fundamental to a computer as the CPU or GPU. With heavyweights like Qualcomm (NASDAQ: QCOM), Intel (NASDAQ: INTC), and AMD (NASDAQ: AMD) unveiling hardware that delivers 50 to 80 TOPS (trillions of operations per second) of NPU performance, the industry is no longer just building faster computers; it is building "agentic" machines capable of proactive reasoning. The AI PC is no longer a premium niche; it is the new mainstream standard.

The Spec War: 80 TOPS and the 18A Milestone

The technical specifications revealed at CES 2026 represent a massive leap in local compute capability. Qualcomm stole the early headlines with the Snapdragon X2 Plus, whose Hexagon NPU now delivers 80 TOPS. By targeting the $800 "sweet spot" of the laptop market, Qualcomm is effectively commoditizing high-end AI. Its 3rd Generation Oryon CPU architecture claims a 35% increase in single-core performance, but the real story is efficiency: it reaches these benchmarks while consuming 43% less power than previous generations, a direct challenge to the battery life dominance of Apple (NASDAQ: AAPL).

Intel countered with its most significant manufacturing milestone in a decade: the launch of the Intel Core Ultra Series 3 (code-named Panther Lake), built on the Intel 18A process node. This is the first time Intel’s most advanced AI silicon has been manufactured using its new backside power delivery system. The Panther Lake architecture features the NPU 5, providing 50 TOPS of dedicated AI performance. When combined with the integrated Arc Xe graphics and the CPU, the total platform throughput reaches 170 TOPS. This "all-engines-on" approach allows for complex multi-modal tasks—such as real-time video translation and local code generation—to run simultaneously without thermal throttling.

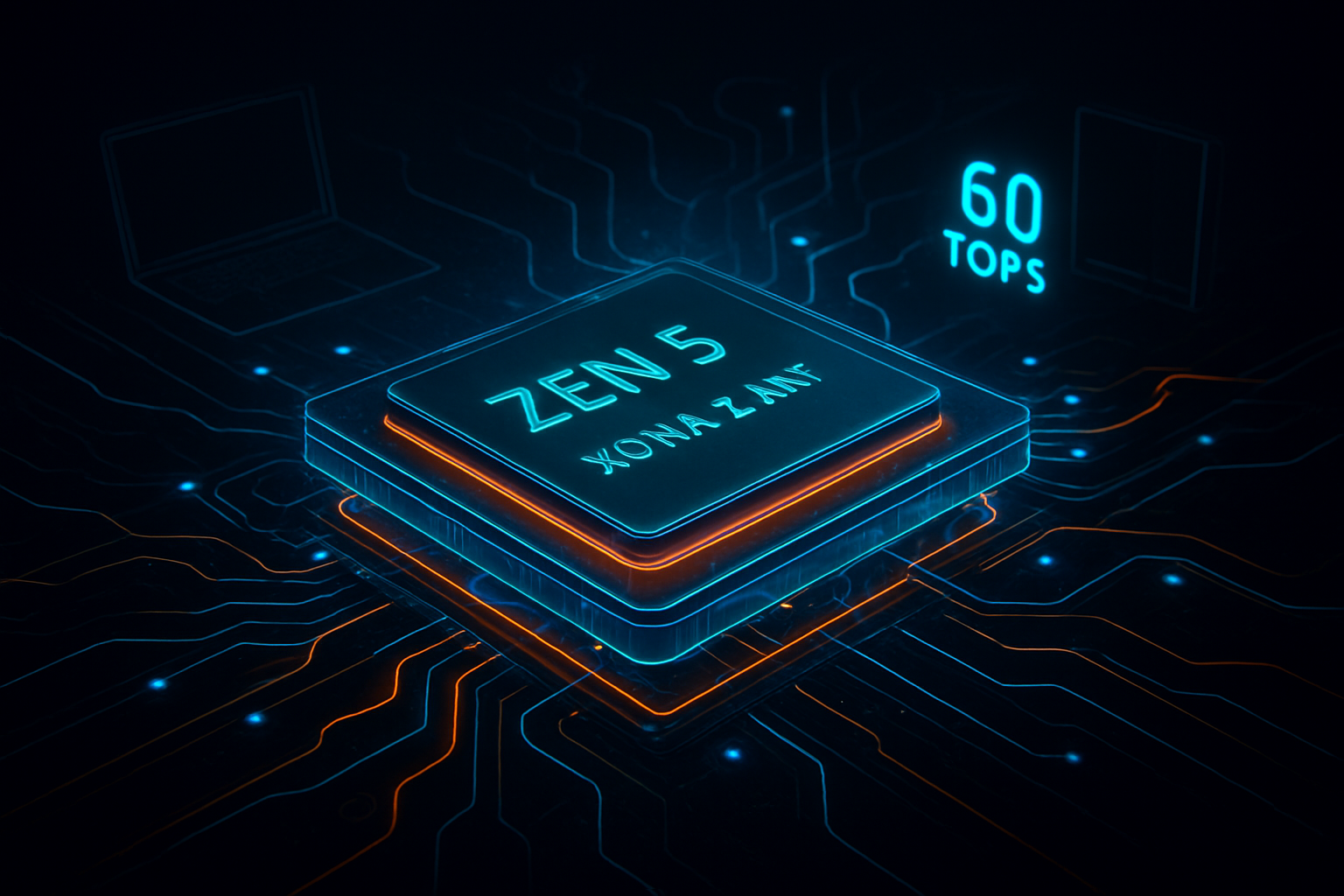

AMD, meanwhile, focused on "Structural AI" with its Ryzen AI 400 Series (Gorgon Point) and the high-end Ryzen AI Max+. The flagship Ryzen AI 9 HX 475 utilizes the XDNA 2 architecture to deliver 60 TOPS of NPU performance. AMD’s strategy is one of "AI Everywhere," ensuring that even their mid-range and workstation-class chips share the same architectural DNA. The Ryzen AI Max+ 395, boasting 16 Zen 5 cores, is specifically designed to rival the Apple M5 MacBook Pro, offering a "developer halo" for those building edge AI applications directly on their local machines.
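To put the three announcements side by side, the headline figures quoted above can be tabulated in a few lines of Python. This is only a sketch for comparison: the `ChipSpec` type and the helper names are made up for illustration, and the numbers are the vendor claims as reported in this article, not independent measurements. The only derived value is the share of Intel's 170 platform TOPS that must come from the GPU and CPU once the 50 TOPS NPU is subtracted.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class ChipSpec:
    """Headline AI figures for one chip, as quoted in the article (hypothetical type)."""
    vendor: str
    chip: str
    npu_tops: int
    # Whole-platform TOPS is only quoted for Intel in the text above.
    platform_tops: Optional[int] = None


SPECS = [
    ChipSpec("Qualcomm", "Snapdragon X2 Plus", 80),
    ChipSpec("Intel", "Core Ultra Series 3 (Panther Lake)", 50, platform_tops=170),
    ChipSpec("AMD", "Ryzen AI 9 HX 475", 60),
]


def rank_by_npu(specs: List[ChipSpec]) -> List[ChipSpec]:
    """Return the chips ordered from highest to lowest quoted NPU TOPS."""
    return sorted(specs, key=lambda s: s.npu_tops, reverse=True)


def non_npu_tops(spec: ChipSpec) -> Optional[int]:
    """Platform TOPS minus NPU TOPS: the part attributed to GPU and CPU combined."""
    if spec.platform_tops is None:
        return None
    return spec.platform_tops - spec.npu_tops


if __name__ == "__main__":
    for spec in rank_by_npu(SPECS):
        rest = non_npu_tops(spec)
        extra = f", GPU+CPU remainder: {rest} TOPS" if rest is not None else ""
        print(f"{spec.vendor:9s} {spec.chip}: NPU {spec.npu_tops} TOPS{extra}")
```

Peak TOPS figures are not directly comparable across vendors, since each may quote them at a different numeric precision, so the ranking is best read as a summary of marketing claims rather than a benchmark.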

The Shift from Chips to Ecosystems

The implications for the tech giants are profound. Intel’s announcement of over 200 OEM design wins, including flagship refreshes from Samsung (KRX: 005930) and Dell (NYSE: DELL), suggests that the x86 ecosystem has successfully navigated the threat posed by the initial "Windows on Arm" surge. By shipping its AI silicon on the 18A node, Intel is positioning itself as the "execution leader," moving away from the delays that plagued its previous generations. For major PC manufacturers, the focus has shifted from selling "speeds and feeds" to selling "outcomes," where the hardware is a vessel for autonomous AI agents.

Qualcomm’s aggressive push into the mainstream $800 price tier is a strategic gamble to break the x86 duopoly. By offering 80 TOPS in a volume-market chip, Qualcomm is forcing a competitive "arms race" that benefits consumers but puts immense pressure on margins for legacy chipmakers. This development also creates a massive opportunity for software startups. With a high-performance NPU baseline of 50 TOPS or more across millions of new laptops, the barrier to entry for "NPU-native" software has dropped sharply. We are likely to see a wave of startups focused on "Agentic Orchestration": software that uses the NPU to manage a user’s entire digital life, from scheduling to automated document synthesis, without ever sending data to the cloud.

From Reactive Prompts to Proactive Agents

The wider significance of CES 2026 lies in the death of the "prompt." For the last few years, AI interaction was reactive: a user typed a query, and the AI responded. The hardware showcased this year enables "Agentic AI," where the system is "always-aware." Through features like Copilot Vision and proactive system monitoring, these PCs can anticipate user needs. If you are researching a flight, the NPU can locally parse your calendar, budget, and preferences to suggest a booking before you even ask.

This shift mirrors the transition from the "dial-up" era to the "always-on" broadband era. It marks the end of AI as a separate application and the beginning of AI as a system-level service. However, this "always-aware" capability brings significant privacy concerns. While the industry touts "local processing" as a privacy win—keeping data off corporate servers—the sheer amount of personal data being processed by local NPUs creates a new surface area for security vulnerabilities. The industry is moving toward a world where the OS is no longer just a file manager, but a cognitive layer that understands the context of everything on your screen.

The Horizon: Autonomous Workflows and the End of "Apps"

Looking ahead, the next 18 to 24 months will likely see the erosion of the traditional "application" model. As NPUs become more powerful, we expect to see the rise of "cross-app autonomous workflows." Instead of opening Excel to run a macro or Word to draft a memo, users will interact with a unified agentic interface that leverages the NPU to execute tasks across multiple software suites simultaneously. Experts predict that by 2027, the "AI PC" label will be retired simply because there will be no other kind of PC.

The immediate challenge remains software optimization. While the hardware now reaches up to 80 TOPS on the NPU, many current applications are still optimized for legacy CPU and GPU code paths. The "Developer Halo" period is now in full swing, as companies like Microsoft and Adobe race to rewrite their core engines to take full advantage of the NPU. We are also watching for the emergence of "Small Language Models" (SLMs) specifically tuned for these new chips, which will allow for high-reasoning capabilities with a fraction of the memory footprint of GPT-4.
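The memory argument for SLMs comes down to simple arithmetic: the weights of a model occupy roughly the parameter count times the bits stored per weight. The sketch below illustrates that estimate. The 3-billion-parameter model size and the 16-bit and 4-bit precisions are assumptions chosen for illustration, not figures from any vendor. The estimate also covers weights only and ignores activations, caches, and runtime overhead.

```python
def weight_footprint_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate size of a model's weights in decimal gigabytes (1 GB = 1e9 bytes)."""
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    # Hypothetical small language model: 3 billion parameters (illustrative assumption).
    params = 3e9
    for bits in (16, 4):
        size = weight_footprint_gb(params, bits)
        print(f"{bits:2d}-bit weights: about {size:.1f} GB")
```

Under these assumptions, dropping from 16-bit to 4-bit weights cuts the weight footprint by a factor of four, which is the kind of reduction that makes a model practical within a laptop's memory budget.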

A New Era of Personal Computing

CES 2026 will be remembered as the moment the AI PC became a reality for the masses. The transition from "algorithmic novelty" to "system integration and deployment at scale" is more than a marketing slogan; it is a fundamental re-architecting of how humans interact with machines. With Qualcomm, Intel, and AMD all delivering high-performance NPU silicon across their entire portfolios, the hardware foundation for the next decade of computing has been laid.

The key takeaway is that the "AI PC" is no longer a promise of the future—it is a shipping product in the present. As these 170-TOPS-capable machines begin to populate offices and homes over the coming months, the focus will shift from the silicon to the soul of the machine: the agents that inhabit it. The industry has built the brain; now, we wait to see what it decides to do.
