Artificial Intelligence (AI) is rapidly emerging as a pivotal force in the global effort to combat environmental degradation and foster sustainability. From the depths of the ocean to the upper atmosphere, AI is changing how we monitor, understand, and respond to ecological challenges. Its immediate significance lies in its ability to process and analyze immense, complex datasets in real time, providing actionable insights and predictive capabilities that traditional methods could not deliver. This enables a proactive approach to environmental protection: rather than reacting after the fact, practitioners can anticipate and mitigate threats before they escalate.

The integration of AI into environmental monitoring and solutions is not merely an incremental improvement; it represents a paradigm shift. By leveraging machine learning, computer vision, and advanced analytics, AI systems can detect subtle patterns, forecast future environmental conditions, and automate labor-intensive tasks with remarkable precision and efficiency. This transformative power is particularly crucial in marine conservation, where vast, often inaccessible environments demand sophisticated tools to protect biodiversity, combat pollution, and manage precious resources. The insights gleaned from AI are empowering scientists, conservationists, and policymakers to make more informed and effective decisions, paving the way for a more resilient and sustainable future for our planet.

Technical Leaps: AI's Precision in Environmental and Marine Stewardship

The technical advancements driving AI's role in environmental monitoring and marine conservation are sophisticated and multifaceted, marking a significant departure from conventional approaches. At its core, this revolution is powered by Machine Learning and Deep Learning, which enable systems to analyze vast environmental datasets from diverse sources (satellites, drones, underwater cameras, acoustic sensors, and IoT devices) to identify intricate patterns and make accurate predictions. Computer Vision and Image Recognition algorithms, often built on deep neural networks, are particularly transformative. For instance, object-detection models such as YOLO (You Only Look Once) are trained on extensive image and video datasets to automatically identify and classify marine species, track endangered animals, estimate population sizes, and even detect specific behaviors in footage from underwater cameras and drones. This contrasts sharply with traditional manual surveys or visual identification, which are labor-intensive, prone to human error, and limited in scale.
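To make the detection step more concrete, here is a minimal sketch of the post-processing that commonly follows an object detector: discard low-confidence boxes, suppress overlapping duplicates of the same class (non-maximum suppression), and count what remains per species. It does not call YOLO or any real library; the labels, scores, boxes, and thresholds are all made up for illustration, and a production system would tune or replace every one of them.

```python
from collections import Counter

# Each detection is (species_label, confidence, (x1, y1, x2, y2)) in pixels.
# Labels, scores, and boxes are hypothetical, not output from a real model.

def iou(a, b):
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def filter_detections(detections, min_conf=0.5, iou_thresh=0.5):
    """Drop low-confidence boxes, then apply greedy per-class NMS."""
    candidates = sorted(
        (d for d in detections if d[1] >= min_conf),
        key=lambda d: d[1],
        reverse=True,
    )
    kept = []
    for det in candidates:
        label, _, box = det
        # Keep the box only if it does not heavily overlap a stronger
        # box of the same species that was already kept.
        if all(k[0] != label or iou(k[2], box) < iou_thresh for k in kept):
            kept.append(det)
    return kept

def count_species(detections):
    """Count surviving detections per species label."""
    return Counter(label for label, _, _ in detections)

frame = [
    ("green_turtle", 0.92, (100, 100, 200, 180)),
    ("green_turtle", 0.85, (105, 102, 205, 184)),  # near-duplicate box
    ("green_turtle", 0.40, (400, 300, 450, 340)),  # below min_conf
    ("reef_shark", 0.77, (300, 50, 420, 110)),
]
counts = count_species(filter_detections(frame))
print(dict(counts))
```

With these inputs the duplicate turtle box and the low-confidence box are both discarded, so the frame yields one turtle and one shark. Counting per frame is only a starting point; estimating a population would also need tracking across frames so the same animal is not counted repeatedly.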

Beyond visual analysis, Acoustic Monitoring utilizes AI to analyze ocean soundscapes, identifying the clicks of dolphins, songs of whales, or even the calls of endangered species like the vaquita, providing crucial insights into population health and distribution. AI also powers Predictive Modeling and Forecasting, analyzing historical data to anticipate future environmental events with higher accuracy. This includes forecasting ocean temperature changes, sea-level rises, extreme weather events, harmful algal blooms, and even the migration patterns of fish populations, which is vital for sustainable fisheries management. Traditional models often rely on simpler statistical methods and struggle with the complexity and volume of real-world environmental data, offering less precise and timely predictions.
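As a rough illustration of the acoustic side, the sketch below flags frames of a recording that contain energy at one target frequency, using the classical Goertzel algorithm. This is a signal-processing baseline rather than a learned model, and it is not the method of any system mentioned here; real pipelines typically feed spectrograms to trained classifiers. The sample rate, frequencies, frame length, and threshold are assumptions chosen to keep the example small.

```python
import math

def goertzel_power(samples, sample_rate, target_hz):
    """Squared magnitude of the DFT bin nearest target_hz (Goertzel)."""
    n = len(samples)
    k = round(n * target_hz / sample_rate)
    coeff = 2.0 * math.cos(2.0 * math.pi * k / n)
    s_prev, s_prev2 = 0.0, 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev2 ** 2 + s_prev ** 2 - coeff * s_prev * s_prev2

def detect_tone_frames(signal, sample_rate, target_hz, frame_len, threshold):
    """Return indices of frames whose normalized power exceeds threshold."""
    hits = []
    for i in range(len(signal) // frame_len):
        frame = signal[i * frame_len:(i + 1) * frame_len]
        # Dividing by frame_len**2 makes a unit-amplitude tone score ~0.25.
        power = goertzel_power(frame, sample_rate, target_hz) / frame_len ** 2
        if power > threshold:
            hits.append(i)
    return hits

# Synthetic recording (hypothetical): constant 300 Hz hum in every frame,
# plus a 1000 Hz "call" present only in the middle frame.
RATE, FRAME = 8000, 800
signal = []
for i in range(3 * FRAME):
    sample = 0.5 * math.sin(2 * math.pi * 300 * i / RATE)
    if FRAME <= i < 2 * FRAME:
        sample += math.sin(2 * math.pi * 1000 * i / RATE)
    signal.append(sample)

call_frames = detect_tone_frames(signal, RATE, 1000, FRAME, threshold=0.1)
print(call_frames)
```

Only the middle frame is flagged, because the hum sits in a different frequency bin. Actual dolphin clicks and whale songs occupy very different, much broader bands, so a real detector would look at many bins at once.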

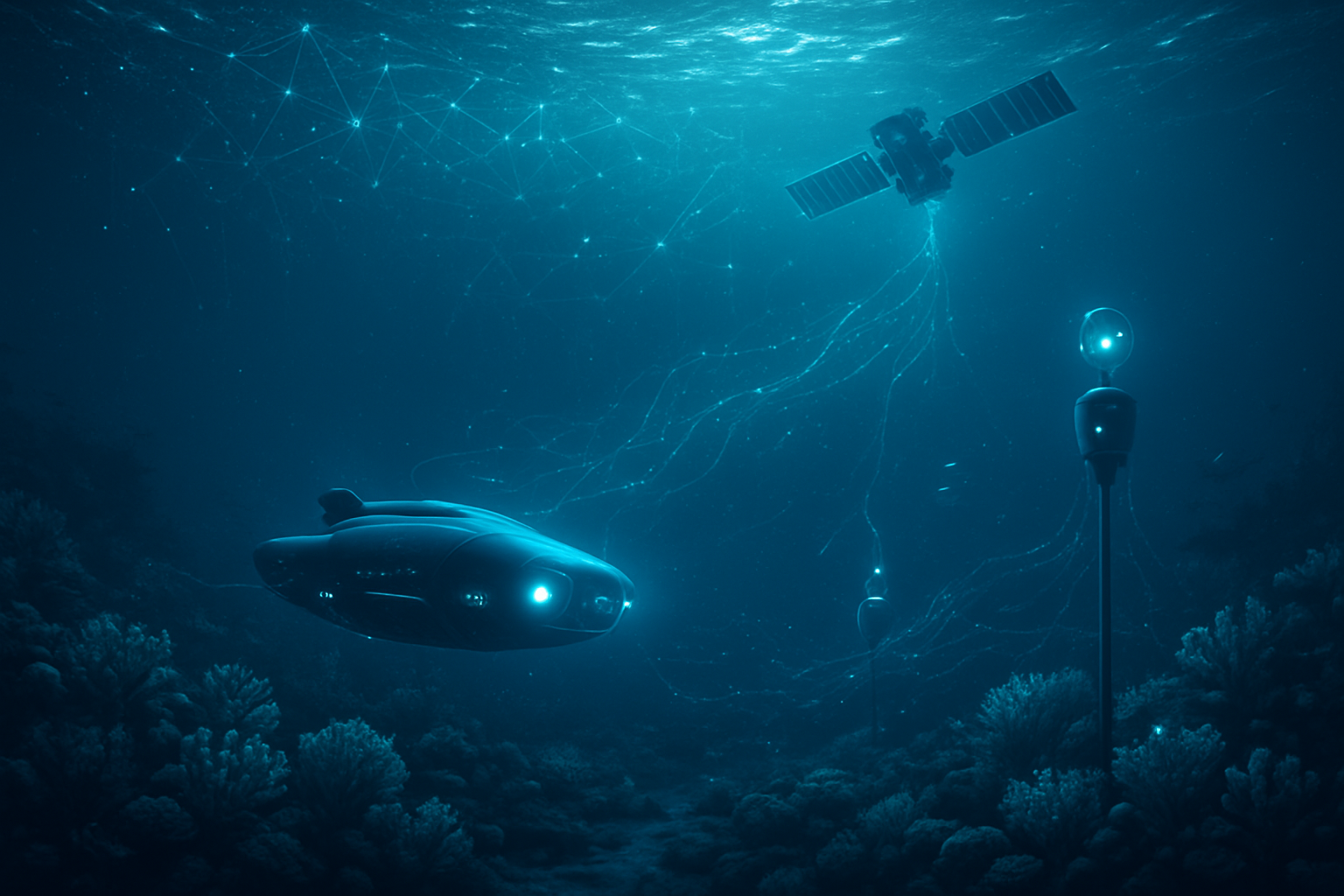

The deployment of Autonomous Underwater Vehicles (AUVs) and Marine Robots, equipped with AI, represents another significant technical leap. These systems can explore previously inaccessible deep-sea areas, map the ocean floor, collect high-resolution images, and gather data on water quality, species movement, and ecosystem changes continuously and in real time, reducing human risk and expanding the scope of monitoring. Furthermore, AI-powered analysis of Environmental DNA (eDNA) offers a non-intrusive and comprehensive way to monitor aquatic biodiversity, detecting species even when they are rare or elusive. The initial reaction from the AI research community and industry experts has been overwhelmingly positive, hailing AI as a "game-changer" with "unparalleled capabilities," while also emphasizing the critical need for ethical considerations, human oversight, and sustainable practices to manage the technology's own environmental footprint.

Corporate Currents: Navigating the AI-Powered Green Economy

The burgeoning field of AI in environmental monitoring and solutions, particularly marine conservation, is creating significant opportunities and competitive shifts across AI companies, tech giants, and startups. The market is projected to grow substantially, making it an attractive new frontier for both established players and newcomers.

Tech giants like Microsoft (NASDAQ: MSFT), Google (NASDAQ: GOOGL), and Amazon (NASDAQ: AMZN) are strategically positioning themselves as leaders. They leverage their vast cloud infrastructure (AWS, Azure, Google Cloud), extensive R&D capabilities, and global reach to offer comprehensive AI-driven environmental intelligence platforms. Microsoft's "AI for Good Lab" actively monitors animal behavior and analyzes satellite images for biodiversity protection, while Google's DeepMind has used AI to significantly reduce data center cooling energy consumption. Amazon applies AI to reduce packaging waste and monitor deforestation. These companies benefit from their ability to integrate AI solutions across diverse sectors, offering scalable services to governments, NGOs, and corporations, thereby setting industry standards and driving widespread adoption. Their existing client relationships and market penetration provide a significant competitive advantage, though their large-scale operations also face scrutiny regarding their own energy and water consumption.

Specialized AI companies, startups, and nonprofits are also making significant inroads, often focusing on niche, high-impact problems. Companies like C3.ai (NYSE: AI) provide enterprise AI solutions for sustainability, while organizations such as The Ocean Cleanup (a nonprofit) use AI to track and collect plastic pollution. Others, like Pelagic Data Systems (private) and OceanMind (a nonprofit), are deploying AI to combat illegal fishing by analyzing satellite and vessel tracking data. These agile innovators thrive on deep domain expertise and proprietary algorithms tailored to specific environmental challenges, giving them an edge in specialized markets. While they may not have the resources of tech giants, their focused innovation can lead to groundbreaking solutions that disrupt traditional methods. Many benefit from partnerships with larger entities or dedicated environmental funds, allowing them to scale their impact.
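One simple signal that vessel-tracking analysis can surface is a long silence between position reports (for example from AIS transponders), which may indicate a vessel has switched off its tracker. The sketch below is a hypothetical rule-based baseline, not the proprietary approach of any organization named above; the six-hour threshold and the timestamps are invented, and a flagged gap is only a prompt for human review, since gaps also arise from poor reception.

```python
from datetime import datetime, timedelta

def find_reporting_gaps(timestamps, max_gap=timedelta(hours=6)):
    """Return (start, end) pairs where consecutive reports are too far apart."""
    ordered = sorted(timestamps)
    return [
        (earlier, later)
        for earlier, later in zip(ordered, ordered[1:])
        if later - earlier > max_gap
    ]

# Hypothetical position-report times for one vessel.
reports = [
    datetime(2024, 3, 1, 0, 0),
    datetime(2024, 3, 1, 1, 0),
    datetime(2024, 3, 1, 2, 30),
    datetime(2024, 3, 1, 14, 0),   # 11.5 hours after the previous report
    datetime(2024, 3, 1, 15, 0),
]

gaps = find_reporting_gaps(reports)
for start, end in gaps:
    print(f"No reports from {start} to {end} ({end - start})")
```

Machine-learning approaches typically combine many such features (speed, heading changes, proximity to protected areas) rather than relying on a single threshold.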

The competitive landscape is dynamic, with a "digital arms race" emerging where both conservation efforts and, paradoxically, resource extraction industries (e.g., optimized fishing) are leveraging AI. Companies that prioritize "Green AI" – minimizing AI's own environmental footprint – and ethical AI practices will gain a strategic advantage, appealing to a growing market of environmentally conscious clients and regulators. The ability to offer transparent, accurate, and scalable solutions for carbon accounting, emissions tracking, and environmental compliance provides a significant market differentiator. Ultimately, the companies that can effectively combine technological prowess with a genuine commitment to sustainability and ethical deployment will be best positioned to thrive in this evolving green economy.

Broader Horizons: AI's Role in the Global Environmental Narrative

AI's expanding role in environmental monitoring and solutions, particularly in marine conservation, signifies a critical juncture in the broader AI landscape. It represents a potent manifestation of the "AI for Good" movement, where advanced computational power is explicitly leveraged to address humanity's most pressing global challenges. This integration aligns perfectly with the overarching trend of Big Data and Advanced Analytics, as AI provides the essential tools to process the exponentially growing volume of environmental data from satellites, drones, and IoT sensors, transforming raw information into actionable intelligence. The shift towards real-time monitoring and predictive modeling is paramount, allowing for proactive interventions rather than reactive responses, a fundamental change in how we approach conservation.

The impacts are overwhelmingly positive, offering enhanced accuracy and efficiency in data analysis, real-time insights for rapid response, and unprecedented scalability for monitoring vast and remote ecosystems. For instance, AI's ability to identify species, track populations, and monitor habitat health in the vastness of the ocean far surpasses the limitations of human-intensive methods. This leads to informed decision-making for scientists, conservationists, and policymakers, enabling more effective resource management and policy development. From early efforts in land cover classification to today's sophisticated deep learning models that predict climate change impacts and track illegal fishing, AI has evolved from a descriptive tool to a truly predictive and integrated system.

However, this transformative potential is not without its concerns. A significant paradox lies in the environmental footprint of AI itself. Training and operating large-scale AI models demand substantial computational power, leading to considerable electricity consumption and associated carbon emissions. This raises critical questions about whether the environmental benefits outweigh the computational costs, especially if data centers rely on non-renewable energy sources or contribute to thermal pollution by using seawater for cooling. Furthermore, the rapid obsolescence of AI hardware contributes to electronic waste, which can introduce toxins into ecosystems if not properly managed.
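A common first-order way to reason about this footprint is to multiply hardware power draw by run time and a data-center overhead factor (PUE, power usage effectiveness), then by the carbon intensity of the local grid. The sketch below applies that arithmetic; every number in it is a placeholder assumption, not a measurement of any real model or facility.

```python
def training_emissions_kg(num_devices, avg_power_kw, hours, pue, grid_kg_per_kwh):
    """Estimate energy (kWh) and emissions (kg CO2e) for a compute job.

    energy    = devices * average power per device * hours * PUE
    emissions = energy * grid carbon intensity
    """
    energy_kwh = num_devices * avg_power_kw * hours * pue
    return energy_kwh, energy_kwh * grid_kg_per_kwh

# Hypothetical job: 8 accelerators at 0.3 kW each for 100 hours,
# in a facility with PUE 1.5 on a grid emitting 0.4 kg CO2e per kWh.
energy, emissions = training_emissions_kg(8, 0.3, 100, 1.5, 0.4)
print(f"{energy:.0f} kWh, {emissions:.0f} kg CO2e")
```

For these made-up inputs the estimate is roughly 360 kWh and 144 kg CO2e. The same job on a low-carbon grid would score far lower, which is why the energy source matters as much as the model size. The estimate ignores hardware manufacturing, water use, and e-waste.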

Other concerns include data and algorithmic bias: AI systems that learn from skewed historical data might inadvertently prioritize certain species or ecosystems over others, leading to misallocation of limited conservation resources. There are also ethical considerations around automation bias and the potential for deskilling if over-reliance on AI diminishes the role of human judgment and traditional ecological knowledge. Data governance and privacy are crucial as well, particularly when collecting vast datasets on sensitive ecological information or in territorial waters. These concerns accompany a real shift: AI has moved from rudimentary pattern recognition to integrated, autonomous, and predictive systems, and it is now a fundamental component of our strategy to understand and protect the natural world.

The Horizon Ahead: Charting AI's Future in Environmental Stewardship

The trajectory of AI in environmental monitoring and solutions, particularly marine conservation, points towards a future of unprecedented precision, integration, and proactive management. In the near term, we can expect significant advancements in enhanced monitoring and data analysis, with AI-powered underwater drones, cameras, and acoustic sensors providing real-time tracking and identification of marine species, movements, and behaviors. This will lead to more refined predictive analytics for ecosystem health, allowing algorithms to forecast coral bleaching events weeks or months in advance, identify harmful algal blooms, and anticipate species migration patterns with remarkable accuracy. The development of smart conservation tools, such as AI-powered nets that reduce bycatch and electronic monitoring systems for fishing vessels, will become more widespread, ensuring greater compliance and sustainability. Furthermore, the integration of Edge Computing will enable real-time data processing and decision-making directly on remote sensors or drones, drastically reducing latency and improving response times for critical environmental interventions.
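The edge-computing idea can be sketched as a small filter that runs on the sensor itself and transmits only readings that deviate sharply from recent history, instead of streaming everything to shore. The class below uses a rolling z-score for that decision; the class name, window size, threshold, and temperature values are all hypothetical, and a deployed sensor might run a compact learned model in place of this statistic.

```python
from collections import deque
import statistics

class EdgeAnomalyFilter:
    """Decide on-device whether a reading is unusual enough to transmit."""

    def __init__(self, window=10, z_thresh=3.0):
        self.history = deque(maxlen=window)  # most recent readings only
        self.z_thresh = z_thresh

    def should_transmit(self, reading):
        alert = False
        # Only judge readings once the window is full.
        if len(self.history) == self.history.maxlen:
            mean = statistics.fmean(self.history)
            std = statistics.pstdev(self.history)
            if std > 0 and abs(reading - mean) / std > self.z_thresh:
                alert = True
        self.history.append(reading)
        return alert

# Hypothetical sea-surface temperatures (deg C) with one sudden spike.
temps = [27.0, 27.1, 26.9, 27.0, 27.2, 26.8, 27.1, 27.0, 26.9, 27.0,
         27.1, 29.5, 27.0]

edge = EdgeAnomalyFilter(window=10, z_thresh=3.0)
sent = [(i, t) for i, t in enumerate(temps) if edge.should_transmit(t)]
print(sent)
```

Out of thirteen readings only the 29.5 spike is transmitted, which is the bandwidth and latency saving the paragraph describes. One design trade-off is visible here: the spike is added to the history, which widens the window's spread and makes the filter less sensitive for the next few readings.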

Looking to the long term, the vision includes the creation of "digital twins" of entire ecosystems, such as the Great Barrier Reef. These sophisticated AI models will simulate the cascading effects of environmental changes, predicting vulnerabilities and allowing for highly targeted interventions. Advanced climate modeling will become hyper-accurate, processing data from millions of global sensors to provide reliable projections of future climate scenarios. The seamless integration of AI with emerging technologies like quantum computing and bio-inspired soft robotics will unlock even more precise predictions and enable delicate interactions with marine environments. Experts predict a shift towards global collaborative AI platforms that democratize access to marine conservation tools, fostering a shared, data-driven approach to ocean protection.

Despite this immense potential, several challenges need to be addressed. The persistent issues of data quality and availability remain paramount, as AI models are only as good as the data they are trained on. The high cost and specialized expertise required for AI deployment can be barriers, particularly for smaller organizations and developing nations. The energy consumption of AI itself, with its significant carbon footprint, presents an ethical paradox that demands the development of more energy-efficient algorithms and hardware. Furthermore, establishing ethical and regulatory frameworks is crucial to address concerns around data privacy, algorithmic bias, and ensuring that AI augments, rather than replaces, human expertise and traditional ecological knowledge. Experts predict a fundamental shift towards proactive conservation, where AI acts as a "new sensory layer for the ocean," enabling continuous learning and adaptation, and ultimately leading to more sophisticated and automated decision-making across a broader range of environmental applications.

A New Era for Earth's Protectors: AI's Enduring Legacy

The integration of Artificial Intelligence into environmental monitoring and marine conservation marks a pivotal moment in our collective efforts to safeguard the planet. This technological revolution has fundamentally reshaped our capacity to understand, predict, and respond to ecological challenges, moving us from a reactive stance to one of proactive, data-driven stewardship. The key takeaway is clear: AI provides unprecedented tools for real-time, comprehensive monitoring and analysis, enabling targeted interventions that were previously unimaginable. From identifying endangered marine species and tracking illegal fishing to forecasting climate impacts and optimizing resource management, AI is proving to be an indispensable ally in the fight for a sustainable future.

This development holds immense significance in the broader history of AI. It signifies the maturation of AI beyond purely commercial or entertainment applications, positioning it as a vital instrument for addressing "grand challenges" that threaten global well-being. By harnessing the power of deep learning, computer vision, and vast sensor networks, AI has transformed environmental science from a "data-rich but insight-poor" discipline into one capable of generating timely, actionable intelligence. This evolution, building on decades of computational advancements, underscores AI's growing role as a guardian of our natural world, offering a scalable and efficient pathway toward a healthier planet.

The long-term impact of AI in environmental conservation is poised to be profound. It promises greater accuracy, efficiency, and scalability in our efforts, leading to more effective conservation outcomes and informing smarter environmental policies. However, this transformative potential is inextricably linked with the need for responsible and ethical deployment. Addressing the environmental footprint of AI, ensuring data quality and mitigating algorithmic bias, and upholding the irreplaceable value of human judgment and traditional ecological knowledge will be crucial for its sustained success. The future demands ongoing interdisciplinary collaboration and a steadfast commitment to innovation that prioritizes both technological advancement and ecological integrity.

In the coming weeks and months, we should watch for increasingly integrated and smarter AI systems that provide a holistic, real-time picture of Earth's health. Expect further advancements in autonomous technologies, refined predictive analytics, and the widespread adoption of ethical AI frameworks that guide responsible development. Concrete examples, such as the AI-leveraged Eastern Tropical Pacific Marine Conservation Corridor, will serve as models for global initiatives, influencing international policy and governance. As AI continues to mature, its role as a vital ally in protecting our planet's invaluable ecosystems will only grow, demanding our ongoing attention and a collective commitment to leveraging this powerful technology for the greater good.
