In a definitive moment for the American semiconductor industry, Intel (NASDAQ: INTC) has officially transitioned its ambitious 18A (1.8nm-class) process node into high-volume manufacturing as of January 2026. This milestone marks the culmination of the "five nodes in four years" roadmap launched under former CEO Pat Gelsinger, a high-stakes strategy designed to restore the company’s manufacturing leadership after years of surrendering ground to Asian rivals. With the commercial launch of the Panther Lake consumer processors at CES 2026 and the imminent arrival of the Clearwater Forest server lineup, Intel has moved from the defensive to the offensive, signaling a major shift in the global balance of silicon power.

The immediate significance of the 18A node extends far beyond Intel’s internal product catalog. It represents the first time in over a decade that a U.S.-based foundry has achieved a perceived technological "leapfrog" over competitors in transistor architecture and power delivery. By being the first to deploy advanced gate-all-around (GAA) transistors alongside groundbreaking backside power delivery at scale, Intel is positioning itself not just as a chipmaker, but as a "systems foundry" capable of meeting the voracious computational demands of the generative AI era.

The Technical Trifecta: RibbonFET, PowerVia, and High-NA EUV

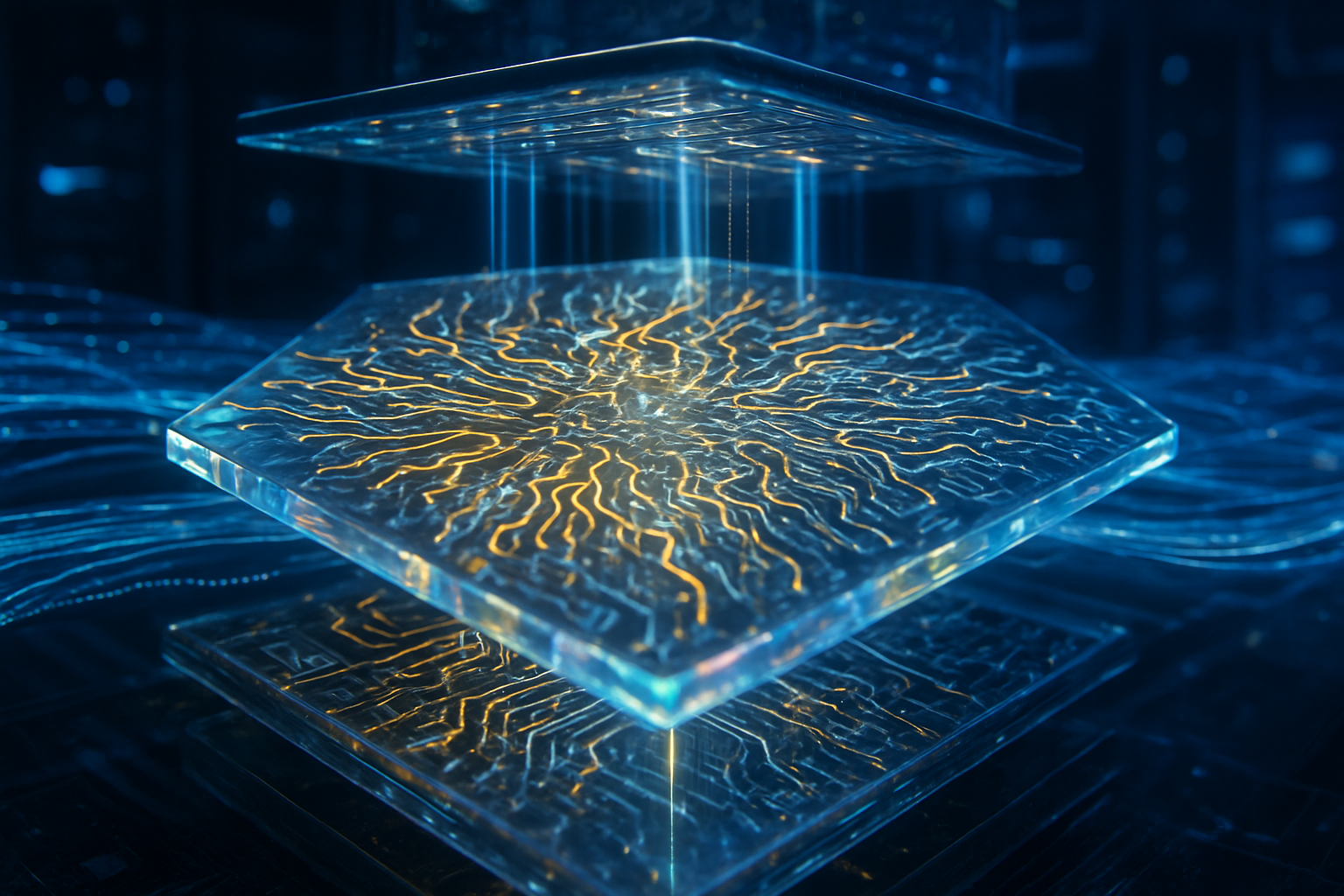

The 18A node’s success is built upon a "technical trifecta" that differentiates it from previous FinFET-based generations. At the heart of the node is RibbonFET, Intel’s implementation of GAA architecture. RibbonFET replaces the traditional FinFET design by surrounding the transistor channel on all four sides with a gate, allowing for finer control over current and significantly reducing leakage. According to early benchmarks from the Panther Lake "Core Ultra Series 3" mobile chips, this architecture provides a 15% frequency boost and a 25% reduction in power consumption compared to the preceding Intel 3-based models.

Complementing RibbonFET is PowerVia, the industry’s first implementation of backside power delivery. In traditional chip design, power and signal lines share the same crowded "forest" of wiring above the transistor layer. PowerVia decouples the two by moving power delivery to the back of the wafer. This relieves the wiring congestion that has plagued chip designers for years, contributing to a reported 30% improvement in chip density and allowing for more efficient power routing to the most demanding parts of the processor.

Perhaps most critically, Intel has secured a strategic advantage through its early adoption of ASML (NASDAQ: ASML) High-Numerical Aperture (High-NA) Extreme Ultraviolet (EUV) lithography machines. While the base 18A node was developed using standard 0.33 NA EUV, Intel has integrated the newer Twinscan EXE:5200B High-NA tools for critical layers in its 18A-P (Performance) variants. These machines, which cost upwards of $380 million each, raise the numerical aperture from 0.33 to 0.55, which shrinks the minimum printable feature size by roughly 1.7x. By mastering High-NA tools now, Intel is effectively "de-risking" the upcoming 14A (1.4nm) node, which is slated to be the world’s first node designed entirely around High-NA lithography.
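The 1.7x figure follows from the Rayleigh criterion, which puts the smallest printable feature at roughly k1 × wavelength / NA. The short Python sketch below works through that arithmetic. It assumes the 13.5 nm EUV wavelength and the 0.55 NA of High-NA tools, and it uses an illustrative process factor of k1 = 0.3 that does not come from Intel or ASML; the function name is likewise hypothetical.

```python
# Rough Rayleigh-criterion estimate: CD = k1 * wavelength / NA.
# k1 = 0.3 is an illustrative assumption, not a published Intel or ASML value.

EUV_WAVELENGTH_NM = 13.5  # wavelength of EUV light, in nanometers


def min_feature_nm(numerical_aperture: float, k1: float = 0.3) -> float:
    """Approximate smallest printable feature size, in nanometers."""
    return k1 * EUV_WAVELENGTH_NM / numerical_aperture


standard = min_feature_nm(0.33)  # standard EUV
high_na = min_feature_nm(0.55)   # High-NA EUV
ratio = standard / high_na       # k1 and wavelength cancel out

print(f"0.33 NA: {standard:.1f} nm")
print(f"0.55 NA: {high_na:.1f} nm")
print(f"Shrink factor: {ratio:.2f}x")
```

Because k1 and the wavelength cancel, the shrink factor depends only on the ratio of the two apertures (0.55 / 0.33, or about 1.67), whatever process factor a given fab actually achieves.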

A New Power Dynamic: Microsoft, TSMC, and the Foundry Wars

The arrival of 18A has sent ripples through the corporate landscape, most notably through the validation of Intel Foundry’s business model. Microsoft (NASDAQ: MSFT) has emerged as the node’s most prominent advocate, having committed to a $15 billion lifetime deal to manufacture custom silicon—including its Azure Maia 3 AI accelerators—on the 18A process. This partnership is a direct challenge to the dominance of TSMC (NYSE: TSM), which has long been the exclusive manufacturing partner for the world’s most advanced AI chips.

While TSMC remains the volume leader with its N2 (2nm) node, the Taiwanese giant has taken a more conservative approach, opting to delay the adoption of High-NA EUV until at least 2027. This has created a "technology gap" that Intel is exploiting to attract high-profile clients. Industry insiders suggest that Apple (NASDAQ: AAPL) has begun exploring 18A for specific performance-critical components in its 2027 product line, while Nvidia (NASDAQ: NVDA) is reportedly in discussions regarding Intel’s advanced 2.5D and 3D packaging capabilities to augment its existing supply chains.

The competitive implications are stark: Intel is no longer just competing on clock speeds; it is competing on the very physics of how chips are built. For startups and AI labs, the emergence of a viable second source for leading-edge silicon could alleviate the supply bottlenecks that have defined the AI boom. By offering a "Systems Foundry" approach—combining 18A logic with Foveros packaging and open-standard interconnects—Intel is attempting to provide a turnkey solution for companies that want to move away from off-the-shelf hardware and toward bespoke, application-specific AI silicon.

The "Angstrom Era" and the Rise of Sovereign AI

The launch of 18A is the opening salvo of the "Angstrom Era," a period in which process nodes are named in angstroms (1 angstrom = 0.1 nanometers, so 18A corresponds to 1.8nm). This technological shift coincides with a broader geopolitical trend: the rise of "Sovereign AI." As nations and corporations grow wary of centralized cloud dependencies and sensitive data leaks, the demand for on-device AI has surged. Intel’s Panther Lake is a direct response to this, featuring an NPU (Neural Processing Unit) capable of 55 TOPS (Trillions of Operations Per Second) and a total platform throughput of 180 TOPS when paired with its Xe3 "Celestial" integrated graphics.

This development is fundamental to the "AI PC" transition. AI-capable PCs are expected to account for nearly 60% of all global shipments in early 2026. The 18A node’s efficiency gains allow high-performance AI tasks, such as local LLM (Large Language Model) reasoning and real-time agentic automation, to run on thin-and-light laptops without sacrificing battery life. This mirrors the industry's shift away from cloud-only AI toward a hybrid model where sensitive "reasoning" happens locally, secured by Intel's hardware-level protections.

However, the rapid advancement is not without concerns. The immense cost of 18A development and High-NA adoption has led to a bifurcated market. While Intel and TSMC race toward the sub-1nm horizon, smaller players like Samsung (KRX: 005930) face increasing pressure to keep pace. Furthermore, the environmental impact of such energy-intensive manufacturing processes remains a point of scrutiny, even as the chips themselves become more power-efficient.

Looking Ahead: From 18A to 14A and Beyond

The roadmap beyond 18A is already coming into focus. Intel’s D1X facility in Oregon is currently piloting the 14A (1.4nm) node, which will be the first to fully utilize the throughput of the High-NA EXE:5200B machines. Experts predict that 14A will deliver a further 15% performance-per-watt improvement, potentially arriving by late 2027. Intel is also expected to lean into glass substrates, a packaging material that could replace organic substrates to enable even higher interconnect density and better thermal management for massive AI "superchips."

In the near term, the focus remains on the rollout of Clearwater Forest, Intel’s 18A-based server CPU. Designed with up to 288 E-cores, it aims to reclaim the data center market from AMD (NASDAQ: AMD) and from the Arm-based chips designed in-house by Amazon (NASDAQ: AMZN). The challenge for Intel will be maintaining the yield rates of these complex multi-die designs. While 18A yields are currently reported to be in the healthy 70% range, the complexity of 3D-stacked chips remains a significant hurdle for consistent high-volume delivery.
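To see why multi-die designs put pressure on yield, consider a deliberately simplified model in which every die in a package must be good and no die is tested before assembly. The Python sketch below multiplies per-die yields under that assumption. The 70% figure is taken from the reported range above; the die counts, the assembly yield parameter, and the function name are hypothetical. In practice, manufacturers screen for known-good dies before stacking, so real package yields should be better than this worst case.

```python
# Simplified worst-case model: a package works only if every die works,
# and dies are NOT pre-tested before assembly (a pessimistic assumption).

def package_yield(die_yield: float, dies_per_package: int,
                  assembly_yield: float = 1.0) -> float:
    """Probability that all dies in a package are good and assembly succeeds."""
    return (die_yield ** dies_per_package) * assembly_yield


# Hypothetical die counts; 0.70 is the reported per-die yield range.
for dies in (1, 2, 4):
    result = package_yield(0.70, dies)
    print(f"{dies} die(s): {result:.0%} of packages fully functional")
```

Under these assumptions, yield falls to roughly half with two dies and to about a quarter with four, which is why pre-assembly testing and packaging yield matter as much as the headline node yield.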

A Definitive Turnaround

The successful deployment of Intel 18A represents a watershed moment in semiconductor history. It validates the "Systems Foundry" vision and demonstrates that the "five nodes in four years" plan was more than just marketing—it was a successful, albeit grueling, re-engineering of the company's DNA. Intel has effectively ended its period of "stagnation," re-entering the ring as a top-tier competitor capable of setting the technological pace for the rest of the industry.

As we move through the first quarter of 2026, the key metrics to watch will be the real-world battery life of Panther Lake laptops and the speed at which Microsoft and other foundry customers ramp up their 18A orders. For the first time in a generation, the "Intel Inside" sticker is once again a symbol of the leading edge, but the true test lies in whether Intel can maintain this momentum as it moves into the even more challenging territory of the 14A node and beyond.

