On January 15, 2026, Wikipedia reached a milestone that many skeptics in the early 2000s thought impossible: its 25th anniversary. The Wikimedia Foundation marked the occasion not just with digital time capsules and community festivities, but with a series of landmark partnerships that signal a fundamental shift in how the world’s most famous encyclopedia will survive the generative AI revolution. By formalizing agreements with Microsoft Corp. (NASDAQ: MSFT), Meta Platforms, Inc. (NASDAQ: META), and the AI search company Perplexity, Wikipedia has moved from a passive, scraped resource to a "Knowledge as a Service" (KaaS) backbone for the modern AI ecosystem.

These partnerships represent a strategic pivot intended to secure the nonprofit's financial and data future. By moving away from a model where AI giants "scrape" data for free—often straining Wikipedia’s infrastructure without compensation—the Foundation is now providing structured, high-integrity data streams through its Wikimedia Enterprise API. This move ensures that as AI models like Copilot, Llama, and Perplexity’s "Answer Engine" become the primary way humans access information, they are grounded in human-verified, real-time data that is properly attributed to the volunteer editors who create it.

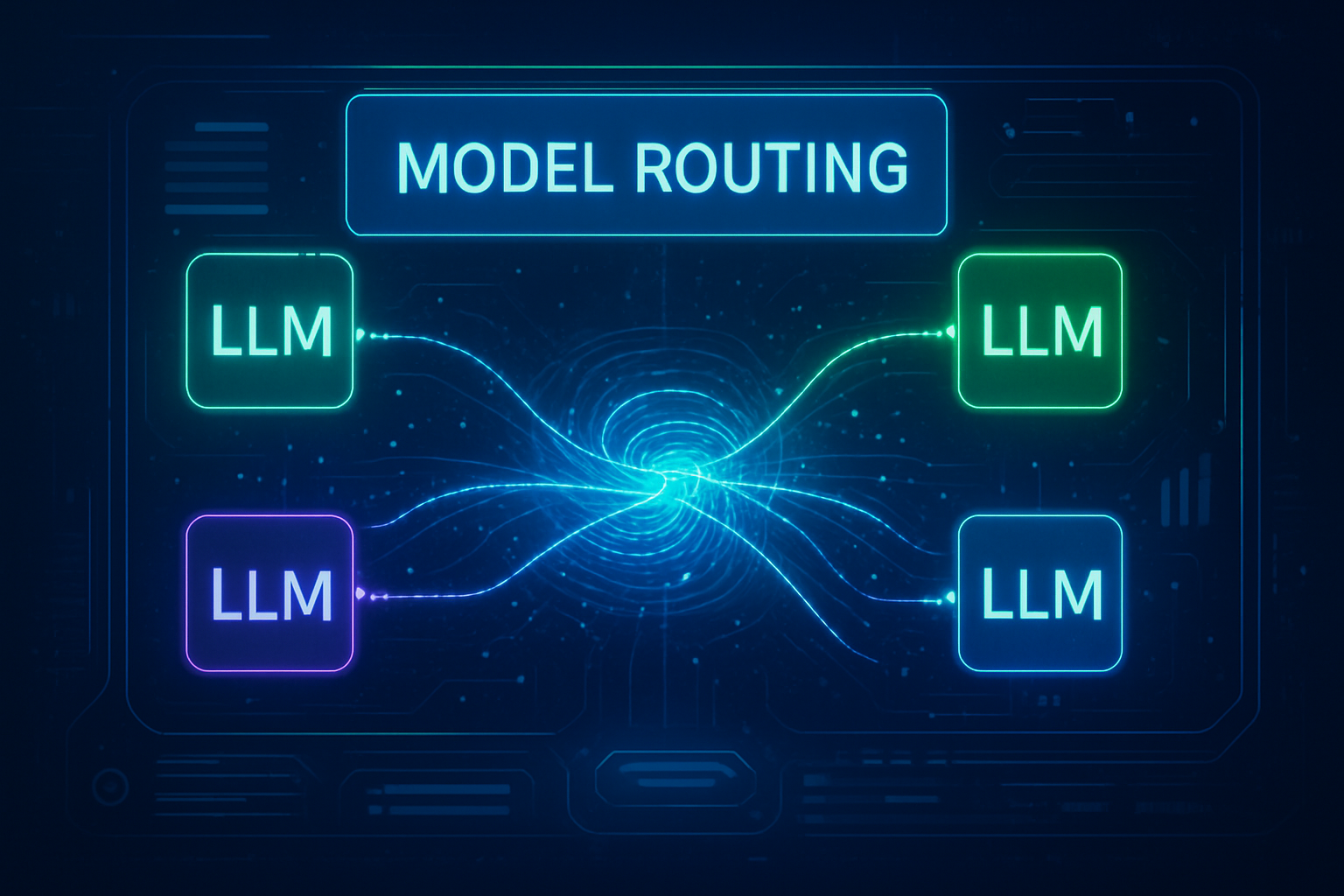

The Wikimedia Enterprise Evolution: Technical Sovereignty for the LLM Era

At the heart of these announcements is a suite of significant technical upgrades to the Wikimedia Enterprise API, designed specifically for the needs of Large Language Model (LLM) developers. Unlike traditional web scraping, which delivers messy HTML, the new "Wikipedia AI Trust Protocol" offers structured data in Parsed JSON formats. This allows AI models to ingest complex tables, scientific statistics, and election results with nearly 100% accuracy, bypassing the error-prone "re-parsing" stage that often leads to hallucinations.
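To make the contrast with scraping concrete, here is a minimal Python sketch of ingesting a table that arrives as structured JSON. The payload shape (`table`, `headers`, `rows`) and the sample values are assumptions made for illustration; they are not the actual Wikimedia Enterprise schema.

```python
import json

# Hypothetical payload: field names and values are illustrative only,
# not the real Wikimedia Enterprise response format.
SAMPLE_PAYLOAD = """
{
  "title": "Example election",
  "table": {
    "headers": ["Candidate", "Votes"],
    "rows": [["Candidate A", 1200], ["Candidate B", 950]]
  }
}
"""


def table_to_records(payload: str) -> list[dict]:
    """Turn a structured table into one dict per row, keyed by column header."""
    table = json.loads(payload)["table"]
    return [dict(zip(table["headers"], row)) for row in table["rows"]]


records = table_to_records(SAMPLE_PAYLOAD)
print(records)
```

Because the values arrive already typed and keyed, there is no HTML re-parsing step in which a cell could be misread or shifted into the wrong column.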

Perhaps the most groundbreaking technical addition is the introduction of two new machine-learning metrics: the Reference Need Score and the Reference Risk Score. The Reference Need Score uses internal Wikipedia telemetry to flag claims that require more citations, effectively telling an AI model, "this fact is still under debate." The Reference Risk Score, by contrast, aggregates the reliability of the citations already on a page. With this metadata, partners like Meta can weight their training data by the integrity of the source material, a radical departure from the "all data is equal" approach of early LLM training.
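One way a developer might use the two scores to weight training data is sketched below. It assumes both scores are normalized to the range 0.0 to 1.0, with higher values meaning more missing citations or less reliable ones; the field names, the multiplicative formula, and the `floor` parameter are all hypothetical and are not taken from the Foundation's documentation.

```python
from dataclasses import dataclass


@dataclass
class Article:
    title: str
    reference_need: float  # assumed 0.0-1.0: higher = more claims lacking citations
    reference_risk: float  # assumed 0.0-1.0: higher = less reliable existing citations


def training_weight(article: Article, floor: float = 0.1) -> float:
    """Down-weight articles with missing or unreliable citations.

    The floor keeps weakly sourced articles in the mix at a low weight
    instead of discarding them outright (a design assumption).
    """
    integrity = (1.0 - article.reference_need) * (1.0 - article.reference_risk)
    return max(floor, integrity)


corpus = [
    Article("Well sourced", reference_need=0.0, reference_risk=0.0),
    Article("Mixed", reference_need=0.5, reference_risk=0.5),
    Article("Poorly sourced", reference_need=1.0, reference_risk=0.8),
]
weights = {a.title: training_weight(a) for a in corpus}
print(weights)
```

A multiplicative rule is only one option; a pipeline could equally filter on a threshold or feed the scores to a learned sampler.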

Initial reactions from the AI research community have been overwhelmingly positive. Dr. Elena Rossi, an AI ethics researcher, noted that "Wikipedia is providing the first real 'nutrition label' for training data. By exposing the uncertainty and the citation history of an article, they are giving developers the tools to build more honest AI." Industry experts also highlighted the new Realtime Stream, which offers a 99% Service Level Agreement (SLA), ensuring that breaking news edited on Wikipedia is reflected in AI assistants within seconds, rather than months.

Strategic Realignment: Why Big Tech is Paying for "Free" Knowledge

The decision by Microsoft and Meta to join the Wikimedia Enterprise ecosystem is a calculated strategic move. For years, these companies have relied on Wikipedia as a "gold standard" dataset for fine-tuning their models. However, the rise of "model collapse," a phenomenon in which AI models trained on AI-generated content begin to degrade in quality, has made human-curated data more valuable than ever. By securing a direct, structured pipeline to Wikipedia, these giants are essentially purchasing insurance against the dilution of their AI's intelligence.

For Perplexity, the partnership is even more critical. As an "answer engine" that provides real-time citations, Perplexity’s value proposition relies entirely on the accuracy and timeliness of its sources. By formalizing its relationship with the Wikimedia Foundation, Perplexity gains more granular access to the "edit history" of articles, allowing it to provide users with more context on why a specific fact was updated. This positions Perplexity as a high-trust alternative to more opaque search engines, potentially disrupting the market share held by traditional giants like Alphabet Inc. (NASDAQ: GOOGL).

The financial implications are equally significant. While Wikipedia remains free for the public, the Foundation is now ensuring that profitable tech firms pay their "fair share" for the massive server costs their data-hungry bots generate. In the last fiscal year, Wikimedia Enterprise revenue surged by 148%, and the Foundation expects these new partnerships to eventually cover up to 30% of its operating costs. This diversification reduces Wikipedia’s reliance on individual donor campaigns, which have become increasingly difficult to sustain in a fractured attention economy.

Combating Model Collapse and the Ethics of "Sovereign Data"

The wider significance of this move cannot be overstated. We are witnessing the end of the "wild west" era of web data. As the internet becomes flooded with synthetic, AI-generated text, Wikipedia remains one of the few remaining "clean" reservoirs of human thought and consensus. By asserting control over its data distribution, the Wikimedia Foundation is setting a precedent for what industry insiders are calling "Sovereign Data"—the idea that high-quality, human-governed repositories must be protected and valued as a distinct class of information.

However, this transition is not without its concerns. Some members of the open-knowledge community worry that a "tiered" system—where tech giants get premium API access while small researchers rely on slower methods—could create a digital divide. The Foundation has countered this by reiterating that all Wikipedia content remains licensed under Creative Commons; the "product" being sold is the infrastructure and the metadata, not the knowledge itself. This balance is a delicate one, but it mirrors the shift seen in other industries where "open source" and "enterprise support" coexist to ensure the survival of the core project.

Compared to previous AI milestones, such as the release of GPT-4, the Wikipedia-AI Pact is less about a leap in processing power and more about a leap in information ethics. It addresses the "parasitic" nature of the early AI-web relationship, moving toward a symbiotic model. If Wikipedia had not acted, it risked becoming a ghost town of bots scraping bots; today’s announcement ensures that the human element remains at the center of the loop.

The Road Ahead: Human-Centered AI and Global Representation

Looking toward the future, the Wikimedia Foundation’s new CEO, Bernadette Meehan, has outlined a vision where Wikipedia serves as the "trust layer" for the entire internet. In the near term, we can expect to see Wikipedia-integrated AI features that help editors identify gaps in knowledge—particularly in languages and regions of the Global South that have historically been underrepresented. By using AI to flag what is missing from the encyclopedia, the Foundation can direct its human volunteers to the areas where they are most needed.

A major challenge remains the "attribution war." While the new agreements mandate that partners like Microsoft and Meta provide clear citations to Wikipedia editors, conversational AI interfaces often obscure these links in practice. Future technical developments will likely focus on "deep linking" within AI responses, allowing users to jump directly from a chat interface to the specific Wikipedia talk page or edit history where a fact was debated. Experts predict that as AI becomes our primary interface with the web, Wikipedia will move from being a "website we visit" to a "service that powers everything we hear."
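As a rough illustration of what such deep links could look like, the sketch below builds talk-page and edit-history URLs from an article title using the standard URL patterns of English Wikipedia. The helper names are hypothetical, and nothing here reflects how any partner actually implements attribution.

```python
from urllib.parse import quote, urlencode

BASE = "https://en.wikipedia.org"


def _page_key(title: str) -> str:
    # MediaWiki page URLs use underscores in place of spaces.
    return title.replace(" ", "_")


def talk_page_url(title: str) -> str:
    """Link to the discussion page where editors debate an article's content."""
    return f"{BASE}/wiki/Talk:{quote(_page_key(title))}"


def history_url(title: str) -> str:
    """Link to the article's revision history."""
    query = urlencode({"title": _page_key(title), "action": "history"})
    return f"{BASE}/w/index.php?{query}"


print(talk_page_url("Model collapse"))
print(history_url("Model collapse"))
```

A chat interface could attach links like these to each cited fact so that readers can inspect the debate behind it.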

A New Chapter for the Digital Commons

As the 25th-anniversary celebrations draw to a close, the key takeaway is clear: Wikipedia has successfully navigated the existential threat posed by generative AI. By leaning into its role as the world’s most reliable human dataset and creating a sustainable commercial framework for its data, the Foundation has secured its place in history for another quarter-century. This development is a pivotal moment in the history of the internet, marking the transition from a web of links to a web of verified, structured intelligence.

The significance of this moment lies in its defense of human labor. At a time when AI is often framed as a replacement for human intellect, Wikipedia’s partnerships prove that AI is actually more dependent on human consensus than ever before. In the coming weeks, industry observers should watch for the integration of the "Reference Risk Scores" into mainstream AI products, which could fundamentally change how users perceive the reliability of the answers they receive. Wikipedia at 25 is no longer just an encyclopedia; it is the vital organ keeping the AI-driven internet grounded in reality.
