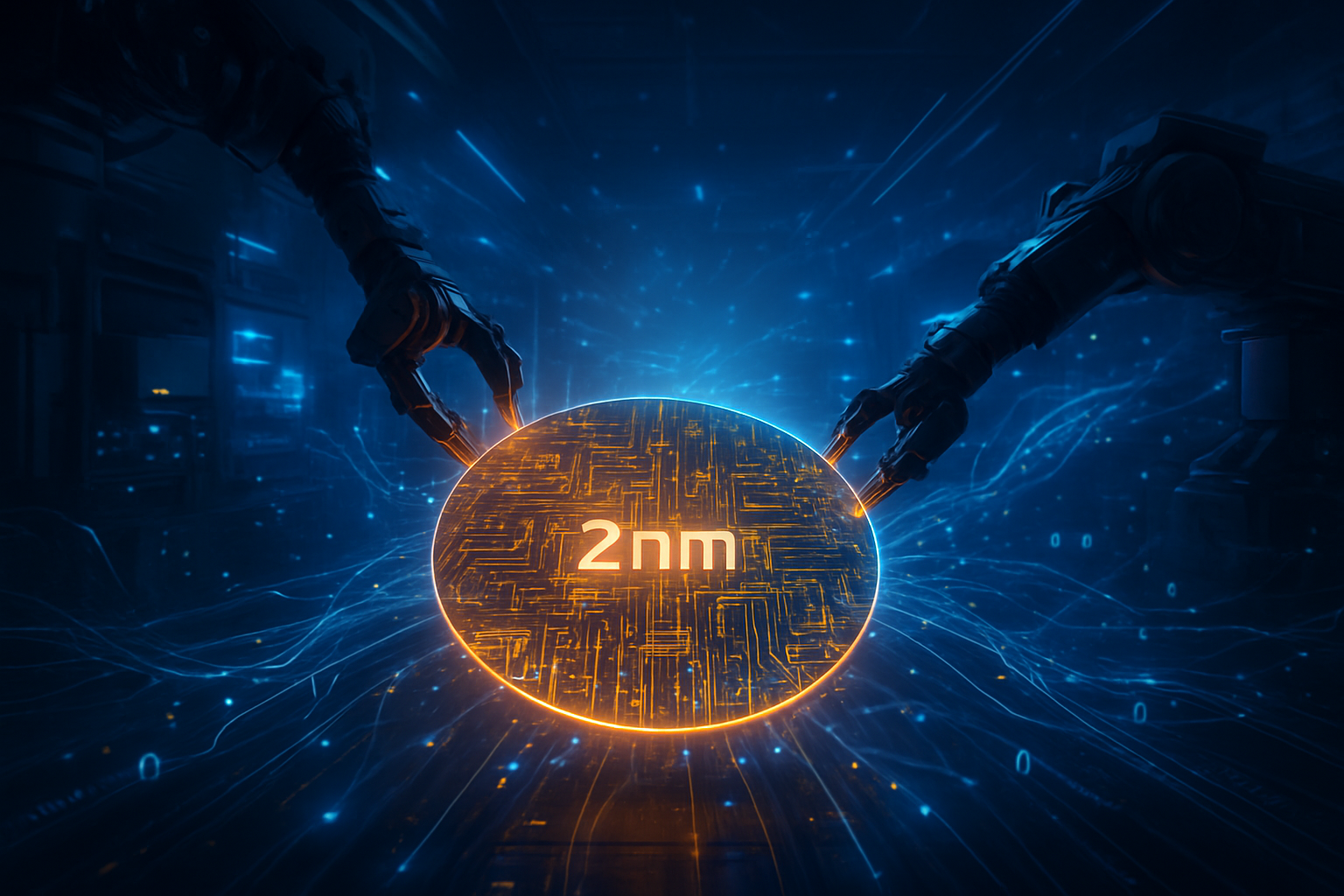

As of January 5, 2026, the global semiconductor landscape has officially shifted. Taiwan Semiconductor Manufacturing Company (NYSE: TSM) has announced the successful commencement of mass production for its 2nm (N2) process technology, marking the industry’s first large-scale transition to Nanosheet Gate-All-Around (GAA) transistors. This milestone, centered at the company’s state-of-the-art Fab 20 and Fab 22 facilities, represents the most significant architectural change in chip manufacturing in over a decade, promising to break the efficiency bottlenecks that have begun to plague the artificial intelligence and mobile computing sectors.

The immediate significance of this development is hard to overstate. With 2nm capacity already reported as overbooked through the end of the year, the move to N2 is not merely a technical upgrade but a strategic linchpin for the world’s most valuable technology firms. TSMC’s headline figures for N2 are a speed increase of up to 15% at the same power, or a power reduction of up to 30% at the same speed, compared to the previous 3nm node. Those gains provide the hardware foundation required to sustain the current "AI supercycle" and the next generation of energy-conscious consumer electronics.
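As a rough illustration, the 15% speed and 30% power figures above can be converted into performance-per-watt ratios. The sketch below assumes, as is common for foundry node comparisons, that the speed gain is quoted at equal power and the power reduction at equal speed. The function name and that reading of the figures are assumptions made for this example, not details from TSMC's announcement.

```python
def perf_per_watt_gain(speed_gain: float, power_reduction: float) -> float:
    """Relative performance-per-watt versus the baseline node (1.0 = no change)."""
    relative_speed = 1.0 + speed_gain
    relative_power = 1.0 - power_reduction
    return relative_speed / relative_power


# Case 1: spend the whole improvement on speed (same power as 3nm).
iso_power = perf_per_watt_gain(speed_gain=0.15, power_reduction=0.0)

# Case 2: spend the whole improvement on power (same speed as 3nm).
iso_speed = perf_per_watt_gain(speed_gain=0.0, power_reduction=0.30)

print(f"same power, faster chip: {iso_power:.2f}x performance per watt")
print(f"same speed, cooler chip: {iso_speed:.2f}x performance per watt")
```

Under these assumptions the power-saving option yields the larger efficiency ratio, which is one reason the 30% figure receives the most attention in data-center discussions.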

A Fundamental Shift: Nanosheet GAA and the Rise of Fab 20 & 22

The transition to the N2 node marks TSMC’s formal departure from the FinFET (Fin Field-Effect Transistor) architecture, which the company has used since its 16nm node. The new Nanosheet GAA technology uses horizontal stacks of silicon "sheets", each surrounded by the transistor gate on all four sides. This design provides superior electrostatic control, sharply reducing the current leakage that had become a growing concern as transistors approached atomic scales. Because chip designers can adjust the width of these nanosheets, TSMC has introduced a level of "width scalability" that lets a design trade drive current against power: wider sheets for high-performance computing, narrower sheets for low-power efficiency.

Production is currently anchored in two primary hubs in Taiwan. Fab 20, located in the Hsinchu Science Park, served as the initial bridge from research to pilot production and is now operating at scale. Simultaneously, Fab 22 in Kaohsiung, a large "Gigafab" complex, has activated its first phase of 2nm production to meet the volume requirements of global clients. Initial reports suggest that TSMC has achieved yield rates between 60% and 70%, an impressive result for a first-generation GAA process. Competitors such as Samsung (KRX: 005930) and Intel (NASDAQ: INTC) have historically found GAA processes difficult to stabilize at high volumes.

Industry experts have reacted with a mix of awe and relief. "The move to GAA was the industry's biggest hurdle in continuing Moore's Law," noted one lead analyst at a top semiconductor research firm. "TSMC's ability to hit volume production in early 2026 with stable yields effectively secures the roadmap for AI model scaling and mobile performance for the next three years. This isn't just an iteration; it’s a new foundation for silicon physics."

The Silicon Elite: Capacity War and Market Positioning

The arrival of 2nm silicon has triggered an unprecedented scramble among tech giants, resulting in an order book that is reportedly full well into 2027. Apple (NASDAQ: AAPL) has once again secured its position as the primary anchor customer, reportedly claiming over 50% of the initial 2nm capacity. These chips are destined for the upcoming A20 processors in the iPhone 18 series and the M6 series of MacBooks, giving Apple a significant lead over its rivals in power efficiency and on-device AI processing.

NVIDIA (NASDAQ: NVDA) and AMD (NASDAQ: AMD) are also at the forefront of this transition, driven by the insatiable power demands of data centers. NVIDIA is transitioning its high-end compute tiles for the "Rubin" GPU architecture to 2nm to combat the "power wall" that threatens the expansion of massive AI training clusters. Similarly, AMD has confirmed that its Zen 6 "Venice" CPUs and MI450 AI accelerators will leverage the N2 node. This early adoption allows these companies to maintain a competitive edge in the high-performance computing (HPC) market, where every percentage point of energy efficiency translates into millions of dollars in saved operational costs for cloud providers.

For competitors like Intel, the pressure is mounting. While Intel has its own 18A node (equivalent to the 1.8nm class) entering the market, TSMC’s successful 2nm ramp-up reinforces its dominance as the world’s most reliable foundry. The strategic advantage for TSMC lies not just in the technology, but in its ability to manufacture these complex chips at a scale that no other firm can currently match. With 2nm wafers reportedly priced at roughly $30,000 each, the barrier to joining the "Silicon Elite" has never been higher, further consolidating power among the industry's wealthiest players.
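To put the reported $30,000 wafer price and the 60% to 70% yield range in perspective, the sketch below estimates the silicon cost of one working chip. It is a back-of-the-envelope illustration only: the 100 mm² die area is a hypothetical value, the 300 mm wafer diameter is assumed, the dies-per-wafer formula is a common first-order approximation, and the reported yield is treated as a simple fraction of good dies. None of these inputs come from TSMC.

```python
import math


def dies_per_wafer(wafer_diameter_mm: float, die_area_mm2: float) -> int:
    """First-order estimate of whole dies that fit on a round wafer.

    The first term is wafer area divided by die area; the second term
    approximates the partial dies lost along the wafer edge.
    """
    radius = wafer_diameter_mm / 2
    gross = math.pi * radius**2 / die_area_mm2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return math.floor(gross - edge_loss)


def cost_per_good_die(wafer_price: float, dies: int, yield_rate: float) -> float:
    """Wafer price spread over only the dies that work."""
    return wafer_price / (dies * yield_rate)


WAFER_PRICE_USD = 30_000   # reported figure quoted in the article
WAFER_DIAMETER_MM = 300    # assumed wafer size
DIE_AREA_MM2 = 100         # hypothetical die size, chosen for illustration

dies = dies_per_wafer(WAFER_DIAMETER_MM, DIE_AREA_MM2)
for yield_rate in (0.60, 0.70):
    cost = cost_per_good_die(WAFER_PRICE_USD, dies, yield_rate)
    print(f"yield {yield_rate:.0%}: about ${cost:,.0f} per good die ({dies} candidates)")
```

The point of the exercise is the sensitivity rather than the absolute numbers: at a fixed wafer price, each step up in yield lowers the cost of every working chip, which is why yield figures matter so much to customers booking capacity.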

AI and the Energy Imperative: Wider Implications

The shift to 2nm is occurring at a critical juncture for the broader AI landscape. As large language models (LLMs) grow in complexity, the energy required to train and run them has become a primary bottleneck for the industry. The 30% power reduction offered by the N2 node is therefore not just a technical specification but a precondition for sustainable AI expansion. By reducing the power draw and thermal footprint of data centers, TSMC is enabling a next wave of AI workloads that would have been physically or economically impractical on 3nm or 5nm hardware.
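The scale of that power reduction is easier to appreciate with a worked example. The sketch below applies the 30% figure to a hypothetical 100 MW AI cluster paying a hypothetical $0.08 per kWh. It also assumes the reduction applies uniformly to the whole facility at unchanged performance, which overstates the real effect because cooling, memory, and networking do not all scale with the logic node.

```python
HOURS_PER_YEAR = 24 * 365


def annual_energy_cost(power_mw: float, price_per_kwh: float) -> float:
    """Yearly electricity bill for a facility drawing constant power."""
    power_kw = power_mw * 1000
    return power_kw * HOURS_PER_YEAR * price_per_kwh


BASELINE_POWER_MW = 100.0   # hypothetical cluster size
PRICE_PER_KWH_USD = 0.08    # hypothetical electricity price
POWER_REDUCTION = 0.30      # N2 figure quoted in the article

baseline_cost = annual_energy_cost(BASELINE_POWER_MW, PRICE_PER_KWH_USD)
n2_cost = annual_energy_cost(
    BASELINE_POWER_MW * (1 - POWER_REDUCTION), PRICE_PER_KWH_USD
)
savings = baseline_cost - n2_cost

print(f"baseline: ${baseline_cost:,.0f} per year")
print(f"with 30% lower power: ${n2_cost:,.0f} per year")
print(f"difference: ${savings:,.0f} per year")
```

With these illustrative inputs the difference runs to tens of millions of dollars per year for a single site, before counting any reduction in cooling load.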

This milestone also signals a pivot toward "AI-first" silicon design. Unlike previous nodes where mobile phones were the sole drivers of innovation, the N2 node has been optimized from the ground up for high-performance computing. This reflects a broader trend where the semiconductor industry is no longer just serving consumer electronics but is the literal engine of the global digital economy. The transition to GAA technology ensures that the industry can continue to pack more transistors into a given area, maintaining the momentum of Moore’s Law even as traditional scaling methods hit their physical limits.

However, the move to 2nm also raises concerns regarding the geographical concentration of advanced chipmaking. With Fab 20 and Fab 22 both located in Taiwan, the global tech economy remains heavily dependent on a single region for its most critical hardware. While TSMC is expanding its footprint in Arizona, those facilities are not expected to reach 2nm parity until 2027 or later. This creates a "silicon shield" that is as much a geopolitical factor as it is a technological one, keeping the global spotlight firmly on the stability of the Taiwan Strait.

The Angstrom Roadmap: N2P, A16, and Super Power Rail

Looking beyond the current N2 milestone, TSMC has already laid out an aggressive roadmap for the "Angstrom Era." By the second half of 2026, the company expects to introduce N2P, a performance-enhanced version of the 2nm node that will likely be adopted by flagship Android SoC makers like Qualcomm (NASDAQ: QCOM) and MediaTek (TWSE: 2454). N2P is expected to offer incremental gains in performance and power, refining the GAA process as it matures.

The most anticipated leap, however, is the A16 (1.6nm) node, slated for mass production in late 2026. The A16 node will introduce "Super Power Rail" technology, TSMC’s proprietary version of a backside power delivery network (BSPDN). This approach moves the power distribution network to the backside of the wafer and connects it directly to the transistor's source and drain. By separating the power and signal paths, Super Power Rail reduces voltage drop in the power network and frees up significant space on the front side of the chip for signal routing.

Experts predict that the combination of GAA and Super Power Rail will define the next five years of semiconductor innovation. The A16 node is projected to offer a further speed increase of up to 10% or a power reduction of up to 20% relative to N2P. As AI models move toward real-time multi-modal processing and autonomous agents, these technical leaps will be essential for providing the "compute-per-watt" needed to make such applications viable on mobile devices and edge hardware.

A Landmark in Computing History

TSMC’s start of 2nm mass production in January 2026 will be remembered as the moment the semiconductor industry completed the transition from FinFET to Nanosheet GAA at scale. This shift is more than a routine node shrink; it is a fundamental re-engineering of the transistor that supports the continued growth of artificial intelligence and high-performance computing. With the roadmap for N2P and A16 already in motion, the "Angstrom Era" is no longer a theoretical future but a tangible reality.

The key questions for the coming months are how quickly TSMC can improve its yield and how soon its primary customers (Apple, NVIDIA, and AMD) can bring their 2nm-powered products to market. As the first 2nm-powered devices begin to appear later this year, the gap between the "Silicon Elite" and the rest of the industry is likely to widen, driven by the performance and efficiency gains of the N2 node.

In the long term, this development solidifies TSMC’s position as the indispensable architect of the modern world. While challenges remain—including geopolitical tensions and the rising costs of wafer production—the commencement of 2nm mass production proves that the limits of silicon are still being pushed further than many thought possible. The AI revolution has found its new engine, and it is built on a foundation of nanosheets.

This content is intended for informational purposes only and represents analysis of current AI developments.
