In a move that has sent shockwaves through the global technology sector and brought the U.S.-China trade war to a fever pitch, President Trump signed a sweeping Section 232 proclamation on January 14, 2026, imposing an immediate 25% tariff on advanced semiconductors. Citing a critical threat to national security from the United States' reliance on foreign-made logic chips, the administration has framed the tariff as a necessary "sovereign toll" to force the reshoring of high-tech manufacturing. The proclamation marks a radical shift from targeted export controls to a broad-based fiscal barrier, effectively taxing the very hardware that powers the modern artificial intelligence revolution.

The geopolitical tension escalated further on January 16, 2026, when Commerce Secretary Howard Lutnick issued a blunt "100% tariff ultimatum" to South Korean memory giants Samsung Electronics (KRX:005930) and SK Hynix (KRX:000660). Speaking at a groundbreaking for a new Micron Technology (NASDAQ:MU) facility, Lutnick declared that foreign memory manufacturers must move from simple packaging to full-scale wafer fabrication on American soil or face a doubling of their costs at the U.S. border. This "Build-or-Pay" mandate has left international allies and tech conglomerates scrambling to navigate a new era of managed trade, in which access to the American market is contingent on multi-billion-dollar domestic investments.

Technical Scope and the 'Surgical Strike' on High-End Silicon

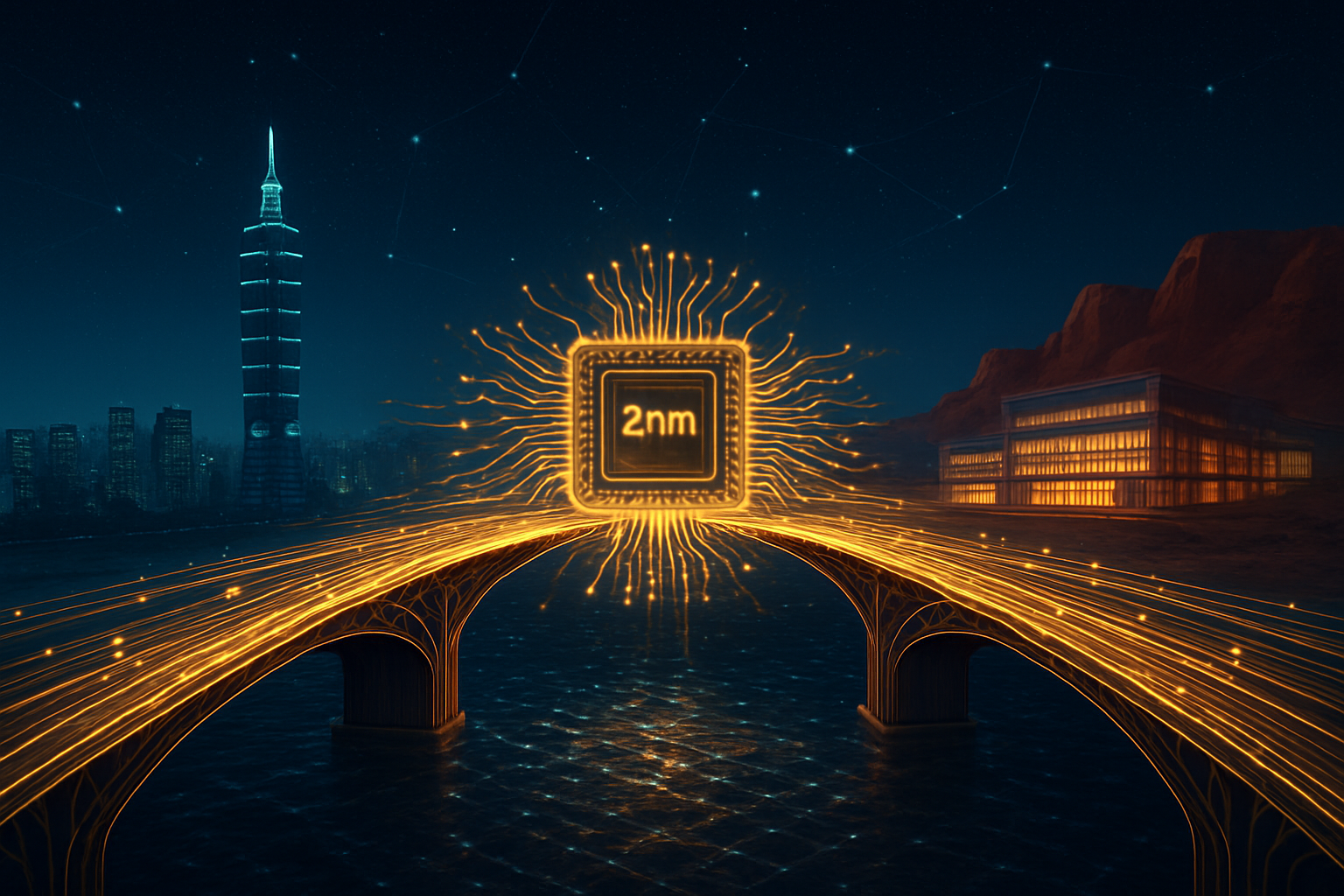

The proclamation, titled "Adjusting Imports of Semiconductors," draws its authority from Section 232 of the Trade Expansion Act of 1962 and lays out a two-phase strategy aimed at reclaiming the domestic silicon supply chain. Phase One, which took effect on January 15, 2026, targets high-end logic integrated circuits used in data centers and AI training clusters. Its technical parameters are remarkably precise: the tariff covers chips with a Total Processing Performance (TPP) above 14,000 and DRAM bandwidth above 4,500 GB/s. This technical "surgical strike" ensures that the 25% levy hits the most powerful hardware currently in production, most notably the H200 series from NVIDIA (NASDAQ:NVDA).
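To make the two-part test concrete, here is a minimal Python sketch of a Phase One scope check. It rests on assumptions the article does not confirm: that the proclamation reuses the TPP definition from the BIS export-control rules (2 × MacTOPS × operand bit length, taking the highest value across supported precisions), that a chip must clear both thresholds, and that "exceed" means strictly greater than. `ChipSpec`, `in_phase_one_scope`, and both accelerators are hypothetical, with made-up specs.

```python
from dataclasses import dataclass

# Thresholds as reported in the article; "exceed" is read as strictly greater than.
TPP_THRESHOLD = 14_000
DRAM_BW_THRESHOLD_GBPS = 4_500


@dataclass
class ChipSpec:
    name: str
    # Peak dense (non-sparse) throughput in tera-ops/s, keyed by operand bit length
    # (e.g. 8 for FP8/INT8, 16 for FP16/BF16). Vendor figures count one
    # multiply-accumulate (MAC) as two operations.
    dense_tops_by_bits: dict[int, float]
    dram_bandwidth_gbps: float


def total_processing_performance(chip: ChipSpec) -> float:
    """Assumed BIS-style TPP: 2 x MacTOPS x bit length, highest value across precisions."""
    return max(
        2 * (tops / 2) * bits  # tops / 2 converts ops/s into MACs/s (MacTOPS)
        for bits, tops in chip.dense_tops_by_bits.items()
    )


def in_phase_one_scope(chip: ChipSpec) -> bool:
    """True only if the chip clears BOTH the TPP bar and the DRAM-bandwidth bar."""
    return (
        total_processing_performance(chip) > TPP_THRESHOLD
        and chip.dram_bandwidth_gbps > DRAM_BW_THRESHOLD_GBPS
    )


# Hypothetical parts with made-up specs, for illustration only.
chip_a = ChipSpec("hypothetical-accelerator-a", {8: 2000.0, 16: 1000.0}, 4800.0)
chip_b = ChipSpec("hypothetical-accelerator-b", {8: 2000.0}, 4000.0)

print(total_processing_performance(chip_a))  # 16000.0
print(in_phase_one_scope(chip_a))            # True
print(in_phase_one_scope(chip_b))            # False: bandwidth is below the bar
```

Because both conditions must hold under this reading, a part with very high compute but modest memory bandwidth (like `chip_b`) would fall outside Phase One.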

Unlike previous trade measures that focused on denying China access to technology, this proclamation introduces a "revenue-sharing" model that reaches even approved exports. In a paradoxical "whiplash" policy, the administration approved the export of NVIDIA's H200 chips to China on January 13, only to slap a 25% tariff on them the following day. Because these chips, often fabricated by Taiwan Semiconductor Manufacturing Company (NYSE:TSM), must pass through U.S. facilities for third-party security testing before reaching international buyers, the tariff effectively becomes a surcharge on every covered high-end GPU exported through that route.

Industry experts and the AI research community have expressed immediate alarm over the potential for higher R&D costs. Although the proclamation includes "carve-outs" for U.S.-based data centers with a power capacity over 100 MW and specific exemptions for domestic startups, the complexity of the relevant Harmonized Tariff Schedule (HTS) codes, specifically 8471.50 and 8473.30, has created a compliance nightmare for hardware integrators. Researchers fear that the rising cost of "compute" will further widen the gap between well-funded tech giants and academic institutions, potentially concentrating AI innovation within a handful of elite, federally subsidized corporations.
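The carve-outs can be read as a simple eligibility check. The sketch below is hypothetical: it assumes the 100 MW test applies to a single U.S.-based facility, treats "over 100 MW" as strictly greater than, and stands in a boolean flag for the startup criteria, which the article does not spell out. It ignores HTS classification entirely, which is where much of the real compliance burden lies.

```python
from dataclasses import dataclass
from typing import Optional

# "Power capacity over 100 MW" per the article; read as strictly greater than.
DATA_CENTER_MIN_POWER_MW = 100.0


@dataclass
class Importer:
    name: str
    us_based_data_center: bool
    data_center_power_mw: float
    # Hypothetical flag: the article does not say how "domestic startup" is defined.
    qualifies_as_domestic_startup: bool


def exemption_reason(importer: Importer) -> Optional[str]:
    """Return which carve-out applies, or None if the tariff applies in full."""
    if (importer.us_based_data_center
            and importer.data_center_power_mw > DATA_CENTER_MIN_POWER_MW):
        return "large U.S. data center carve-out"
    if importer.qualifies_as_domestic_startup:
        return "domestic startup exemption"
    return None


# Hypothetical importers for illustration.
hyperscaler = Importer("hypothetical-hyperscaler", True, 150.0, False)
university_lab = Importer("hypothetical-university-lab", True, 20.0, False)

print(exemption_reason(hyperscaler))     # large U.S. data center carve-out
print(exemption_reason(university_lab))  # None
```

The university lab falls through both carve-outs and pays the full rate, which is the gap between tech giants and academia that researchers worry about.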

Corporate Fallout and the Rise of Domestic Champions

The corporate fallout from the January 14 proclamation has been immediate and severe, particularly for NVIDIA and Advanced Micro Devices (NASDAQ:AMD). NVIDIA, whose complex global supply chain bridges Taiwanese fabrication and U.S. design, now finds itself in the crossfire of a fiscal battle. The 25% tariff adds a quarter of the H200's declared value to the landed cost of each unit, a steep premium on the world's most sought-after AI chip. While NVIDIA's market dominance provides some pricing power, the company faces the risk of a "shadow ban" in China, as Beijing has reportedly instructed domestic firms like Alibaba (NYSE:BABA) and Tencent (OTC:TCEHY) to halt purchases to avoid paying the "Trump Fee" to the U.S. Treasury.

The big winners in this new landscape appear to be domestic champions with existing U.S. fabrication footprints. Intel (NASDAQ:INTC) has seen its stock buoyed by the prospect of becoming the primary beneficiary of the administration's "Tariffs-for-Investment" model. Under this framework, companies that commit to massive domestic expansions, such as the $500 billion "Taiwan Deal" signed by TSMC, can receive a 15% tariff cap and duty-free import quotas. This creates a tiered competitive environment where those who "build American" enjoy a significant price advantage over foreign competitors who remain tethered to overseas foundries.
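The tiered "Tariffs-for-Investment" model is, at bottom, arithmetic on declared value. This Python sketch assumes an ad valorem duty on declared customs value, a 25% standard rate, and the reported 15% cap plus a duty-free quota for committed investors only. The quota size, the $30,000 unit value, and `duty_owed` itself are hypothetical. `Decimal` keeps the currency math exact.

```python
from decimal import Decimal

STANDARD_RATE = Decimal("0.25")  # Phase One rate on covered chips
INVESTOR_CAP = Decimal("0.15")   # cap reported for firms with domestic-investment commitments


def duty_owed(units: int, unit_value: Decimal, committed_investor: bool,
              duty_free_quota: int = 0) -> Decimal:
    """Duty on a shipment under an assumed two-tier model."""
    if not committed_investor:
        # No commitment: every unit pays the standard rate; quotas do not apply.
        return units * unit_value * STANDARD_RATE
    # Committed investor: units inside the quota enter duty-free,
    # and the remainder pays the lower of the standard rate and the cap.
    dutiable_units = max(units - duty_free_quota, 0)
    return dutiable_units * unit_value * min(STANDARD_RATE, INVESTOR_CAP)


# Hypothetical shipment: 1,000 units at a made-up declared value of $30,000 each.
value = Decimal("30000")
print(duty_owed(1000, value, committed_investor=False))                      # 7500000.00
print(duty_owed(1000, value, committed_investor=True, duty_free_quota=400))  # 2700000.00
```

On these made-up numbers, the committed investor pays 36% of the duty its uncommitted rival does, which is the kind of price advantage the tiered model creates.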

However, for startups and mid-tier AI labs, the supply-chain disruption could be catastrophic. Products that depend on just-in-time delivery of specialized components are seeing lead times stretch as customs officials apply the new TPP benchmarks. Market positioning is no longer just about who has the best architecture, but also about who holds the most favorable "tariff offset" status. Overnight, the strategic advantage has shifted from firms with the most efficient global supply chains to those with the deepest political ties and the largest domestic construction budgets.

The Geopolitical Schism: A New 'Silicon Curtain'

This development represents a watershed moment in the broader AI landscape, signaling the end of the "borderless" era of technology development. For decades, the semiconductor industry operated on the principle of comparative advantage, with design in the West and manufacturing in the East. The Section 232 proclamation effectively dismantles this model, replacing it with a "Silicon Curtain" that prioritizes national security and domestic industrial policy over market efficiency. It echoes the 2018 steel and aluminum tariffs, which also relied on Section 232, but with far higher stakes, as semiconductors are now viewed as the "oil of the 21st century."

The geopolitical implications for the U.S.-China trade war are profound. China has already retaliated by implementing a "customs blockade" on H200 shipments in Shenzhen and Hong Kong, signaling that it will not subsidize the U.S. economy through tariff payments. This standoff threatens to bifurcate the global AI ecosystem into two distinct technological blocs: a U.S.-led bloc powered by high-cost, domestically-manufactured silicon, and a China-led bloc forced to accelerate the development of homegrown alternatives like Huawei’s Ascend 910C. The risk of a total "decoupling" has moved from a theoretical possibility to an operational reality.

Compared with earlier AI milestones, such as the release of GPT-4 or the initial export controls of 2022, the 2026 tariffs may prove more consequential in the long run. While software breakthroughs define what AI can do, these tariffs define who can afford to do it. The "100% ultimatum" on Samsung and SK Hynix is particularly significant because it targets the High Bandwidth Memory (HBM) that is essential for all large-scale AI training. By threatening to double the cost of memory, the U.S. is using its market size as a weapon to force a total reconfiguration of the global high-tech map.

Future Developments: The Race for Reshoring

Looking ahead, the next several months will be defined by intense negotiations as the administration's "Phase Two" looms. South Korean officials have already entered "emergency response mode" to seek a deal similar to Taiwan's, hoping to secure a tariff cap in exchange for accelerating construction of wafer fabrication plants in Texas and Indiana. If Samsung and SK Hynix fail to reach an agreement by mid-2026, the 100% tariff on memory chips could trigger a sharp inflationary spike in the cost of all computing hardware, from enterprise servers to high-end consumer electronics.
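Why a memory tariff ripples into all hardware pricing comes down to two quantities: a 100% ad valorem duty doubles the landed cost of the memory itself, and the system-level increase scales with memory's share of the bill of materials. The sketch below assumes full tariff pass-through by default and ignores freight and other fees. The $100,000 server with $30,000 of imported memory is hypothetical, not a reported figure, and real memory shares vary widely by product.

```python
def landed_cost(declared_value: float, tariff_rate: float) -> float:
    """Import cost after an ad valorem tariff (ignores freight, other duties, fees)."""
    return declared_value * (1 + tariff_rate)


def system_cost_increase(system_cost: float, imported_memory_value: float,
                         tariff_rate: float, pass_through: float = 1.0) -> float:
    """Fractional rise in a system's cost when only its imported memory is tariffed.

    pass_through is the (assumed) share of the duty passed on to the buyer.
    """
    added = imported_memory_value * tariff_rate * pass_through
    return added / system_cost


# A 100% tariff doubles the landed cost of the memory itself...
print(landed_cost(10_000, 1.00))                     # 20000.0
# ...while a hypothetical $100,000 server with $30,000 of imported memory
# rises 30% at full pass-through, versus 7.5% at a 25% rate.
print(system_cost_increase(100_000, 30_000, 1.00))   # 0.3
print(system_cost_increase(100_000, 30_000, 0.25))   # 0.075
```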

The industry also anticipates a wave of "tariff-dodging" innovation. Developers may begin optimizing AI models for lower-performance chips that fall just below the 14,000 TPP threshold, or explore novel architectures that rely less on high-bandwidth memory. However, sustaining AI progress under these fiscal constraints is an immense technical challenge. In the near term, we expect to see an "AI construction boom" across the American Rust Belt and Silicon Prairie, as the combination of CHIPS Act subsidies and Section 232 penalties makes U.S. manufacturing the only viable long-term strategy for global chipmakers.

Conclusion: Reimagining the Global Supply Chain

The January 2026 Section 232 proclamation is a definitive assertion of technological sovereignty that will be remembered as a turning point in AI history. By leveraging 25% and 100% tariffs as tools of industrial policy, the Trump administration has fundamentally altered the economics of artificial intelligence. The key takeaways are clear: the era of globalized, low-cost semiconductor supply chains is over, and the future of AI hardware is now inextricably linked to domestic manufacturing capacity and geopolitical loyalty.

The long-term impact of this "Silicon Curtain" remains to be seen. While it may succeed in reshoring critical manufacturing and securing the U.S. supply chain, it risks stifling global innovation and provoking a permanent technological schism with China. In the coming months, the industry will be watching for the outcome of the South Korean negotiations and the planned Trump-Xi Summit in April 2026. For now, the world of AI is in a state of suspended animation, waiting to see whether the high cost of the new "sovereign toll" will be the price of security or the cause of a global tech recession.

This content is intended for informational purposes only and represents analysis of current AI developments.

TokenRing AI delivers enterprise-grade solutions for multi-agent AI workflow orchestration, AI-powered development tools, and seamless remote collaboration platforms.

For more information, visit https://www.tokenring.ai/.