The transition to a high-efficiency, electrified future has reached a critical tipping point as of January 2, 2026. Recent breakthroughs in Silicon Carbide (SiC) research and manufacturing are fundamentally reshaping the landscape of power electronics. By moving beyond traditional silicon and embracing wide bandgap (WBG) materials, the industry is unlocking unprecedented performance in electric vehicles (EVs), renewable energy storage, and, most crucially, the massive power-hungry data centers that fuel modern generative AI.

The immediate significance of these developments lies in the convergence of AI and hardware. While AI models demand more energy than ever before, AI-driven manufacturing techniques are simultaneously being used to perfect the very SiC chips required to manage that power. This symbiotic relationship has accelerated the shift toward 200mm (8-inch) wafer production and next-generation "trench" architectures, promising a new era of energy efficiency that could reduce global data center power consumption by nearly 10% over the next decade.

The Technical Edge: M3e Platforms and AI-Optimized Crystal Growth

At the heart of the recent SiC surge is a series of technical milestones that have pushed the material's performance limits. In late 2025, onsemi (NASDAQ:ON) unveiled its EliteSiC M3e technology, a landmark development in planar MOSFET architecture. The M3e platform achieved a 30% reduction in conduction losses and a 50% reduction in turn-off losses compared to previous generations. This leap is vital for 800V EV traction inverters and high-density AI power supplies, where heat dissipation is the primary bottleneck for increasing compute density.

Simultaneously, Infineon Technologies (OTC:IFNNY) has successfully scaled its CoolSiC Generation 2 (G2) MOSFETs. These devices offer up to 20% better power density and are specifically designed to support multi-level topologies in data center Power Supply Units (PSUs). Unlike previous approaches that relied on simple silicon replacements, these new SiC designs are "smart," featuring integrated gate drivers that minimize parasitic inductance. This allows for switching frequencies that were previously unattainable, enabling smaller, lighter, and more efficient power converters.

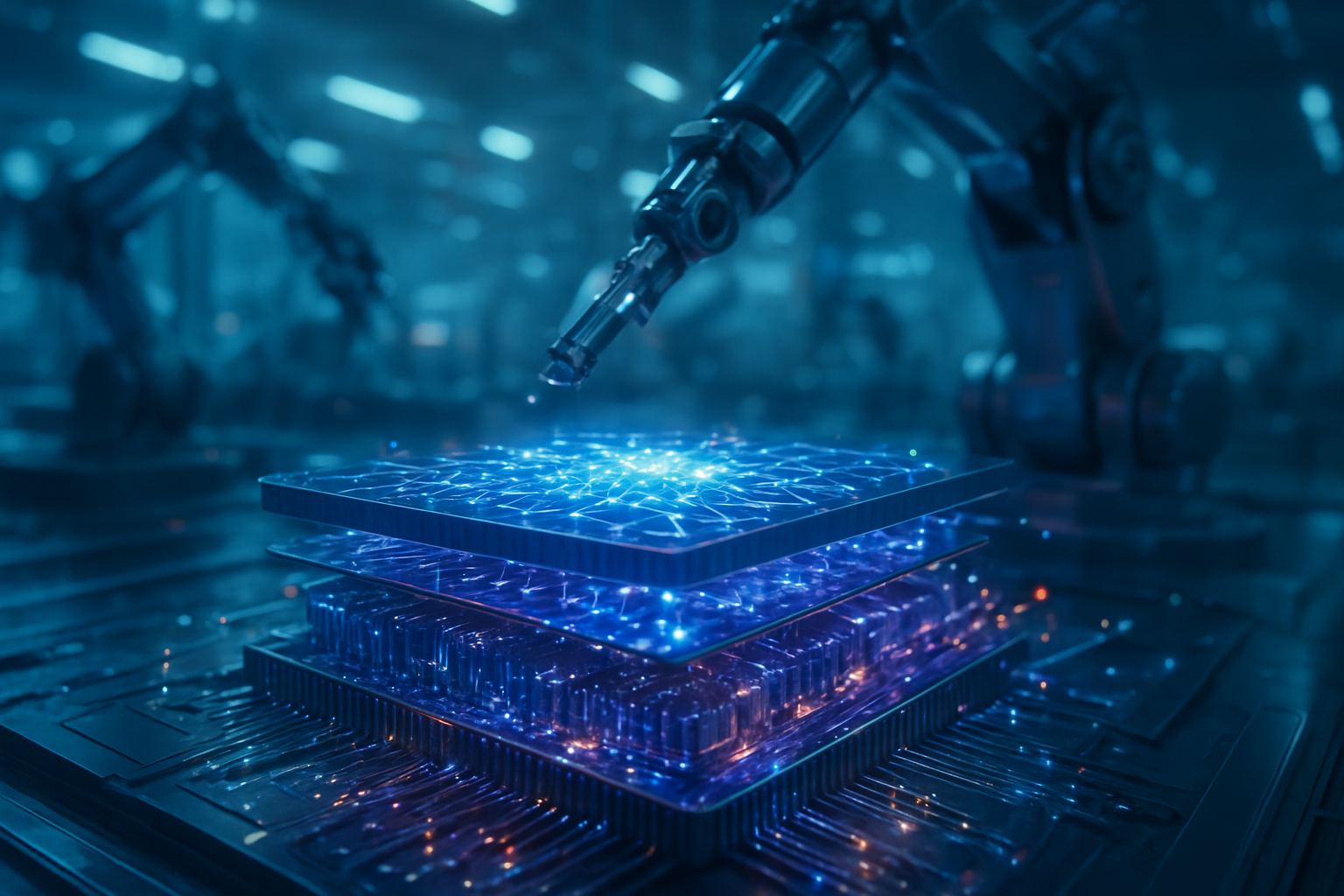

Perhaps the most transformative technical advancement is the integration of AI into the manufacturing process itself. SiC is notoriously difficult to produce due to "killer defects" like basal plane dislocations. New systems from Applied Materials (NASDAQ:AMAT), such as the PROVision 10 with ExtractAI technology, now use deep learning to identify these microscopic flaws with 99% accuracy. By analyzing datasets from the crystal growth process (boule formation), AI models can now predict wafer failure before slicing even begins, leading to a 30% reduction in yield detraction—a move that has been hailed by the research community as the "holy grail" of SiC production.

The Scale War: Industry Giants and the 200mm Transition

The competitive landscape of 2026 is defined by a "Scale War" as major players race to transition from 150mm to 200mm (8-inch) wafers. This shift is essential for driving down costs and meeting the projected $10 billion market demand. Wolfspeed (NYSE:WOLF) has taken a commanding lead with its $5 billion "John Palmour" (JP) Manufacturing Center in North Carolina. As of this month, the facility has moved into high-volume 200mm crystal production, increasing the company's wafer capacity tenfold compared to its legacy sites.
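The cost case for larger wafers is largely geometric: a 200mm wafer has about 1.78 times the raw area of a 150mm wafer. The sketch below illustrates this under stated assumptions. The 25 mm² die size and 3 mm edge exclusion are hypothetical values chosen for illustration, and the estimate ignores defects and scribe lanes, so it is an upper bound rather than a yield model.

```python
import math

def usable_area_mm2(diameter_mm, edge_exclusion_mm=3.0):
    """Wafer area inside the edge-exclusion ring (exclusion width is an assumption)."""
    radius = diameter_mm / 2 - edge_exclusion_mm
    return math.pi * radius ** 2

def gross_die_estimate(diameter_mm, die_area_mm2, edge_exclusion_mm=3.0):
    """Crude upper bound: usable area divided by die area, ignoring defects and scribe lanes."""
    return int(usable_area_mm2(diameter_mm, edge_exclusion_mm) // die_area_mm2)

# Raw area scales with the square of the diameter.
raw_area_ratio = (200 / 150) ** 2

# Hypothetical 25 mm^2 power die.
dies_150 = gross_die_estimate(150, 25.0)
dies_200 = gross_die_estimate(200, 25.0)

print(f"raw area ratio: {raw_area_ratio:.2f}")
print(f"die ratio with edge exclusion: {dies_200 / dies_150:.2f}")
```

Because the edge-exclusion ring takes a smaller share of a larger wafer, the die ratio in this simplified model comes out slightly above the raw area ratio.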

In Europe, STMicroelectronics (NYSE:STM) has countered with its fully integrated Silicon Carbide Campus in Sicily. This site represents the first time a manufacturer has handled the entire SiC lifecycle—from raw powder and 200mm substrate growth to finished modules—on a single campus. This vertical integration provides a massive strategic advantage, allowing STMicro to supply major automotive partners like Tesla (NASDAQ:TSLA) and BMW with a more resilient and cost-effective supply chain.

The disruption to existing products is already visible. Legacy silicon-based Insulated Gate Bipolar Transistors (IGBTs) are rapidly being phased out of high-performance applications. Startups and major AI labs are the primary beneficiaries, as the new SiC-based 12 kW PSU designs from Infineon and onsemi have reached 99.0% peak efficiency. This allows AI clusters to handle massive "power spikes"—surging from 0% to 200% load in microseconds—without the voltage sags that can crash intensive AI training batches.
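To put the 99.0% figure in perspective, the heat a supply must shed follows directly from its efficiency. The sketch below assumes the supply delivers its full 12 kW at the quoted peak efficiency, which is a simplification, and the 97.5% comparison point is a hypothetical baseline, not a figure from any vendor.

```python
def psu_heat_watts(output_watts, efficiency):
    """Heat dissipated by a supply delivering output_watts at the given efficiency."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    input_watts = output_watts / efficiency
    return input_watts - output_watts

heat_sic = psu_heat_watts(12_000, 0.990)       # efficiency quoted in the article
heat_baseline = psu_heat_watts(12_000, 0.975)  # hypothetical comparison point

print(f"{heat_sic:.0f} W vs {heat_baseline:.0f} W of heat per supply")
```

Under these assumptions the 99.0% design dissipates roughly 121 W per supply, well under half of what the hypothetical 97.5% unit would.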

Broader Significance: Decarbonization and the AI Power Crisis

The wider significance of the SiC breakthrough extends far beyond the semiconductor fab. As generative AI continues its exponential growth, the strain on global power grids has become a top-tier geopolitical concern. SiC is the "invisible enabler" of the AI revolution; without the efficiency gains provided by wide bandgap semiconductors, the energy costs of training next-generation Large Language Models (LLMs) would be economically and environmentally unsustainable.

Furthermore, the shift to SiC-enabled 800V DC architectures in data centers is a major milestone in the green energy transition. By moving to higher-voltage DC distribution, facilities can eliminate multiple energy-wasting conversion stages and reduce the need for heavy copper cabling. Research from late 2025 indicates that these architectures can reduce overall data center energy consumption by up to 7%. This aligns with broader global trends toward decarbonization and the "electrification of everything."
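The copper argument can be illustrated with basic resistive-loss arithmetic: for a fixed power, doubling the bus voltage halves the current and cuts I²R loss in the same conductor to a quarter. In the sketch below, the 400 V baseline, the 100 kW load and the 2 mΩ cable resistance are all hypothetical values chosen for illustration, not figures from the research cited above.

```python
def cable_loss_watts(power_watts, bus_volts, cable_ohms):
    """Resistive loss in a distribution run: I^2 * R, with I = P / V."""
    current = power_watts / bus_volts
    return current ** 2 * cable_ohms

POWER_W = 100_000    # hypothetical rack load
CABLE_OHMS = 0.002   # hypothetical round-trip cable resistance

loss_400 = cable_loss_watts(POWER_W, 400, CABLE_OHMS)  # assumed baseline bus
loss_800 = cable_loss_watts(POWER_W, 800, CABLE_OHMS)

print(f"400 V: {loss_400:.1f} W, 800 V: {loss_800:.1f} W")
```

Equivalently, a designer could hold the loss constant and use a thinner conductor at the higher voltage, which is where the cabling savings come from.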

However, this transition is not without concerns. The extreme concentration of SiC manufacturing capability in a handful of high-tech facilities in the U.S., Europe, and Malaysia creates new supply chain vulnerabilities. Much as it depends on the advanced logic chips produced by TSMC, the world is becoming increasingly dependent on a very specific type of hardware to keep its digital and physical infrastructure running. Measured against previous milestones, the SiC 200mm transition is being viewed as the "lithography moment" for power electronics: a fundamental shift in how we manage the world's energy.

Future Horizons: 300mm Wafers and the Rise of Gallium Nitride

Looking ahead, the next frontier for SiC research is already coming into view. While 200mm is the current gold standard, industry experts predict that the first 300mm (12-inch) SiC pilot lines could emerge by late 2028. This would further commoditize high-efficiency power electronics, making SiC viable even for low-cost consumer appliances. Additionally, the interplay between SiC and Gallium Nitride (GaN) is expected to evolve, with SiC dominating high-voltage applications (EVs, grids) and GaN taking over lower-voltage, high-frequency roles (consumer electronics, 5G/6G base stations).

We also expect to see "Smart Power" modules becoming more autonomous. Future iterations will likely feature edge-AI chips embedded directly into the power module to perform real-time health monitoring and predictive maintenance. This would allow a power grid or an EV fleet to "heal" itself by rerouting power or adjusting switching parameters the moment a potential failure is detected. The challenge remains the high initial cost of material synthesis, but as AI-driven yield optimization continues to improve, those barriers are falling faster than anyone predicted two years ago.

Conclusion: The Nervous System of the Energy Transition

The breakthroughs in Silicon Carbide technology witnessed at the start of 2026 mark a definitive end to the era of "good enough" silicon power. The convergence of AI-driven manufacturing and wide bandgap material science has created a virtuous cycle of efficiency. SiC is no longer just a niche material for luxury EVs; it has become the nervous system of the modern energy transition, powering everything from the AI clusters that think for us to the electric grids that sustain us.

As we move through the coming weeks and months, watch for further announcements regarding 200mm yield rates and the deployment of 800V DC architectures in hyperscale data centers. This development is the hardware foundation upon which the sustainable AI era will be built. The "Silicon" in Silicon Valley may soon be sharing the spotlight with "Carbide" as the primary driver of technological progress.
