In a move set to redefine the power semiconductor landscape, onsemi (NASDAQ: ON) and GlobalFoundries (NASDAQ: GFS) have announced a strategic collaboration to develop and manufacture 650V Gallium Nitride (GaN) power devices. This partnership, finalized in late December 2025, marks a critical pivot in the industry as it transitions from traditional 150mm wafers to high-volume 200mm GaN-on-silicon manufacturing. By combining onsemi’s leadership in power systems with GlobalFoundries’ large-scale U.S. fabrication capabilities, the alliance aims to address the skyrocketing energy demands of AI data centers and the efficiency requirements of next-generation electric vehicles (EVs).

The immediate significance of this announcement lies in its creation of a robust, domestic "Made in America" supply chain for wide-bandgap semiconductors. As the global tech industry faces increasing geopolitical pressures and supply chain volatility, the onsemi-GlobalFoundries partnership offers a secure, high-capacity source for the critical components that power the modern digital and green economy. With customer sampling scheduled to begin in the first half of 2026, the collaboration is poised to dismantle the "power wall" that has long constrained the performance of high-density server racks and the range of electric transport.

Scaling the Power Wall: The Shift to 200mm GaN-on-Silicon

The technical cornerstone of this collaboration is the development of 650V enhancement-mode (eMode) lateral GaN-on-silicon power devices. Compared with traditional silicon MOSFETs, GaN offers roughly ten times the critical breakdown field and, in lateral devices, a high-mobility two-dimensional electron gas channel. Together these allow faster switching and lower conversion losses. The move to 200mm (8-inch) wafers is equally significant. A 200mm wafer has about 1.78 times the area of a 150mm wafer, so each wafer yields substantially more die than the previous 150mm industry standard. That lowers unit cost and enables the economies of scale necessary for mass-market adoption.
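The die-count advantage follows largely from geometry. The minimal Python sketch below uses the common gross-die-per-wafer approximation to compare the two wafer sizes. The 4 mm² die is a hypothetical placeholder, not an onsemi or GlobalFoundries figure, and the model ignores yield, scribe lines, and edge exclusion.

```python
import math


def gross_die_per_wafer(wafer_diameter_mm: float, die_area_mm2: float) -> int:
    """Approximate gross die per wafer (no yield, scribe-line, or edge-exclusion loss).

    Common approximation: wafer area / die area, minus an edge-loss term
    for partial die lost around the circumference.
    """
    radius = wafer_diameter_mm / 2
    wafer_area = math.pi * radius ** 2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return math.floor(wafer_area / die_area_mm2 - edge_loss)


# Hypothetical 2 mm x 2 mm die; real 650V GaN die sizes vary by product.
DIE_AREA_MM2 = 4.0

dies_150 = gross_die_per_wafer(150, DIE_AREA_MM2)
dies_200 = gross_die_per_wafer(200, DIE_AREA_MM2)

print(f"150mm wafer: {dies_150} die")
print(f"200mm wafer: {dies_200} die")
print(f"area ratio: {(200 / 150) ** 2:.2f}x, die ratio: {dies_200 / dies_150:.2f}x")
```

The die ratio comes out slightly above the pure area ratio. Edge loss grows with wafer diameter while usable area grows with its square, so a smaller fraction of each larger wafer is wasted on partial die.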

Technically, 650V is the "sweet spot" for high-efficiency power conversion. It provides headroom above the roughly 400V DC buses common in AC-DC power supplies and 400V-class EV systems. Onsemi is pairing its proprietary silicon drivers, advanced controllers, and thermally enhanced packaging with GlobalFoundries’ specialized GaN process. This "system-in-package" approach supports bidirectional power flow and integrated protection, both vital in the high-frequency switching environments of AI power supplies. Higher switching frequencies let designers use smaller passive components such as inductors and capacitors. As a result, these GaN devices can deliver a sharp increase in power density, packing more power into a smaller physical footprint.
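The textbook inductor-sizing relation for an ideal buck converter in continuous conduction illustrates why higher switching frequency shrinks passives. The sketch below assumes a hypothetical 400V-to-48V non-isolated stage with a 2 A ripple target. Real AI server supplies typically combine PFC and isolated DC-DC topologies, so only the inverse scaling with frequency carries over.

```python
def buck_inductance_h(v_in: float, v_out: float, f_sw_hz: float, ripple_a: float) -> float:
    """Inductance for a target peak-to-peak ripple in an ideal buck converter
    in continuous conduction: L = (Vin - Vout) * D / (f_sw * dI), with D = Vout / Vin."""
    duty = v_out / v_in
    return (v_in - v_out) * duty / (f_sw_hz * ripple_a)


# Hypothetical operating point, chosen only to illustrate the scaling.
V_IN, V_OUT, RIPPLE_A = 400.0, 48.0, 2.0

for f_sw in (100e3, 1e6):
    l_h = buck_inductance_h(V_IN, V_OUT, f_sw, RIPPLE_A)
    print(f"{f_sw / 1e3:>6.0f} kHz -> {l_h * 1e6:6.1f} uH")
```

A tenfold increase in switching frequency cuts the required inductance tenfold. This is the main lever that fast-switching devices give designers for shrinking magnetics.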

Early industry reaction has been strongly positive. Power electronics experts note that the transition to 200mm manufacturing is the "tipping point" for GaN to move from niche applications into mainstream infrastructure. Previous GaN efforts were often hampered by low yields and high costs. The combined expertise of these two companies, built on GlobalFoundries’ mature CMOS-compatible fabrication processes, suggests a level of reliability and volume that has so far eluded domestic GaN production.

Strategic Dominance: Reshaping the Semiconductor Supply Chain

The collaboration places onsemi (NASDAQ: ON) and GlobalFoundries (NASDAQ: GFS) in a formidable market position. For onsemi, the partnership accelerates its roadmap to a complete GaN portfolio, covering low, medium, and high voltage applications. For GlobalFoundries, it solidifies its role as the premier U.S. foundry for specialized power technologies. This is particularly timely following Taiwan Semiconductor Manufacturing Company’s (NYSE: TSM) announcement that it would exit the GaN foundry service market by 2027. By licensing TSMC’s 650V GaN technology in late 2025, GlobalFoundries has effectively stepped in to fill a massive vacuum in the global foundry landscape.

Major tech giants building out AI infrastructure, such as Microsoft (NASDAQ: MSFT) and Google (NASDAQ: GOOGL), stand to benefit significantly. AI server racks now demand upwards of 100 kW each. At that scale, the efficiency gains provided by 650V GaN are no longer optional; they are a prerequisite for managing operating costs and cooling requirements. Domestic automakers like Ford (NYSE: F) and General Motors (NYSE: GM) also gain a strategic advantage by securing a U.S.-based source for onboard chargers (OBCs) and DC-DC converters. That helps them meet local-content requirements and insulate their production lines from overseas disruptions.
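The arithmetic below shows why a few points of efficiency matter at this scale by estimating conversion losses for a 100 kW rack. The 96% and 98% efficiencies are hypothetical placeholders, not measured figures for any onsemi or GlobalFoundries part. The model also ignores cooling overhead, which would typically add to the savings.

```python
def conversion_loss_kw(p_out_kw: float, efficiency: float) -> float:
    """Power dissipated as heat in conversion for a given delivered power and efficiency."""
    return p_out_kw * (1 / efficiency - 1)


HOURS_PER_YEAR = 8760
RACK_LOAD_KW = 100.0  # rack load figure from the text

# Hypothetical end-to-end conversion efficiencies, for illustration only.
baseline_eff, improved_eff = 0.96, 0.98

loss_baseline = conversion_loss_kw(RACK_LOAD_KW, baseline_eff)
loss_improved = conversion_loss_kw(RACK_LOAD_KW, improved_eff)
saved_kw = loss_baseline - loss_improved

print(f"loss at {baseline_eff:.0%}: {loss_baseline:.2f} kW")
print(f"loss at {improved_eff:.0%}: {loss_improved:.2f} kW")
print(f"heat avoided: {saved_kw:.2f} kW per rack, "
      f"{saved_kw * HOURS_PER_YEAR / 1000:.1f} MWh per year at full load")
```

Under these assumptions, two points of efficiency roughly halve the heat generated in conversion.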

The competitive implications are stark. This alliance creates a "moat" around the U.S. power semiconductor industry, leveraging CHIPS Act funding—including the $1.5 billion previously awarded to GlobalFoundries—to build a manufacturing powerhouse. Existing players who rely on Asian foundries for GaN production may find themselves at a disadvantage as "Made in America" mandates become more prevalent in government and defense-linked aerospace projects, where thermal efficiency and supply chain security are paramount.

The AI and Electrification Nexus: Broadening the Horizon

This development fits into a broader global trend in which the energy transition and the AI revolution are converging. The massive energy footprint of generative AI has forced a reckoning in data center design. GaN technology is a key pillar of this transformation, enabling the high-efficiency power supply units (PSUs) needed to keep pace with the power-hungry GPUs and TPUs driving the AI boom. By reducing energy waste at the conversion stage, these 650V devices directly contribute to the decarbonization goals of the world’s largest technology firms.

The "Made in America" aspect is central to the deal. By centering production in Malta, New York, and Burlington, Vermont, the partnership revitalizes U.S. manufacturing in a sector long dominated by offshore facilities. This shift mirrors the earlier transition from silicon to silicon carbide (SiC) in the EV industry, with GaN offering even greater potential in high-frequency applications and consumer electronics. The move signals a broader strategic intent to maintain technological sovereignty in the foundational components of the 21st-century economy.

However, the transition is not without its hurdles. While the performance benefits of GaN are clear, the industry must still navigate the complexities of integrating these new materials into existing system architectures. There are also concerns regarding the long-term reliability of GaN-on-silicon under the extreme thermal cycling found in automotive environments. Nevertheless, the collaboration between onsemi and GlobalFoundries represents a major milestone, comparable to the initial commercialization of the IGBT in the 1980s, which revolutionized industrial motor drives.

From Sampling to Scale: What Lies Ahead for GaN

In the near term, the focus will be on the successful rollout of customer samples in the first half of 2026. This period will be critical for validating the performance and reliability of the 200mm GaN-on-silicon process in real-world conditions. Beyond AI data centers and EVs, the horizon for these 650V devices includes applications in solar microinverters and energy storage systems (ESS), where high-efficiency DC-to-AC conversion is essential for maximizing the output of renewable energy sources.

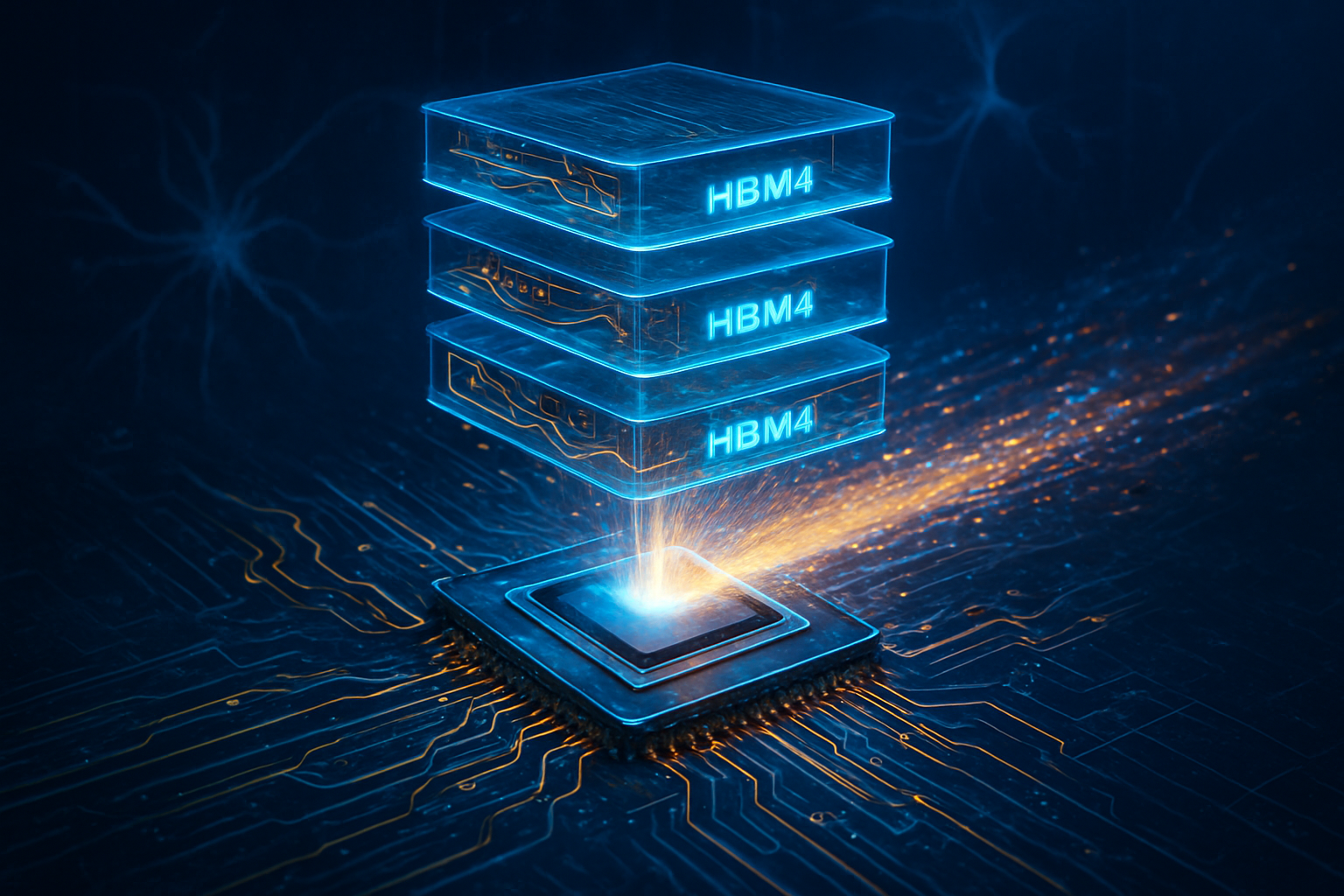

Experts predict that as manufacturing yields stabilize on the 200mm platform, the cost-per-watt of GaN devices will decline rapidly, potentially reaching parity with high-end silicon MOSFETs by late 2027. This would trigger a second wave of adoption in consumer electronics, such as ultra-fast chargers for laptops and smartphones. The next technical frontier will likely be 800V and 1200V GaN devices. The 1200V class in particular provides the voltage headroom required by the 800V battery architectures becoming common in high-performance electric vehicles.

Another major challenge is the talent gap in wide-bandgap semiconductor engineering. As manufacturing returns to U.S. soil, demand for specialized engineers who understand the nuances of GaN design and fabrication is expected to surge. Both onsemi and GlobalFoundries are likely to increase their investments in university partnerships and domestic training programs to ensure the long-term viability of this new manufacturing ecosystem.

A New Era of Domestic Power Innovation

The collaboration between onsemi and GlobalFoundries is more than just a business deal; it is a strategic realignment of the power semiconductor industry. By focusing on 650V GaN-on-silicon at the 200mm scale, the two companies are positioning themselves at the heart of the AI and EV revolutions. The key takeaways are clear: domestic manufacturing is back, GaN is ready for the mainstream, and the "power wall" is finally being breached.

In the context of semiconductor history, this partnership may be viewed as the moment when the United States reclaimed its lead in power electronics manufacturing. The long-term impact will be felt in more efficient data centers, faster-charging EVs, and a more resilient global supply chain. In the coming weeks and months, the industry will be watching closely for the first performance data from the 200mm pilot lines and for further announcements regarding the expansion of this GaN platform into even higher voltage ranges.

This content is intended for informational purposes only and represents analysis of current AI developments.

TokenRing AI delivers enterprise-grade solutions for multi-agent AI workflow orchestration, AI-powered development tools, and seamless remote collaboration platforms.

For more information, visit https://www.tokenring.ai/.